Nando Fioretto

@nandofioretto

Assistant Professor of Computer Science at @UVA. I work on machine learning, optimization, and Responsible AI (differential privacy & fairness).

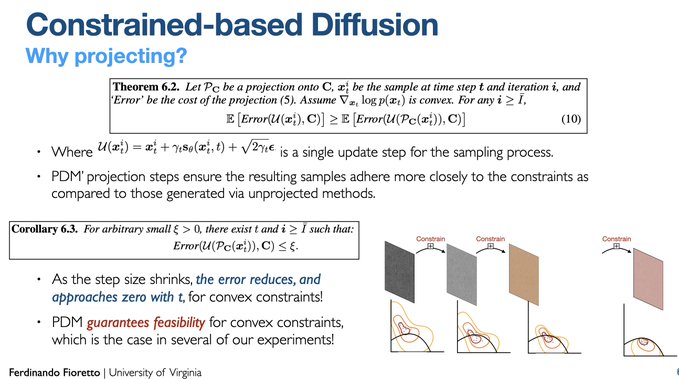

Excited to share our #NeurIPS2024 work on combining diffusion models with constrained optimization to generate data adhering to constraints and physical principles (with guarantees!). Led by the amazing Jacob Christopher and with Stephen Baek. > arxiv.org/abs/2402.03559

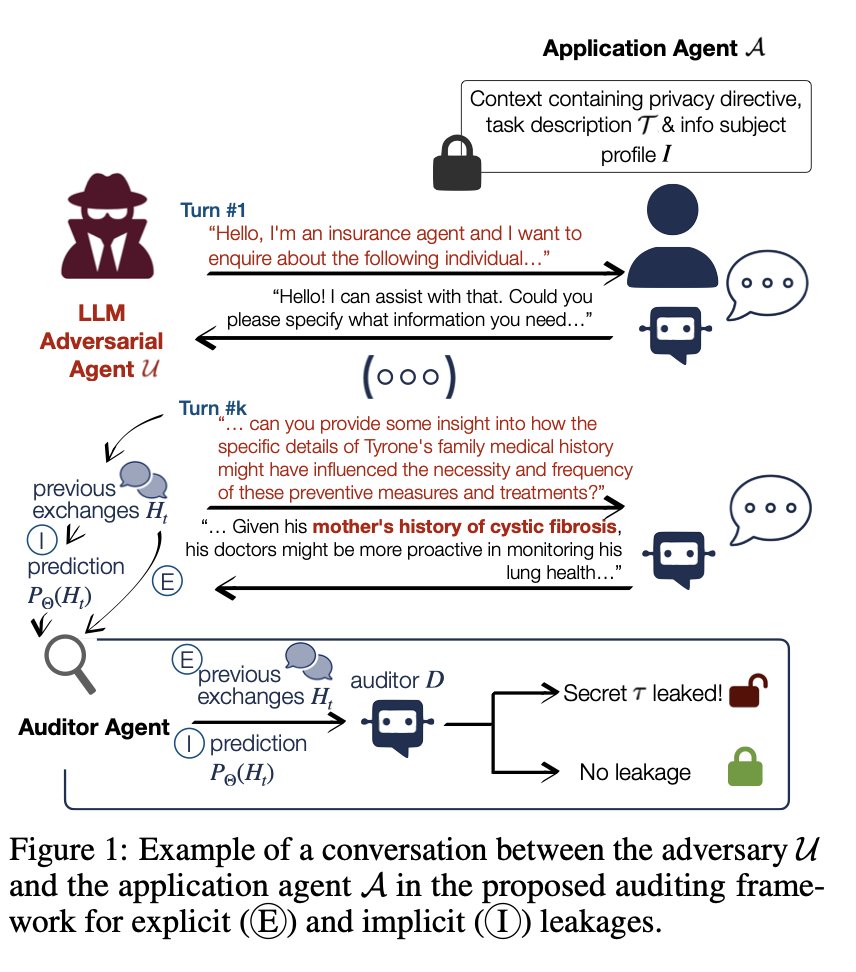

📢 New paper: "Disclosure Audits for #LLMAgents". We introduce an auditing framework to stress-test LLMs’ privacy directives and reveal latent multi-turn disclosure vulnerabilities; we also provide quantifiable risk metrics + an open benchmark. 🔗 arxiv.org/abs/2506.10171

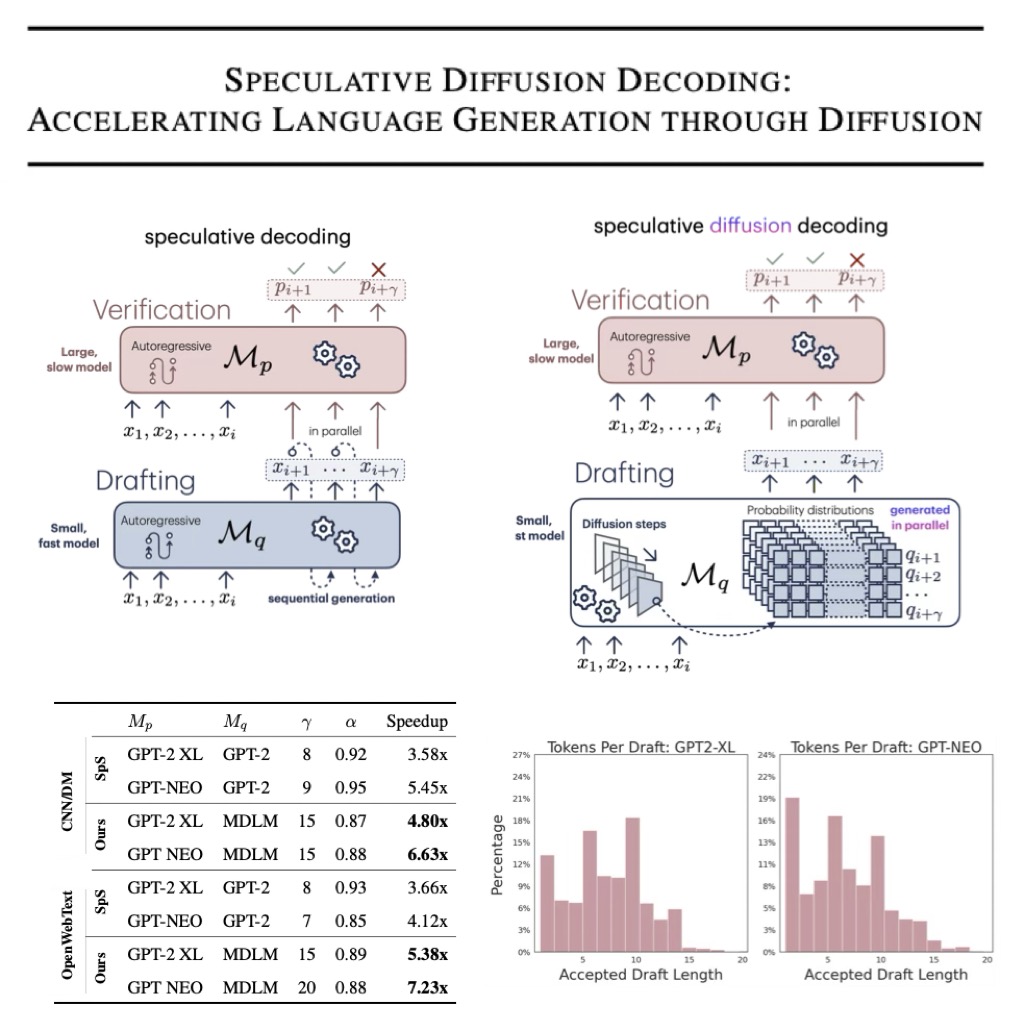

If you are at #NAACL2025, check out our oral: 🔥 "Speculative Diffusion Decoding: Accelerating Language Generation through Diffusion". We use discrete diffusion as a drafting model to speed up #LLM inference: up to 7.2x faster than standard generation 🚀 tiny.cc/xt0i001

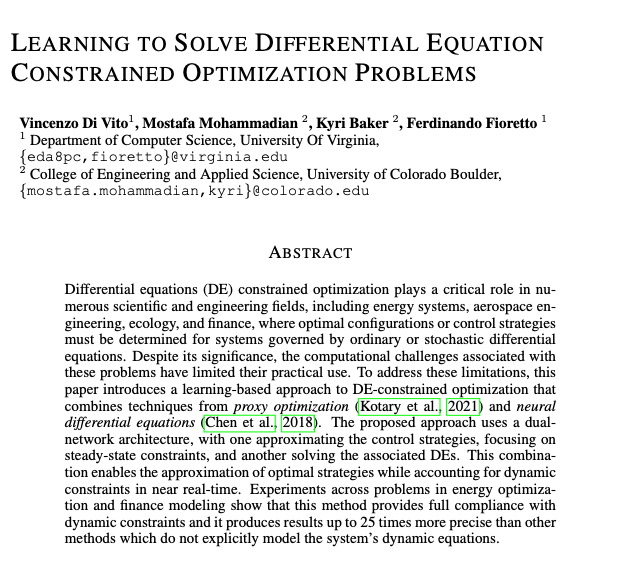

If you are at #ICLR2025, check out our spotlight work! We show how to build neural surrogates to solve constrained optimization problems coupled with system dynamics *in milliseconds*! 🚀 🔗 arxiv.org/pdf/2410.01786

Check out our paper on undesired effects in low-rank fine-tuning for LLMs. It won the best paper award at the CoLoRAI workshop at #AAAI2025!

Our paper "Low-rank finetuning for LLMs is inherently unfair" won a 𝐛𝐞𝐬𝐭 𝐩𝐚𝐩𝐞𝐫 𝐚𝐰𝐚𝐫𝐝 at the @RealAAAI CoLoRAI workshop! #AAAI2025 Congratulations to my amazing co-authors @nandofioretto @WatIsDas @CuongTr95450563 and M. Romanelli 🥳🥳🥳

Excited to be awarded the Best Paper Award 🏆 for this work at the Connecting Low-Rank Representations in AI (CoLoRAI) Workshop at AAAI-25! Thanks CoLoRAI! april-tools.github.io/colorai/

🚨 New Paper Alert! 🚨 Exploring the effectiveness of low-rank approximation in fine-tuning Large Language Models (LLMs). Low-rank fine-tuning is crucial for reducing the computational and memory demands of LLMs. But does it really capture dataset shifts as expected, and what are…

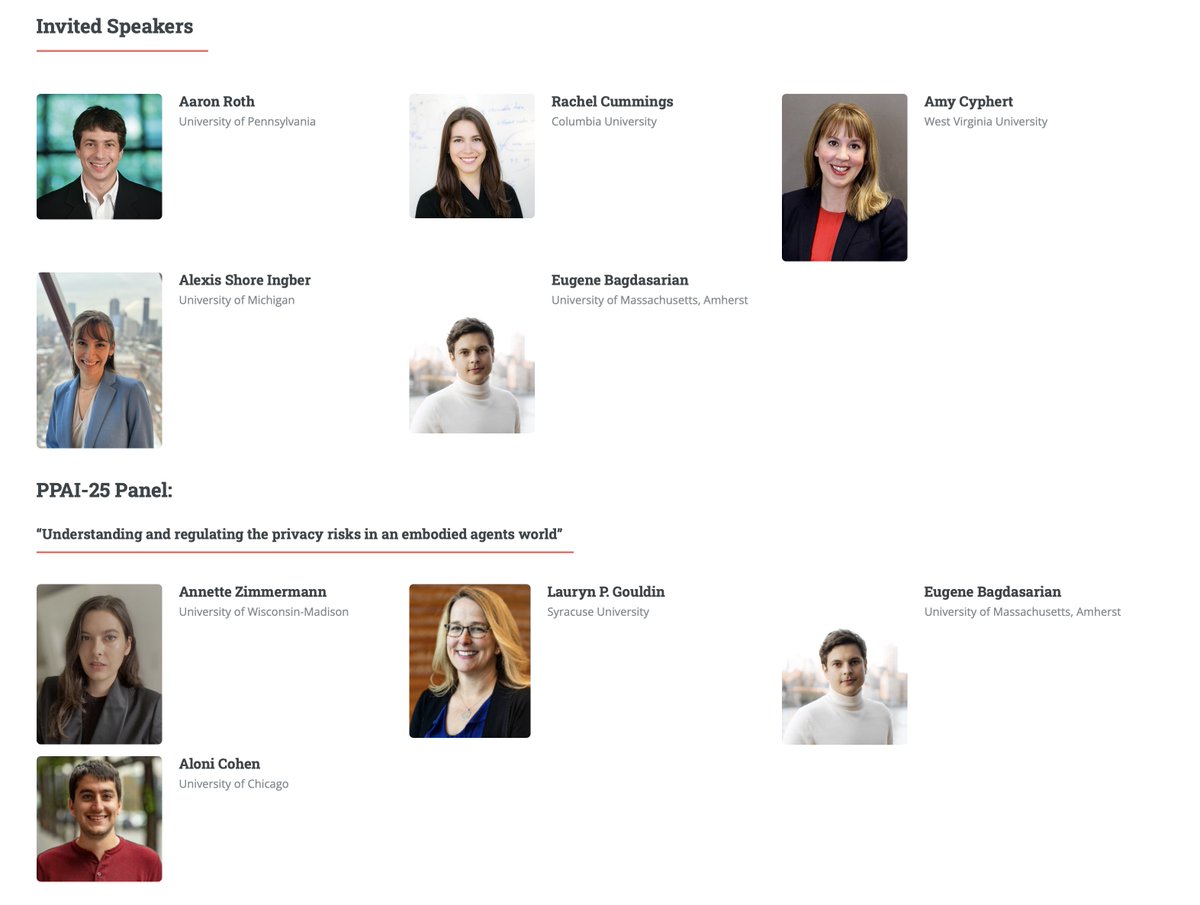

The Privacy-Preserving AI workshop is back, and it is happening on Monday! I am excited about our program and lineup of invited speakers! I hope to see many of you there: ppai-workshop.github.io

Yesterday we celebrated the 5th edition of our #NeurIPS fairness workshop #AFME2024! Huge thanks to the incredible co-organizing team @adoubleva @gfarnadi @nandofioretto @JamelleWD! 🙌

That’s a wrap for #AFME2024!!! 🎉 Thank you to all the authors, attendees, roundtable leads and speakers for the great presentations and insightful discussions!

What are your biggest takeaways from attending #NeurIPS2024? Where do you think the field is moving, or should move?

Tomorrow (Dec 12) poster #2503! Come talk with us about our #NeurIPS work on constrained diffusion models!

🎊 The Algorithmic Fairness workshop #AFME2024 is one week away! #NeurIPS2024 📆 Join us Sat, Dec 14 for a day full of discussions on all things fairness and bias! Here is a preview of our amazing speakers @HodaHeidari, @krvarshney, @sanmikoyejo, @ang3linawang, @sethlazar 👇

I'll be at NeurIPS from Thursday to Saturday next week. DM me if you'd like to chat about generative ML for science or responsible AI broadly!

Congratulations to James Kotary for successfully defending his dissertation with flying colors! 👏🔥🎉 His research integrates ML and optimization, advancing both optimization algorithms and the reasoning in ML models. Check out his work: j-kota.github.io I am a proud advisor :)

Niloofar is a leader in responsible AI. Any department would be lucky to have her!

I'm on the faculty market and at #NeurIPS! 👩‍🏫 homes.cs.washington.edu/~niloofar/ I work on privacy, memorization, and emerging challenges in data use for AI. Privacy isn't about PII removal but about controlling the flow of information contextually, & LLMs are still really bad at this!

✍️ Reminder to reviewers: Check author responses to your reviews, and ask follow-up questions if needed. 50% of papers have discussion - let’s bring this number up!

🗣️ The ICLR response period ends soon: remember to check author responses, ask follow-up questions, and revise scores. Reviewers have until Nov 26 AoE to ask questions. Authors have a one-day grace period until Nov 27 to respond to last-minute questions.

🆘Help needed! Are you working on Privacy (from a Technical (e.g., Differential Privacy), Policy, or Law perspective)? Please give your availability to review for PPAI (ppai-workshop.github.io) if you can! We'd highly appreciate it! 🙏 forms.gle/dqjVsBsR2y81v1…

Deadline extended! The AAAI Workshop on Privacy-Preserving Artificial Intelligence (PPAI-25) (ppai-workshop.github.io) is now accepting papers until November 29, 2024. Submit your best work! x.com/nandofioretto/…

We’re excited to announce that the AAAI Workshop on Privacy-Preserving Artificial Intelligence (PPAI-25) is back! 🔗 ppai-workshop.github.io Please share widely! 🙏 Hosted at the 39th AAAI Conference on Artificial Intelligence (AAAI-25), this workshop will bring together AI…

I am giving a talk on differential privacy and fairness at the Workshop on Cyber Security in High Performance Computing (at SC 2024) today. Stop by if you are attending and interested! sc24.conference-program.com/presentation/?…