Morph (4,500+ tok/sec)

@morphllm

The Fastest Way to Apply AI Edits to Code and Files. YC S23 https://morphllm.com https://discord.gg/AdXta4yxEK

Same is helping host THE FIRST coding agent hackathon at the YC office aug 9, 9am. dm me for an invite.

Today, avante.nvim has two updates: 1. Fast apply is now supported, using morphllm.com to greatly reduce failures when editing files. 2. Just set provider = 'moonshot' for one-click access to the kimi/k2 models.

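Cursor built its own small model to handle this; Cline's approach only reduces the amount of output, and the underlying problems are still there. The community option is the FastApply model; commercial options include morphllm.com and the like.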

Kimi K2 outperforms Gemini 2.5 Pro and matches Sonnet-4 in real-world diff edit failure rates.

Using @RepoPrompt with @OpenAI o3 in pair-programming mode alongside @AnthropicAI Claude Code, with edits applied through @morphllm, to complete the migration to Hive.

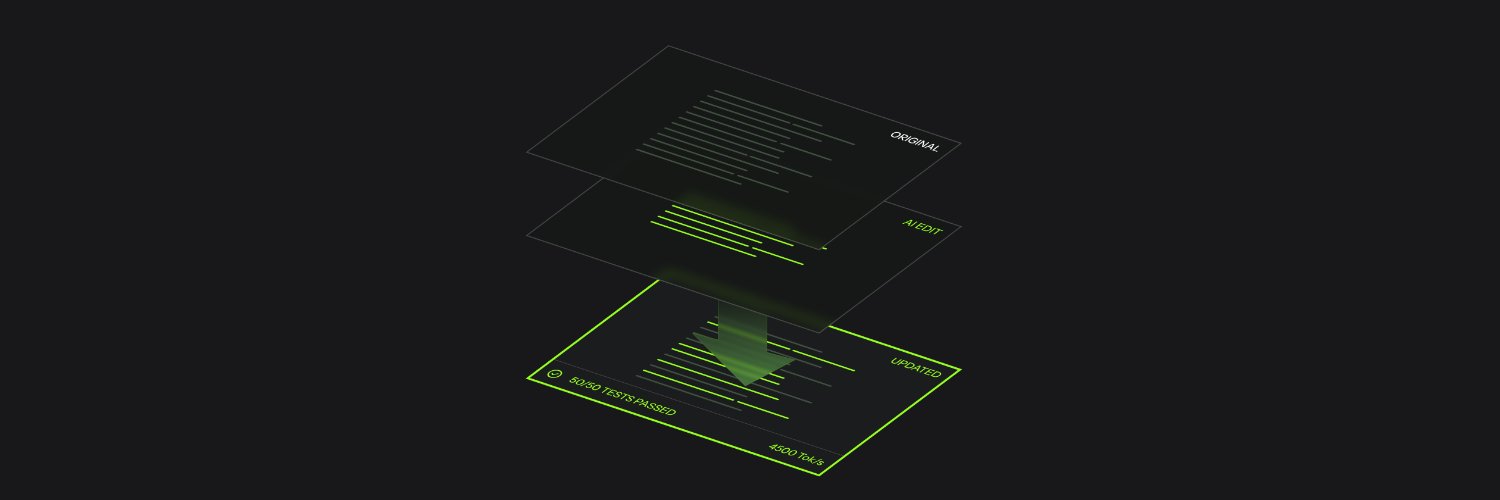

Morph was #3 on the OpenRouter trending chart, just behind Grok and Kimi K2! We built Morph to do one thing: apply code edits accurately, FAST (4,500 tokens/sec), and more cheaply than full file rewrites or search & replace. Here's how it works 🧵
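A rough way to see what the 4,500 tokens/sec figure buys is to compare emit times for one file. This sketch assumes the apply model emits the full merged file, and the 50 tokens/sec speed for a large model rewriting the same file is a hypothetical number chosen for illustration, not a measurement.

```python
MORPH_TOKENS_PER_SEC = 4_500      # throughput quoted in the post above
ASSUMED_LLM_TOKENS_PER_SEC = 50   # hypothetical speed of a large model doing a full rewrite


def seconds_to_emit(tokens: int, tokens_per_sec: float) -> float:
    """Time to generate `tokens` output tokens at a constant rate."""
    return tokens / tokens_per_sec


file_tokens = 9_000  # hypothetical file size in tokens
print(seconds_to_emit(file_tokens, MORPH_TOKENS_PER_SEC))        # 2.0
print(seconds_to_emit(file_tokens, ASSUMED_LLM_TOKENS_PER_SEC))  # 180.0
```

Real throughput varies with provider load and file content, so treat this as back-of-the-envelope arithmetic only.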

The issue I kept having with string replace was in situations like this, where fast apply works a lot better: x.com/ErikBjare/stat…

Another case where morph shines: gptme runs pre-commit hooks (format, lint fix, etc.) after edits. This used to confuse gptme, because the hooks changed the file contents and search/replace needs an exact match. Morph uses the latest file when generating the actual patch, so it works!
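The failure mode described above can be reproduced in a few lines. This is a minimal sketch, not gptme's actual code: `search_replace` is a hypothetical exact-match edit helper, and `fake_formatter` is a hypothetical stand-in for a pre-commit hook that normalizes quotes.

```python
class SearchNotFound(Exception):
    """Raised when the search block does not occur verbatim in the file."""


def search_replace(content: str, search: str, replace: str) -> str:
    """Apply one exact-match search/replace edit, as a diff-style edit tool would."""
    count = content.count(search)
    if count == 0:
        raise SearchNotFound("search block not found verbatim")
    if count > 1:
        raise ValueError("search block is ambiguous")
    return content.replace(search, replace, 1)


def fake_formatter(content: str) -> str:
    """Hypothetical pre-commit hook: rewrite single quotes as double quotes."""
    return content.replace("'", '"')


# The snapshot the agent last read, and the edit it planned against it.
seen_by_agent = "name = 'gptme'\nprint('hello')\n"
search = "print('hello')"
replace = "print('hello, world')"

# A hook runs after the previous edit and rewrites the file on disk.
on_disk = fake_formatter(seen_by_agent)

try:
    search_replace(on_disk, search, replace)
    applied = True
except SearchNotFound:
    applied = False

print(applied)  # False: the stale search block no longer matches
```

The same edit succeeds against `seen_by_agent`, which is the point: the edit itself is fine, only the exact-match anchor went stale.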

I'd be curious to see benchmarks for stuff like @morphllm, since most of my diff-apply usage has been replaced by it.

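The avante.nvim + kimi-k2 + morph fastapply combo is a perfect match and a real joy to use 😍: cheap, powerful, fast, and smooth. Yesterday I vibe coded for 9 hours and it cost me only $4.6! Of that, k2 cost $4.1 and morph $0.5. Screenshots as proof: image 1 is my Moonshot spending, image 2 is my Morph spending, and image 3 is the coding-time chart from WakaTime.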

I found that the combination of kimi-k2 and morph fastapply is incredibly effective. Since kimi-k2 is a bit slow, using morph fastapply means that when calling the edit_file tool, kimi-k2 only needs to return the path and edit_code, without the lengthy search_code part,…
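To make the payload difference concrete, here is a sketch of two tool-call argument sets for the same one-line change. The field names `path`, `edit_code` and `search_code` come from the post; `replace_code`, the `# ... existing code ...` marker convention, and the file contents are assumptions for illustration, not avante.nvim's or Morph's actual schema.

```python
import json

# Hypothetical file region the model wants to change.
original_block = (
    "def load_config(path):\n"
    "    with open(path) as f:\n"
    "        raw = f.read()\n"
    "    data = parse(raw)\n"
    "    validate(data)\n"
    "    return data\n"
)
updated_block = original_block.replace("validate(data)", "validate(data, strict=True)")

# Search/replace style: the model must echo the old block verbatim, then the new one.
search_replace_args = {
    "path": "config.py",
    "search_code": original_block,
    "replace_code": updated_block,  # hypothetical field name
}

# Fast-apply style: only the changed line plus a little context;
# the apply model is responsible for merging it into the file.
fast_apply_args = {
    "path": "config.py",
    "edit_code": (
        "# ... existing code ...\n"
        "    data = parse(raw)\n"
        "    validate(data, strict=True)\n"
        "# ... existing code ...\n"
    ),
}

sr_chars = len(json.dumps(search_replace_args))
fa_chars = len(json.dumps(fast_apply_args))
print(f"search/replace payload: {sr_chars} chars")
print(f"fast-apply payload:     {fa_chars} chars")
```

Character counts are only a proxy for output tokens, but the shape of the saving is the same: the slow model never has to re-emit the old code.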

remember when we used to use chatgpt to generate code and copy it over to vscode?

Grok 4 and Kimi K2 competing on top of the Trending models charts

Morph 🩷 nvim

The success of Kimi K2 is no accident. The unfortunate reality in AI is that user experiences haven't yet fully caught up to raw model capabilities. Experiences have plateaued. There are only so many coding assistants, research tools, or agents you can realistically offer, and…

Just switched out the edit_file tool to the new MCP by @morphllm and was giving the hyped up @Kimi_Moonshot a go but yeah, free tier struck me out. Losing track of how many places I have credits in!