mohit

@mohitwt_

19 • ml • stay hard

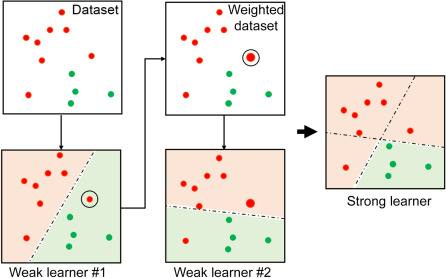

dev log: > wrapped up Random Forest and how it builds multiple decision trees on different bootstrap samples of the data > understood how it uses bagging, an ensemble technique, to improve accuracy and reduce overfitting > started FastAPI for a project > Random Forest: done.
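A minimal sketch of the bagging idea in plain Python, standard library only. `bootstrap_sample` draws rows with replacement (the "different samples" each tree gets), and `bagged_prediction` averages several models. Both function names and the toy mean-predicting "models" are hypothetical, for illustration only.

```python
import random


def bootstrap_sample(rows, rng):
    """Draw len(rows) items with replacement: the resampling step behind bagging."""
    return rng.choices(rows, k=len(rows))


def bagged_prediction(models, x):
    """Bagging for regression: average the outputs of models fit on different bootstrap samples."""
    return sum(model(x) for model in models) / len(models)


rng = random.Random(0)
data = list(range(1000))
sample = bootstrap_sample(data, rng)
# The expected unique fraction is 1 - (1 - 1/n)^n, about 0.632 for large n,
# so each bootstrap sample contains only ~63% of the distinct rows.
unique_fraction = len(set(sample)) / len(data)
print(f"{len(sample)} draws, {unique_fraction:.2f} of rows appear at least once")

# Toy "models" (hypothetical): each one just predicts the mean of its own bootstrap sample.
models = []
for _ in range(10):
    boot = bootstrap_sample(data, rng)
    mean = sum(boot) / len(boot)
    models.append(lambda x, m=mean: m)  # default arg pins this model's mean

# Each bootstrap mean is noisy; averaging 10 of them reduces that noise.
print(f"bagged prediction: {bagged_prediction(models, None):.1f}")
```

Averaging is what cuts variance: individual models trained on different resamples make partly independent errors, and those errors partly cancel.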

Gradient Boosting, from the first few models until it started overfitting:
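To make "until it started overfitting" concrete, here is a hedged sketch of gradient boosting for squared-error regression: each new stump fits the current residuals, and training and validation MSE are logged after every round. The noisy-sine data, `fit_stump`, `gradient_boost`, `n_rounds` and `learning_rate` are all illustrative assumptions, not the setup behind the image.

```python
import math
import random


def fit_stump(xs, targets):
    """Fit a depth-1 regression tree (a stump) on one feature by minimizing squared error."""
    best = None
    for threshold in sorted(set(xs)):
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        if not left or not right:
            continue
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # all xs identical: fall back to a constant prediction
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def gradient_boost(x_train, y_train, x_val, y_val, n_rounds=150, learning_rate=0.3):
    """Boost stumps on squared error; record (train MSE, validation MSE) after each round."""
    base = sum(y_train) / len(y_train)  # start from a constant model
    train_pred = [base] * len(x_train)
    val_pred = [base] * len(x_val)
    history = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is simply the residual y - prediction.
        residuals = [y - p for y, p in zip(y_train, train_pred)]
        stump = fit_stump(x_train, residuals)
        train_pred = [p + learning_rate * stump(x) for p, x in zip(train_pred, x_train)]
        val_pred = [p + learning_rate * stump(x) for p, x in zip(val_pred, x_val)]
        history.append((mse(train_pred, y_train), mse(val_pred, y_val)))
    return history


rng = random.Random(42)


def make_data(n):
    """Hypothetical toy data: y = sin(x) plus Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0, 0.5) for x in xs]
    return xs, ys


x_train, y_train = make_data(60)
x_val, y_val = make_data(60)
history = gradient_boost(x_train, y_train, x_val, y_val)
best_round = min(range(len(history)), key=lambda i: history[i][1]) + 1
print(f"lowest validation MSE at round {best_round} of {len(history)}")
print(f"final train MSE {history[-1][0]:.3f}, final validation MSE {history[-1][1]:.3f}")
```

With a least-squares stump and a learning rate below 2, training MSE never increases here, so it is a poor guide on its own. The round with the lowest validation MSE is the natural early-stopping point; rounds past it are typically fitting noise.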

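1/ What is Random Forest? A Random Forest is not just one decision tree; it’s a group of them, each trained on a different random sample of the data. Each tree gives its own answer, and the forest takes a majority vote (for classification) or averages their predictions (for regression). More trees usually mean more stable results, with diminishing returns past a certain point.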
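The voting described above can be sketched from scratch in plain Python. This is a simplified Random Forest, assuming small depth-limited trees split on Gini impurity: every tree gets its own bootstrap sample, every split considers only `n_features` randomly chosen features, and prediction is a majority vote. All function names, the toy dataset, and the default hyperparameters are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def build_tree(rows, labels, depth, max_depth, n_features, rng):
    """Grow a classification tree; each split looks at a random subset of features."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1:
        return majority  # leaf: predict the majority class
    best = None
    for f in rng.sample(range(len(rows[0])), n_features):
        for threshold in sorted({row[f] for row in rows}):
            left = [i for i, row in enumerate(rows) if row[f] <= threshold]
            right = [i for i, row in enumerate(rows) if row[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini([labels[i] for i in left])
                     + len(right) * gini([labels[i] for i in right])) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, threshold, left, right)
    if best is None:
        return majority
    _, f, threshold, left, right = best

    def grow(idx):
        return build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                          depth + 1, max_depth, n_features, rng)

    return (f, threshold, grow(left), grow(right))  # internal node


def predict_tree(node, row):
    while isinstance(node, tuple):
        f, threshold, left, right = node
        node = left if row[f] <= threshold else right
    return node


def fit_forest(rows, labels, n_trees=15, max_depth=3, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in range(len(rows))]  # bootstrap sample
        forest.append(build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                                 0, max_depth, n_features, rng))
    return forest


def predict_forest(forest, row):
    """Classification: majority vote over all trees."""
    votes = Counter(predict_tree(tree, row) for tree in forest)
    return votes.most_common(1)[0][0]


data_rng = random.Random(1)


def make_data(n):
    """Hypothetical toy data: label is 1 when x0 + x1 > 1."""
    rows = [(data_rng.random(), data_rng.random()) for _ in range(n)]
    return rows, [int(x0 + x1 > 1) for x0, x1 in rows]


train_rows, train_labels = make_data(100)
test_rows, test_labels = make_data(100)
forest = fit_forest(train_rows, train_labels)
accuracy = sum(predict_forest(forest, r) == y for r, y in zip(test_rows, test_labels)) / len(test_rows)
print(f"held-out accuracy: {accuracy:.2f}")
```

For regression, the vote would become the mean of the trees' outputs (and the Gini criterion a squared-error one). In practice a library such as scikit-learn's `RandomForestClassifier` does all of this far more efficiently.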

You’re training a model, and the training accuracy is 95%, but the validation accuracy is only 70%. What’s likely happening, and how might you fix it?
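A 25-point gap between training and validation accuracy is the classic sign of overfitting (high variance): the model has memorized quirks of the training data. Below is a rough rule-of-thumb sketch; `diagnose_fit` and its thresholds `max_gap` and `min_train_acc` are hypothetical and would need tuning per problem.

```python
def diagnose_fit(train_acc, val_acc, max_gap=0.05, min_train_acc=0.80):
    """Rule-of-thumb diagnosis from train/validation accuracy (thresholds are illustrative)."""
    gap = train_acc - val_acc
    if train_acc < min_train_acc:
        return "underfitting: try a more expressive model or better features"
    if gap > max_gap:
        return (f"overfitting ({gap:.0%} gap): limit tree depth or add regularization, "
                "get more data, use bagging/Random Forest, or stop boosting earlier")
    return "reasonable fit"


print(diagnose_fit(0.95, 0.70))
```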