Mohit Bansal

@mohitban47

Parker Distinguished Prof @UNC. PECASE/AAAI Fellow. Director http://MURGeLab.cs.unc.edu (@unc_ai_group). Past @Berkeley_AI @TTIC_Connect @IITKanpur #NLP #CV #AI

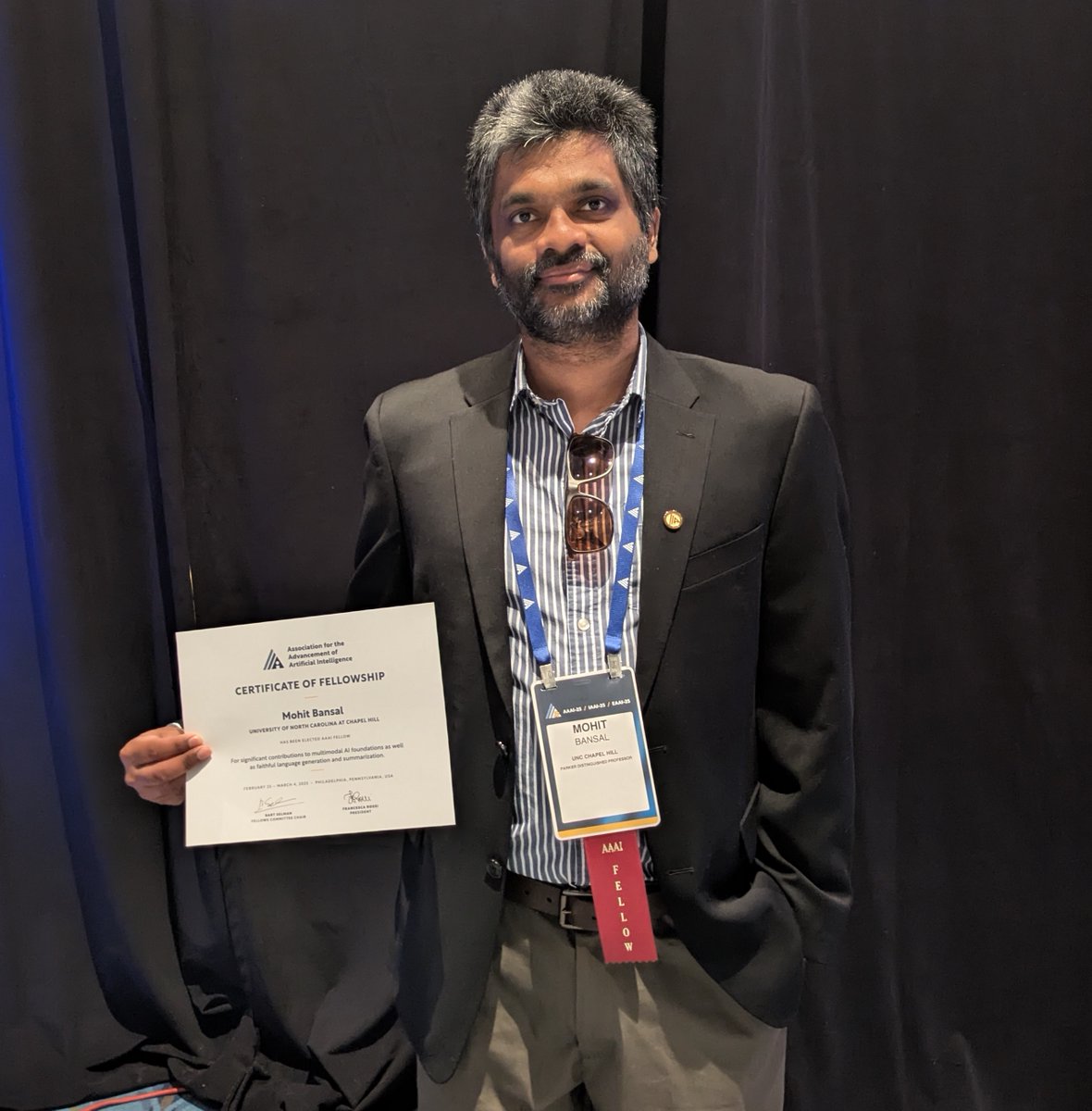

Thank you @RealAAAI for the honor & the fun ceremonies -- humbled to be inducted as a AAAI Fellow in esteemed company 🙏 PS. I am still around today in Philadelphia if anyone wants to meet up at #AAAI2025 :-) Thanks once again to everyone (students+postdocs+collaborators,…

My talented collaborator & mentor @jaemincho will be recruiting PhD students at @JHUCompSci for Fall 2026! If you're interested in vision, language, or generative models, definitely reach out!🎓🙌

🥳 Gap year update: I'll be joining @allen_ai/@UW for 1 year (Sep2025-Jul2026 -> @JHUCompSci) & looking forward to working with amazing folks there, incl. @RanjayKrishna, @HannaHajishirzi, Ali Farhadi. 🚨 I’ll also be recruiting PhD students for my group at @JHUCompSci for Fall…

🚨 Excited to announce GrAInS, our new LLM/VLM steering method that uses gradient-based attribution to build more targeted interventions. Some highlights: 1️⃣ Compatible with both LLMs and VLMs, can intervene on text and vision tokens 2️⃣ Gains across a variety of tasks +…

🚀 We introduce GrAInS, a gradient-based attribution method for inference-time steering (of both LLMs & VLMs). ✅ Works for both LLMs (+13.2% on TruthfulQA) & VLMs (+8.1% win rate on SPA-VL). ✅ Preserves core abilities (<1% drop on MMLU/MMMU). LLMs & VLMs often fail because…

📢 Excited to share our new paper, where we introduce ✨GrAInS✨, an inference-time steering approach for LLMs and VLMs via token attribution. Some highlights: ➡️ GrAInS leverages contrastive, gradient-based attribution to identify the most influential textual or visual tokens…

Big congrats! If you’re thinking about a PhD in multimodal reasoning, generation, and evaluation, go work with @jmin__cho!

Jaemin is amazing and I would highly recommend applying for a PhD with him.

Sharing some personal updates 🥳: - I've completed my PhD at @unccs! 🎓 - Starting Fall 2026, I'll be joining the Computer Science dept. at Johns Hopkins University (@JHUCompSci) as an Assistant Professor 💙 - Currently exploring options + finalizing the plan for my gap year (Aug…

🎉 Our paper, GenerationPrograms, which proposes a modular framework for attributable text generation, has been accepted to @COLM_conf! GenerationPrograms produces a program that executes to text, providing an auditable trace of how the text was generated and major gains on…

Excited to share GenerationPrograms! 🚀 How do we get LLMs to cite their sources? GenerationPrograms is attributable by design, producing a program that executes to text w/ a trace of how the text was generated! Gains of up to +39 Attribution F1 and eliminates uncited sentences,…

The MUGen workshop at #ICML2025 is happening now! Stop by for talks on adversarial ML, unlearning as rational belief revision, failure modes in unlearning, robust LLM unlearning, and the bright vs. dark side of forgetting in generative AI!

🚨Exciting @icmlconf workshop alert 🚨 We’re thrilled to announce the #ICML2025 Workshop on Machine Unlearning for Generative AI (MUGen)! ⚡Join us in Vancouver this July to dive into cutting-edge research on unlearning in generative AI—featuring an incredible lineup of…

I’ll be at #ICML2025 this week to present ScPO: 📌 Wednesday, July 16th, 11:00 AM-1:30 PM 📍East Exhibition Hall A-B, E-2404 Stop by or reach out to chat about improving reasoning in LLMs, self-training, or just tips about being on the job market next cycle! 😃

🚨 Self-Consistency Preference Optimization (ScPO)🚨 - New self-training method without human labels - learn to make the model more consistent! - Works well for reasoning tasks where RMs fail to evaluate correctness. - Close to performance of supervised methods *without* labels,…

Overdue job update -- I am now: - A Visiting Scientist at @schmidtsciences, supporting AI safety and interpretability - A Visiting Researcher at the Stanford NLP Group, working with @ChrisGPotts I am so grateful I get to keep working in this fascinating and essential area, and…

🎉 Glad to see our work on handling conflicting & noisy evidence and ambiguous queries in RAG systems (via a new benchmark & multi-agent debate method) has been accepted to #COLM2025 @COLM_conf!! 🇨🇦 Congrats to Han on leading this effort. More details in the thread below and…

🚨Real-world retrieval is messy: queries can be ambiguous, or documents may conflict/have incorrect/irrelevant info. How can we jointly address all these problems? We introduce: ➡️ RAMDocs, a challenging dataset with ambiguity, misinformation, and noise. ➡️ MADAM-RAG, a…

🥳 Excited to share our work -- Retrieval-Augmented Generation with Conflicting Evidence -- on addressing conflict in RAG due to ambiguity, misinformation, and noisy/irrelevant evidence has been accepted to @COLM_conf #COLM2025! Our new benchmark RAMDocs proves challenging for…

🚨 Check out our new paper, Video-RTS, a novel and data-efficient RL solution for complex video reasoning tasks, complete with video-adaptive Test-Time Scaling (TTS). 1️⃣ Traditionally, such tasks have relied on massive SFT datasets. Video-RTS bypasses this by employing pure RL with…

🚨Introducing Video-RTS: Resource-Efficient RL for Video Reasoning with Adaptive Video TTS! While RL-based video reasoning with LLMs has advanced, the reliance on large-scale SFT with extensive video data and long CoT annotations remains a major bottleneck. Video-RTS tackles…

🚀 Check out our new paper Video-RTS — a data-efficient RL approach for video reasoning with video-adaptive TTS! While prior work relies on massive SFT (400K+ VQA and/or CoT samples 🤯), Video-RTS: ▶️ Replaces expensive SFT with pure RL using output-based rewards ▶️ Introduces a…

Video-RTS: Rethinking Reinforcement Learning and Test-Time Scaling for Efficient and Enhanced Video Reasoning

I've officially joined Meta Superintelligence Labs (MSL) org in the Bay Area. I'll be working on critical aspects of pre-training, synthetic data and RL for the next generation of models. Humbled and eager to contribute to the quest for superintelligence. @AIatMeta

🎉 Very excited to see TaCQ — our work on task-conditioned mixed-precision quantization that draws on interpretability methods — accepted to @COLM_conf #COLM2025 with strong scores and a nice shoutout from the AC! Kudos to Hanqi on leading this effort!

🚨Announcing TaCQ 🚨 a new mixed-precision quantization method that identifies critical weights to preserve. We integrate key ideas from circuit discovery, model editing, and input attribution to improve low-bit quant., w/ 96% 16-bit acc. at 3.1 avg bits (~6x compression)…

🎉 Excited to share that TaCQ (Task-Circuit Quantization), our work on knowledge-informed mixed-precision quantization, has been accepted to #COLM2025 @COLM_conf! Happy to see that TaCQ was recognized with high scores and a nice shoutout from the AC – big thanks to @EliasEskin…
