ML Safety

@ml_safety

Course: http://course.mlsafety.org Newsletter: http://newsletter.mlsafety.org Papers as they come out: https://twitter.com/topofmlsafety. More: http://mlsafety.org

Join us at the AI Safety Social at #ICML2025! We'll open with a panel on the impacts of reasoning & agency on safety with @AdtRaghunathan, @ancadianadragan, @DavidDuvenaud, & @sivareddyg. Come connect over snacks & drinks. 🗓️ Thursday, July 17, from 7-9 PM in West Ballroom A

Join us for a panel and social on ML Safety at ICML tomorrow (07/23) at 5:30 CET in Lehar 1-4! We have a great set of panelists lined up to discuss progress in ML Safety research, including Bo Li, David Krueger, and Sanmi Koyejo.

We’re having a social on ML Safety at ICLR this Thursday (5/9) with drinks and snacks! The social will be from 5:30-7:30 pm CET in room Schubert 4 at the Messe Wien Exhibition and Congress Center. Register here (so we can estimate how much food to buy)! forms.gle/zWhi6BXbdBTYhE…

A collection of some of the best safety papers of 2023 newsletter.mlsafety.org/p/ml-safety-ne…

Tomorrow at 1pm PST, Kenneth Li will present at the Center for AI Safety’s Reading and Learning event. Kenneth has recently published on identifying world models in LLM activations and improving truthfulness in LLM outputs. Here are the details: centerforaisafety.github.io/reading/

We’re having a social on ML safety at ICML this Wednesday (7/26) with food and snacks! The social will be from 5:45 PM to 7:30 PM Hawaii time in room 323 of the Hawaii Convention Center. Register here (so we can estimate how much food to buy)! docs.google.com/forms/d/e/1FAI…

Following the statement on AI extinction risks, many have called for further discussion of the challenges posed by AI and ideas on how to mitigate risk. Our new paper provides a detailed overview of catastrophic AI risks. Read it here: arxiv.org/abs/2306.12001 (🧵 below)

In the 9th edition of the ML Safety Newsletter, we cover verifying large training runs, security risks from LLM access to APIs, why natural selection may favor AIs over humans, and more! newsletter.mlsafety.org/p/ml-safety-ne…

In the 8th edition of the ML Safety Newsletter, we cover interpretability, using law to inform AI alignment, and scaling laws for proxy gaming. newsletter.mlsafety.org/p/ml-safety-ne…

In the 7th ML Safety newsletter, we discuss AI lie detectors, research on transparency and grokking, adversarial defenses for text models, and the new ML safety course. newsletter.mlsafety.org/p/ml-safety-ne…

“If you cannot measure it, you cannot improve it.” ML Safety research lacks benchmarks. We are offering up to $500,000 in prizes for ML Safety benchmark ideas (or papers). Main site: benchmarking.mlsafety.org Example ideas: benchmarking.mlsafety.org/ideas

In the sixth ML Safety newsletter, we cover a survey of transparency research, a substantial improvement to certified robustness, new examples of 'goal misgeneralization,' and what the ML community thinks about safety issues. newsletter.mlsafety.org/p/ml-safety-ne…

Can ML models spot an ethical dilemma? As ML systems make more real-world decisions, it will become more important that they have a calibrated ethical awareness. Announcing a $100,000 competition for research on detecting moral ambiguity. moraluncertainty.mlsafety.org

In this special newsletter, we cover safety competitions and prizes: ML Safety Workshop ($100K), Trojan Detection ($50K), Forecasting ($625K), Uncertainty Estimation ($100K), Inverse Scaling ($250K), AI Worldview Writing Prize ($1.5M). Details: newsletter.mlsafety.org/p/ml-safety-ne…

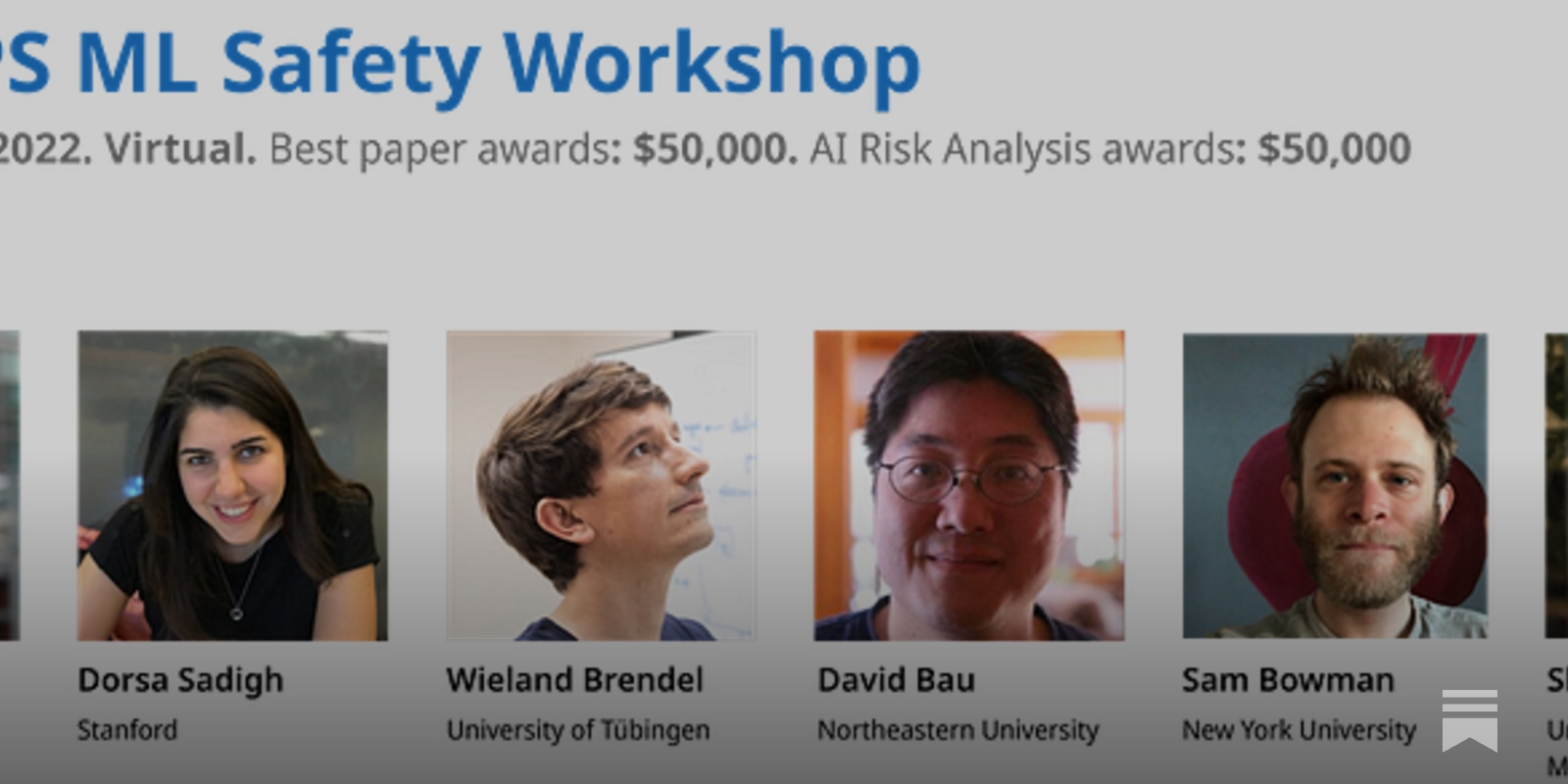

We’ll be organizing a NeurIPS workshop on Machine Learning Safety! We'll have $50K in best paper awards. To encourage proactiveness about tail risks, we'll also have $50K in awards for papers that discuss their impact on long-term, long-tail risks. neurips2022.mlsafety.org

In the fourth ML Safety newsletter, we cover many new interpretability papers, virtual logit matching, and how rationalization can help robustness. newsletter.mlsafety.org/p/ml-safety-ne…