Andrei Mircea

@mirandrom

PhD student @Mila_Quebec ⊗ mechanistic interpretability + systematic generalization + LLMs for science ⊗ http://mirandrom.github.io

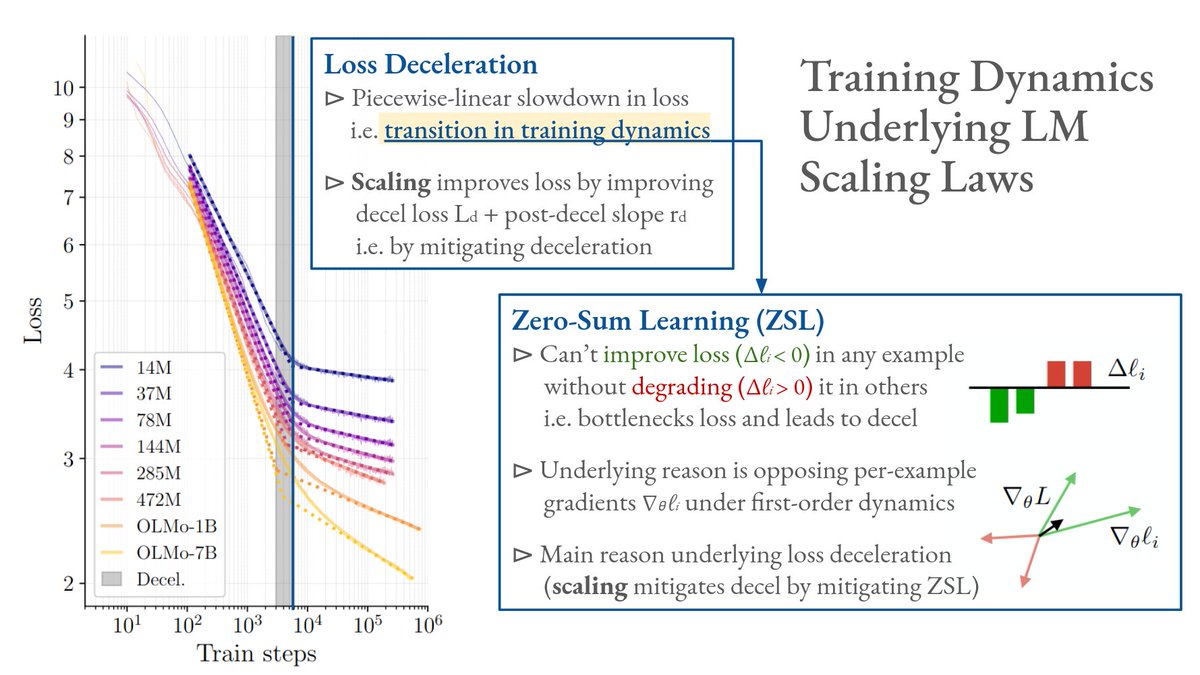

Step 1: Understand how scaling improves LLMs. Step 2: Directly target underlying mechanism. Step 3: Improve LLMs independent of scale. Profit. In our ACL 2025 paper we look at Step 1 in terms of training dynamics. Project: mirandrom.github.io/zsl Paper: arxiv.org/pdf/2506.05447

Life update: I’m excited to share that I’ll be starting as faculty at the Max Planck Institute for Software Systems (@mpi_sws_) this Fall!🎉 I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

🚨 70 million US workers are about to face their biggest workplace transition due to AI agents. But nobody asks them what they want. While AI races to automate everything, we took a different approach: auditing what workers want vs. what AI can do across the US workforce.🧵

Mechanistic understanding of systematic failures in language models is something more research should strive for IMO. This is really interesting work in that vein by @ziling_cheng, highly recommend you check it out.

Do LLMs hallucinate randomly? Not quite. Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably. 📎 Paper: arxiv.org/abs/2505.22630 1/n

Are you still using hand-designed optimizers? Tomorrow morning, I’ll explain how we can meta-train learned optimizers that generalize to large unseen tasks! Don't miss my talk at OPT-2024, Sun 15 Dec 11:15-11:30 a.m. PST, West Ballroom A! x.com/benjamintherie…

Learned optimizers can’t generalize to large unseen tasks…. Until now! Excited to present μLO: Compute-Efficient Meta-Generalization of Learned Optimizers! Don’t miss my talk about it next Sunday at the OPT2024 Neurips Workshop :) 🧵arxiv.org/abs/2406.00153 1/N

I will be presenting our work at the MATH-AI workshop at #NeurIPS2024 today. West Meeting Room 118-120 11:00 AM - 12:30 PM; 4:00 PM - 5:00 PM Come by if you want to discuss designing difficult evaluation benchmarks, follow-up work, and mathematical reasoning in LLMs!

🚨 New paper alert! 🚨 A pipeline to generate increasingly difficult math evaluations using frontier LLMs, and human intervention! TLDR: Models show 📉 in performances on the generated questions ranging from 13.4% to 92.9%, relative to MATH, which bootstrapped the generation 🤯

We'll be presenting our work at the NeurReps workshop at #NeurIPS2024 today from 4:15pm to 5:30pm in West Ballroom C. Stop by if you're interested in the geometry of representations in LLMs!

New paper! 🌟How does LM representational geometry encode compositional complexity? A: it depends on how we define compositionality! We distinguish compositionality of form vs. meaning, and show LMs encode form complexity linearly and meaning complexity nonlinearly... 1/9

Presenting two works at @NeurIPSConf workshops today, led by my amazing collaborators. Drop by if you’re curious about issues with current preference datasets, and use of model merging methods for alignment! @MIla_Quebec. Details below 🧵👇

I am presenting the two following works (in🧵) done at @Mila_Quebec on Sunday 3:00 PM at the SafeGenAI workshop @NeurIPSConf. Drop by and let’s chat! Papers:

Hi everyone, I have a new paper about how to make planning agents avoid delusional subgoals, which will be discussed tomorrow at the NeurIPS SafeGenAI workshop (3-5 PM, in East Exhibition Hall A) Please drop by! #NeurIPS2024 #NeurIPS #AIsafety

Come check out my poster with @shahrad_mz today from 3:00-5:00pm at @NeurIPSConf’s Safe Generative AI Workshop! We are presenting our work done at @Mila_Quebec on reducing LLM hallucinations through an in-training method we call “Sensitivity Dropout”. arxiv.org/abs/2410.15460