Matt Groh

@mattgroh

Assistant professor @NorthwesternU @KelloggSchool | PhD @MIT @medialab | human-AI collaboration | computational social science | cognitive science

🚨 New paper in @NatureComms 🚨 We created deepfakes of the current & former @POTUS giving speeches (w/ voices from voice actors & @elevenlabsio) to study what drives how well people can tell fake speeches from real ones. Time to update the "Seeing is Believing" narrative 👇

Time for @IC2S2 in Norrköping!! Super excited to share this year's projects in the lab revealing how (M)LLMs can offer insights into human behavior & cognition. More at …boration-lab.kellogg.northwestern.edu/ic2s2. If you're here too, say hi! #IC2S2

RLCF: Reinforcement Learning with Community Feedback. This is an awesome human-AI collaboration research agenda.

🚨🚨 Excited to share a new paper led by @Li_Haiwen_ with the @CommunityNotes team! LLMs will reshape the information ecosystem. Community Notes offers a promising model for keeping human judgment central but it's an open question how to best integrate LLMs. Thread👇

I'm excited to share that I have been appointed to the MIT faculty as an assistant professor at the MIT Media Lab, starting this September. Very excited! Official Announcement: media.mit.edu/posts/patpat-p… @medialab @FluidInterfaces @AHA_MediaLab @MIT

How does trust in LLMs differ across countries? Neat experiment using data from Prolific participants in 11 countries to measure trust in LLMs and how it relates to other measures of societal trust.

🚨New pre-print!🚨 “Understanding Trust in AI as an Information Source: Cross-Country Evidence”, joint w @m_serra_garcia! Coupling experimental data from 2900 participants & 11 countries with WVS data, we provide novel evidence of individuals' trust in LLMs as info sources.

How should we measure the value of an explanation? First, we need a goal for what the explanation should do. Then, we need to evaluate how the explanation moves a decision maker towards that goal. A must-read for thinking about human-AI collaboration.

Explainable AI has long frustrated me by lacking a clear theory of what explanations should do. Improve use of a model for what? How? Given a task, what's the max effect an explanation can have? It's complicated bc most methods are functions of features & predictions but not of the true state. 1/

The human touch matters a great deal for empathic support! Awesome new research to check out

🚨New paper Alert! So many people ask AI for emotional support, but is it like support from a human? Our new paper published in @NatureHumBehav explores whether people value #AI-generated #empathy as much as human empathy, in 9 preregistered studies with 6,282 participants.🧵

Our study led by @ChengleiSi reveals an “ideation–execution gap” 😲 Ideas from LLMs may sound novel, but when experts spend 100+ hrs executing them, they flop: 💥 👉 human‑generated ideas outperform on novelty, excitement, effectiveness & overall quality!

Are AI scientists already better than human researchers? We recruited 43 PhD students to spend 3 months executing research ideas proposed by an LLM agent vs human experts. Main finding: LLM ideas result in worse projects than human ideas.

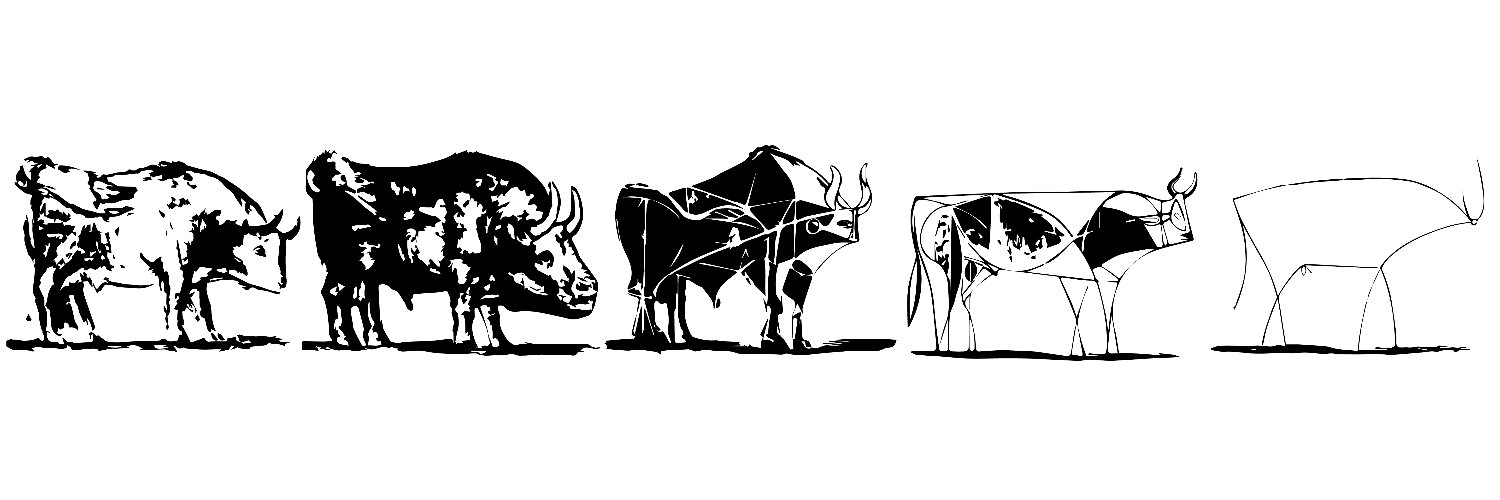

"My survival as an artist will depend on whether I'll be able to offer something that A.I. can't: drawings that are as powerful as a birthday doodle from a child." Epic visual story by @abstractsunday in NYT Magazine.

Awesome work showing the metaphors the general public uses to describe AI. It's also interesting to juxtapose these with the popular metaphors described by Melanie Mitchell: science.org/doi/10.1126/sc…

How does the public conceptualize AI? Rather than self-reported measures, we use metaphors to understand the nuance and complexity of people’s mental models. In our #FAccT2025 paper, we analyzed 12,000 metaphors collected over 12 months to track shifts in public perceptions.

When are LLMs-as-judge reliable? That's a big question for frontier labs and it's a big question for computational social science. Excited to share our findings (led by @aakriti1kumar!) on how to address this question for any subjective task & specifically for empathic comms

How do we reliably judge if AI companions are performing well on subjective, context-dependent, and deeply human tasks? 🤖 Excited to share the first paper from my postdoc (!!) investigating when LLMs are reliable judges - with empathic communication as a case study 🧐 🧵👇

Awesome tutorial!

Curious how to build agents that can control a browser? I just wrote up a full tutorial on how to do it completely from scratch and with Magentic-UI. My goal is to demystify browser-use and CUA agents, it's fun to follow along! Link: husseinmozannar.github.io/#/blog/web_age… Jupyter notebook:…

Veo3 represents a paradigm shift in AI capabilities for realistic media that tells provocative, fabricated stories. This video + thread offer a quick tutorial on its capabilities and limitations (like malformed text & inconsistent characters), which are easy to creatively bypass.

I decided to use my lunch time to show you how easy it is to make a fake news story in 30 minutes with Veo3 (I didn't try to perfect it). First: the footage. A mayor comes up with a crazy idea and people hate it: (1/10) #verification #ai

Looking at Van Gogh’s Starry Night, we see not only its content (a French village beneath a night sky) but also its *style*. How does that work? How do we see style? In @NatureHumBehav, @chazfirestone & I take an experimental approach to style perception! osf.io/preprints/psya…

This Wednesday, NICO is thrilled to once again host Lightning Talks! This term we are lucky to have three amazing researchers from NICO, Kellogg, and McCormick Engineering. Join us in Chambers Hall or online via Zoom. 🗓️ Wed 5/14 at 12pm US Central 🔗 bit.ly/WedatNICO

Awesome write up in Kellogg Insight on our paper published at #CHI2025 this week! insight.kellogg.northwestern.edu/article/are-we…

I’m at #chi2025 and today I’m presenting our paper on characterizing photorealism and artifacts in diffusion model-generated images (@ 2:10 PM, G318-319) Paper: dl.acm.org/doi/pdf/10.114… With @frogspitsimulat ,@aakriti1kumar, @chatzimparmpas, @mattgroh and @JessicaHullman

Decision studies appear in HCI, vis, & AI/ML, but how a "good decision" is defined is often ad hoc. My #CHI2025 talk today will answer Qs like: What's a decision problem? What's the best possible performance on a decision problem? What minimum info do participants need? 1/2