Marta Skreta

@martoskreto

currently interning @MSRNE | @UofTCompSci PhD Student in @A_Aspuru_Guzik's #matterlab and @VectorInst | prev. @Apple

🧵(1/6) Delighted to share our @icmlconf 2025 spotlight paper: the Feynman-Kac Correctors (FKCs) in Diffusion. Picture this: it’s inference time and we want to generate new samples from our diffusion model. But we don’t want to just copy the training data – we may want to sample…

1/ Where do Probabilistic Models, Sampling, Deep Learning, and Natural Sciences meet? 🤔 The workshop we’re organizing at #NeurIPS2025! 📢 FPI@NeurIPS 2025: Frontiers in Probabilistic Inference – Learning meets Sampling Learn more and submit → fpiworkshop.org…

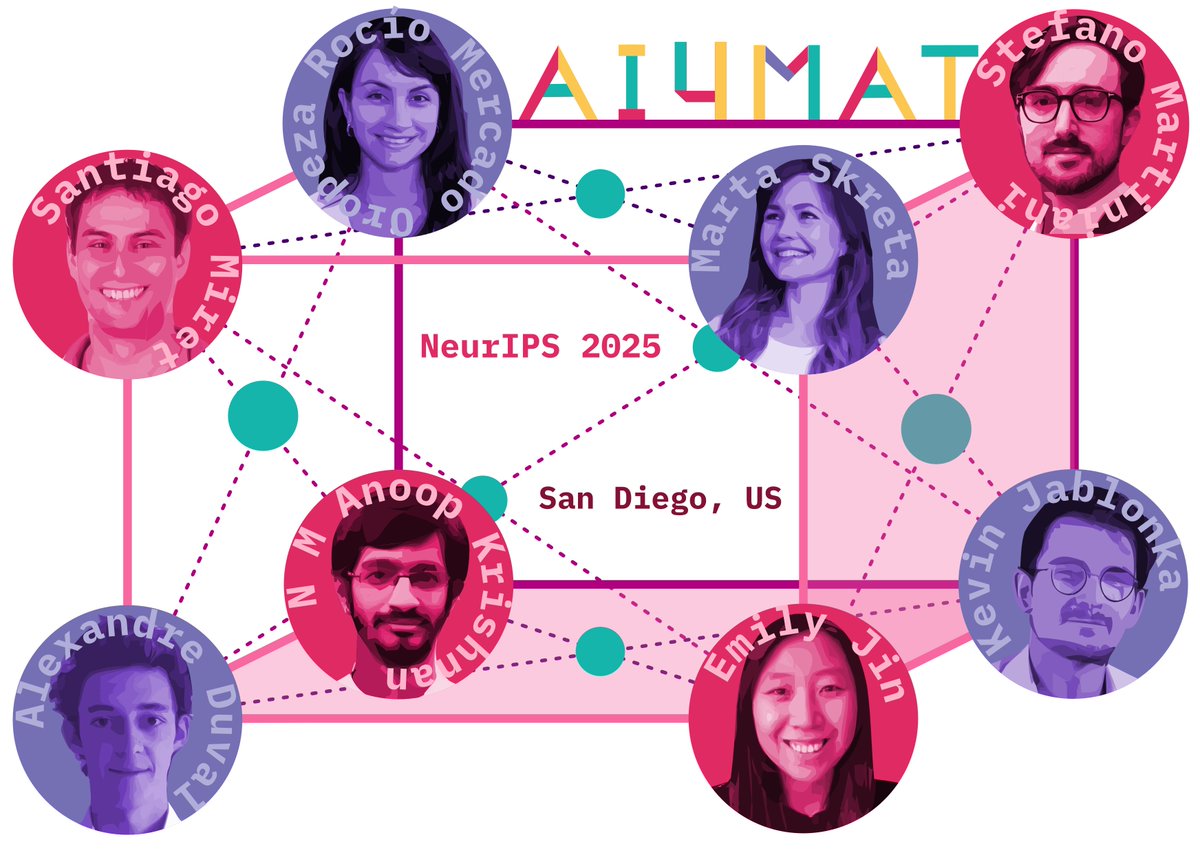

AI4Mat is back for NeurIPS! time to crystallize those ideas and make a solid-state submission by august 22, 2025 💪 new this year: opt in your work for our Research Learning from Speaker Feedback program -- a new structured discussion format where spotlight presenters receive…

🚀 GenMol is now open‑sourced: you can now train and finetune on your data! It uses masked diffusion + a fragment library to craft valid SAFE molecules, from de novo design to lead optimization. #GenMol #DrugDiscovery #Biopharma

Check out SynCoGen, our new co-generation model for synthesizable small molecules! We define building-block and reaction-level graphs, which we learn via masked graph diffusion, and couple this with flow matching to learn atomic coordinates in 3D space. 🧵

📢We are excited to share SynCoGen, the first generative model that co-generates 🔷building-block graphs, 🔷reaction edges, and 🔷full 3-D coordinates, so every molecule comes with both a synthesis plan and a physically plausible shape.

When designing AI scientist benchmarks, the challenge lies in simulating realistic experimental data. We find that systems biology models provide a great simulator for this! I am currently at ICML and would be very excited to chat with anyone interested in this work!

What makes a great scientist? Most AI scientist benchmarks miss the key skill: designing and analyzing experiments. 🧪 We're introducing SciGym: the first simulated lab environment to benchmark #LLM on experimental design and analysis capabilities. #AI4SCIENCE #ICML25

Come check out SBG happening now! W-115 11-1:30 with @charliebtan @bose_joey Chen Lin @leonklein26 @mmbronstein

we’re not kfc but come watch us cook with our feynman-kac correctors, 4:30 pm today (july 16) at @icmlconf poster session — east exhibition hall #3109 @k_neklyudov @AlexanderTong7 @tara_aksa @OhanesianViktor

let’s gooooo

Really excited to share that we’re in the @ycombinator summer batch! I’m even more excited to be teaming up with @phil_fradkin and @ianshi3 on this next chapter. At @blankbio_, we're building the next generation of foundation models for RNA.

👋 I'm at #ICML2025 this week, presenting several papers throughout the week with my awesome collaborators! Please do reach out if you'd like to grab a coffee ☕️ or catch up again! Papers in 🧵below 👇:

Our team spent a massive amount of time putting together a review of what we call "general purpose models" for the chemical sciences. We explain the fundamentals and go through applications and broader implications. arxiv.org/abs/2507.07456

🚨Do you want to learn more about general purpose models for chemistry?🚨 Look no further! 👀👀 Our comprehensive review of how these models are currently used is now live on arXiv! arxiv.org/pdf/2507.07456

🎉Personal update: I'm thrilled to announce that I'm joining Imperial College London @imperialcollege as an Assistant Professor of Computing @ICComputing starting January 2026. My future lab and I will continue to work on building better Generative Models 🤖, the hardest…

(1/n) Sampling from the Boltzmann density better than Molecular Dynamics (MD)? It is possible with PITA 🫓: Progressive Inference Time Annealing! A spotlight at the @genbio_workshop of @icmlconf 2025! PITA learns from "hot," easy-to-explore molecular states 🔥 and then cleverly "cools"…

✨Cradle is hiring protein+ML researchers!✨ We operate ML for lab-in-the-loop lead optimization across all industries (pharma, synbio, ...), modalities (antibodies, enzymes, ...), properties (binding, activity, ...) We're a scaleup and already relied upon by 4 of the top 20…

Why do we keep sampling from the same distribution the model was trained on? We rethink this old paradigm by introducing Feynman-Kac Correctors (FKCs) – a flexible framework for controlling the distribution of samples at inference time in diffusion models! Without re-training…

Check out FKCs! A principled, flexible approach for diffusion sampling. I was surprised by how well it scaled to high dimensions given its reliance on importance reweighting. Thanks to great collaborators @Mila_Quebec @VectorInst @imperialcollege and @GoogleDeepMind. Thread👇🧵

Given q_t, r_t as diffusion model(s), an SDE w/ drift β ∇ log q_t + α ∇ log r_t doesn’t sample the sequence of geometric avg/product/tempered marginals! To correct this, we derive an SMC scheme via a PDE perspective. Resampling weights are ‘free’: they depend only on the (exact) scores!
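To make the "resampling" part of this SMC correction concrete, here is a minimal sketch of a generic importance-resampling step toward a product target q^β r^α. It is an illustration under stated assumptions, not the FKC algorithm: the two "models" are hypothetical 1-D Gaussians N(-1, 1) and N(2, 1) standing in for q_t and r_t at a single time, the particles come from a broad Gaussian proposal instead of an SDE, and the weights are plain density ratios rather than the score-based FKC weights described in the tweet.

```python
import math
import random


def log_normal_pdf(x, mean, std):
    """Log-density of a 1-D Gaussian N(mean, std^2)."""
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2 * math.pi))


def resample(particles, log_weights, rng):
    """Multinomial resampling from self-normalized importance weights.

    Returns the resampled particles and the effective sample size (ESS).
    """
    m = max(log_weights)  # subtract the max for a numerically stable normalization
    w = [math.exp(lw - m) for lw in log_weights]
    total = sum(w)
    probs = [wi / total for wi in w]
    ess = 1.0 / sum(p * p for p in probs)
    return rng.choices(particles, weights=probs, k=len(particles)), ess


def product_target_logpdf(x, beta=1.0, alpha=1.0):
    # Hypothetical example: unnormalized log of q(x)^beta * r(x)^alpha
    # with q = N(-1, 1) and r = N(2, 1) (stand-ins, not trained diffusion models).
    return beta * log_normal_pdf(x, -1.0, 1.0) + alpha * log_normal_pdf(x, 2.0, 1.0)


rng = random.Random(0)
# Proposal: broad Gaussian N(0, 3^2), standing in for samples from an SDE.
particles = [rng.gauss(0.0, 3.0) for _ in range(5000)]
# Importance log-weights: log target - log proposal.
log_w = [product_target_logpdf(x) - log_normal_pdf(x, 0.0, 3.0) for x in particles]
resampled, ess = resample(particles, log_w, rng)

mean = sum(resampled) / len(resampled)
var = sum((x - mean) ** 2 for x in resampled) / len(resampled)
```

For β = α = 1 the product of these two Gaussians is proportional to N(0.5, 0.5), so `mean` should land near 0.5 and `var` near 0.5. In an actual SMC sampler, a step like this would be interleaved with the SDE updates, with weights accumulated along the trajectory.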