Martina Vilas

@martinagvilas

CS PhD Student working on AI interpretability. Intern at @MSFTResearch AI Frontiers.

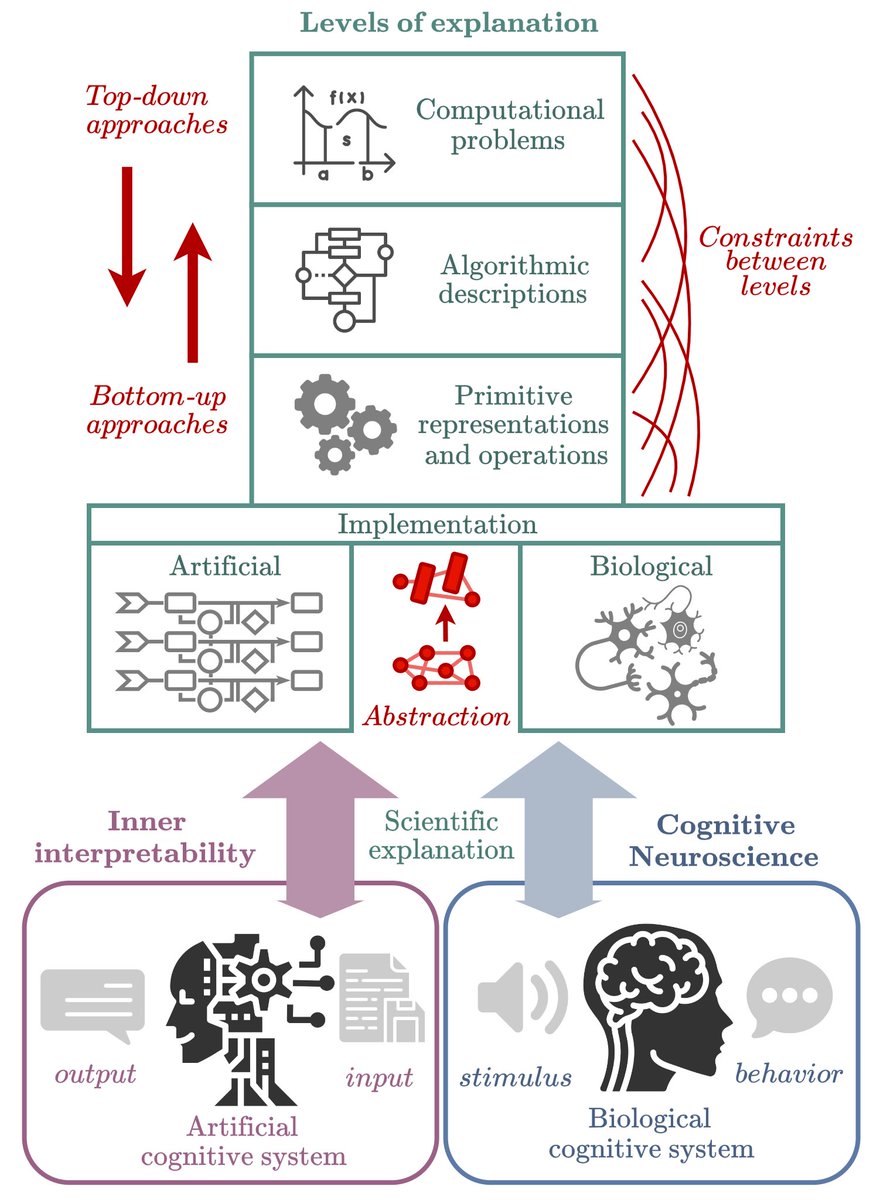

New position paper accepted at #ICML2024! 💻🧠 "An Inner Interpretability Framework for AI Inspired by Lessons from Cognitive Neuroscience" We show how many of the problems/discussions in the AI Inner Interpretability field are similar to those in Cognitive Neuroscience (1/2)

My team at Microsoft Research, working in multimodal AI, is hiring! Please apply if you are interested in working at the cutting edge of multimodal generative AI. jobs.careers.microsoft.com/global/en/job/…

Excited to have joined @MSFTResearch in Redmond as a PhD intern with the AI Frontiers Evaluation and Understanding team! This summer I'll be working on the interpretability of AI reasoning models, exploring how we can better understand, assess and control their behavior 💻🧠

I'm very excited that this work was accepted for an oral presentation @naacl! Come by at 10:45 on Thursday to hear how we can use mechanistic interpretability to better understand how LLMs incorporate context when answering questions.

The ability to properly contextualize is a core competency of LLMs, yet even the best models sometimes struggle. In a new preprint, we use #MechanisticInterpretability techniques to propose an explanation for contextualization errors: the LLM Race Conditions Hypothesis. [1/9]

We will be presenting this 💫 spotlight 💫 paper at #ICLR2025. Come say hi or DM me if you're interested in discussing AI #interpretability in Singapore! 📆 Poster Session 4 (#530) 🕰️ Fri 25 Apr. 3:00-5:30 PM 📝 openreview.net/forum?id=QogcG… 📊 iclr.cc/virtual/2025/p…

December 5th, join @mathildepapillo and our ML Theory group as they dive into "Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structures." 🤔

Happening now! Visit @TiezziMatteo and me at the @eccvconf #hcv workshop if you are interested in visual #attention models and human-inspired #XAI @FAU_MaD_Lab @UniFAU @expertdotai @TU_Muenchen @goetheuni @IITalk @PAVIS_IIT @HelmholtzMunich @BjoernEskofier @gemmarono

Less than three days to go for the eXCV Workshop at #ECCV2024! Join us on Sunday from 14:00-18:00 in Brown 1 to hear about the state of XAI research from an exciting lineup of speakers! @orussakovsky, @vidal_rene, @sunniesuhyoung, @YGandelsman, @zeynepakata @eccvconf (1/4)

Super excited to start this mini cohort on geometric deep learning!!! The question "why use convolutions instead of something else?" is actually one I wondered about myself, and it eventually led me to learn about GDL.

Have you ever wondered, "why use convolutions instead of something else?" 🤔 Starting next week, join @aniervs and @martinagvilas with our Open Science Community’s ML Theory group for a 9-week cohort on Geometric Deep Learning!

✨Be sure to join us tomorrow! This is going to be really fun :) Our speakers will be pitching ideas from a wide range of ML sub-fields: ML theory, NLP interpretability, ML fairness and ML + Physical Sciences ✨

Tomorrow at 9am PST/12pm EST, join @martinagvilas @SurbhiGoel_ @AbdelZayed1 and Shruti Mishra with our community-led Research Connections group, where experienced researchers will pitch research ideas and invite collaboration.

Applications for @CohereForAI scholars program close tomorrow. Something special about the program is our commitment that a research scientist or engineer will read every application.

On August 24th, @martinagvilas will be giving an exciting presentation with our community-led NLP group on "Probing the representations and capacities of Vision-Language Models." Be sure to check it out! 🤩 Learn more: cohere.com/events/cohere-…

I’ll be presenting this work next week at #ICML👇 Come by or send a DM if you are interested in discussing these topics in Vienna! 📌 Hall C 4-9 #3004 🕜 Tuesday, July 23rd, 11:30 a.m. to 1:00 p.m. CEST

New position paper accepted at #ICML2024! 💻🧠 "An Inner Interpretability Framework for AI Inspired by Lessons from Cognitive Neuroscience" We show how many of the problems/discussions in the AI Inner Interpretability field are similar to those in Cognitive Neuroscience (1/2)

This Thursday, check out the presentation on "LLM Processes: Numerical Predictive Distributions Conditioned on Natural Language" with James Requeima! Learn more: cohere.com/events/cohere-… Thank you @aniervs & @martinagvilas for organizing this talk! 👏

Our community-led ML Theory Group is looking forward to hosting @PetarV_93, Research Scientist at @GoogleDeepMind, next week on Thursday, April 25th for a presentation on "Categorical Deep Learning. An Algebraic Theory of Architectures." Learn more: cohere.com/events/c4ai-Pe…

Every time I get *yet another* rejection of my work analyzing existing models/datasets (because it "lacks novelty"), I worry that our obsession with novelty in ML will make us repeat the same mistakes, without ever understanding why.

🚀 Exciting News! 📚 Unveiling our latest paper: "Concept-based Explainable Artificial Intelligence: A Survey" Delve into the world of #Concept-based #XAI with our comprehensive review of concept-based approaches! 🌐 @CiraGabriele @eliana__pastor arxiv.org/abs/2312.12936