marcel ⊙

@marceldotsci

Trying to build better machine-learning surrogate models for DFT. Postdoc @lab_cosmo 👨🏻‍🚀.

Our (@spozdn & @MicheleCeriotti) recent work on testing the impacts of using approximate, rather than exact, rotational invariance in a machine-learning interatomic potential of water has been published by @MLSTjournal! We find that, for bulk water, approximate invariance is ok.

Great new work by @marceldotsci @spozdn @MicheleCeriotti @lab_COSMO @nccr_marvel @EPFL_en - 'Probing the effects of broken symmetries in #machinelearning' - iopscience.iop.org/article/10.108… #compchem #materials #statphys #molecules #compphys #simulation #atomistic

happy to report that our paper is accepted for an oral presentation at ICML. amazing work by Filippo, who will present it in vancouver! final version, with some extra content, here: openreview.net/forum?id=OEl3L…

new work! we follow up on the topic of testing which physical priors matter in practice. this time, it seems that predicting non-conservative forces, which gives a 2x-3x speedup, leads to serious problems in simulation. we run some tests and discuss mitigations!

👀

🚀 After two+ years of intense research, we’re thrilled to introduce Skala — a scalable deep learning density functional that hits chemical accuracy on atomization energies and matches hybrid-level accuracy on main group chemistry — all at the cost of semi-local DFT. ⚛️🔥🧪🧬

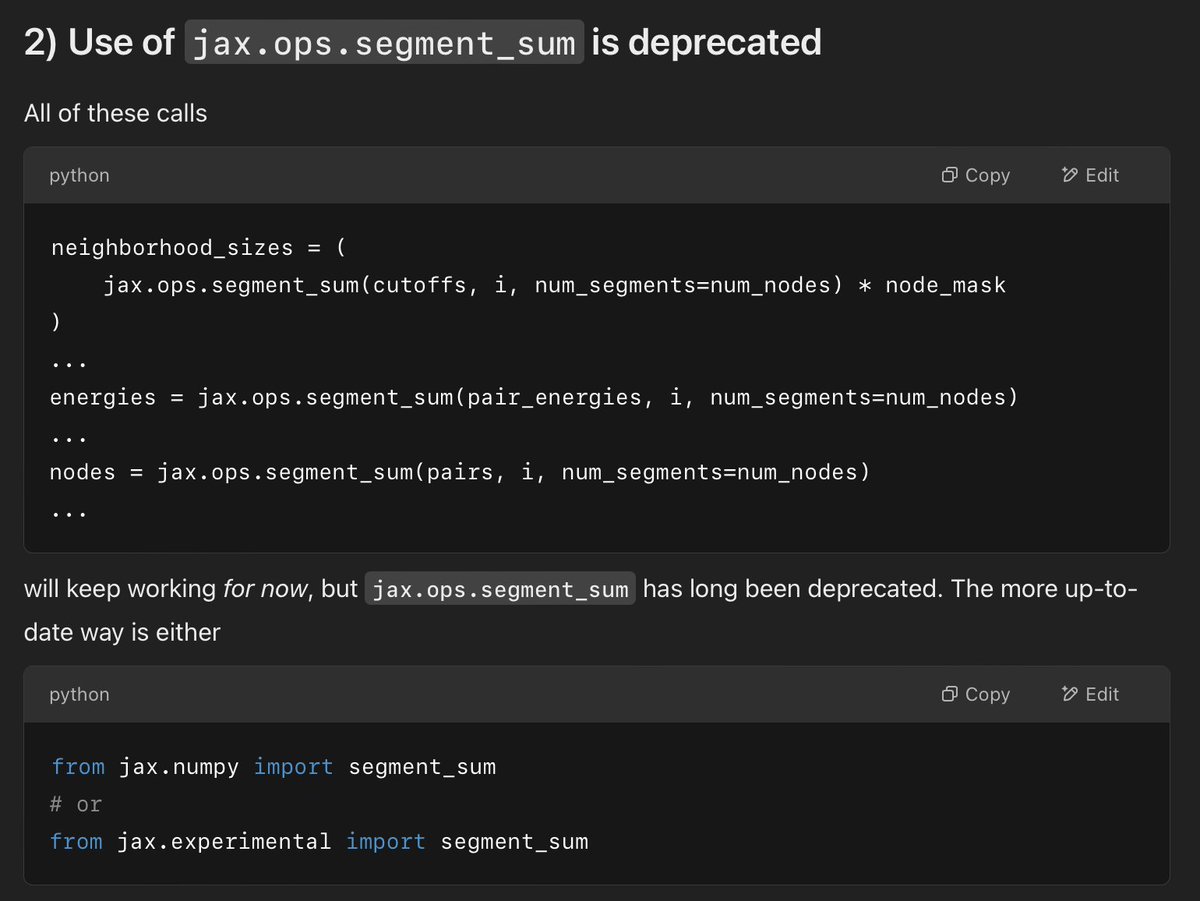

obvious in hindsight but news to me: accessing np.load-ed arrays by index is orders of magnitude slower than forcing them to RAM. notes.marcel.science/2025/numpy-loa…
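a rough sketch of one way this can happen, not taken from the linked note: the post doesn't say which file format was involved, so this assumes an `.npz` archive. there, `np.load` returns a lazy `NpzFile`, and every `archive[key]` lookup reads the array from disk again. the file name, key, and helper functions below are made up for illustration.

```python
import os
import tempfile

import numpy as np


def sum_lazy(path, key, n):
    """Look the array up in the archive on every iteration (re-read each time)."""
    with np.load(path) as archive:
        return sum(float(archive[key][i]) for i in range(n))


def sum_in_ram(path, key, n):
    """Read the array into memory once, then index the in-memory copy."""
    with np.load(path) as archive:
        values = archive[key]  # single read from disk
    return sum(float(values[i]) for i in range(n))


with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical demo file; any .npz archive behaves the same way.
    path = os.path.join(tmp, "demo.npz")
    np.savez(path, values=np.arange(1000, dtype=np.float64))

    with np.load(path) as archive:
        # Two lookups of the same key give two distinct array objects,
        # which shows that the archive does not cache what it has read.
        reloaded_each_time = archive["values"] is not archive["values"]

    lazy_total = sum_lazy(path, "values", 100)
    ram_total = sum_in_ram(path, "values", 100)
```

both helpers return the same sum; the difference is only in how often the file is read, so timing them on a large array (e.g. with `timeit`) is the way to see the gap on your own data.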

ok maybe i just decline further requests to provide reviews then

this is rather sick

I am truly excited to share our latest work with @MScherbela, @GrohsPhilipp, and @guennemann on "Accurate Ab-initio Neural-network Solutions to Large-Scale Electronic Structure Problems"! arxiv.org/abs/2504.06087

👀

Excited to announce Orb-v3, a new family of universal Neural Network Potentials from me and my team at @OrbMaterials, led by Ben Rhodes and @sanderhaute! These new potentials span the Pareto frontier of models for computational chemistry.

👀

Guess what? By learning from energies and forces, machine learning interatomic potentials can now infer electrical responses like polarization and BECs! This means we can perform MLIP MD simulations under electric fields! arxiv.org/pdf/2504.05169

🤫 you can get a better universal #machinelearning potential by training on fewer than 100k structures. too good to be true? head to arxiv:2503.14118, to the atomistic cookbook, or to the better science social if you want to find out more about PET-MAD. 🧑‍🚀 over and out 👋

team @lab_COSMO has joined the universal FF arena: arxiv.org/abs/2503.14118 !

Chatty G seems very uninformed about JAX. Or it knows something I don't...

👀

1/5: Our new materials foundation model, HIENet, combines invariant and equivariant message passing layers to achieve SOTA performance and efficiency on materials benchmarks and downstream tasks. arxiv.org/abs/2503.05771

can stefan be stopped? it’s unclear. congrats!

We have a new paper on diffusion!📄 Faster diffusion models with total variance/signal-to-noise ratio disentanglement! ⚡️ Our new work shows how to generate stable molecules in sometimes as few as 8 steps and match EDM’s image quality with a uniform time grid. 🧵

👀

1/ Machine learning force fields are hot right now 🔥: models are getting bigger + being trained on more data. But how do we balance size, speed, and specificity? We introduce a method for doing model distillation on large-scale MLFFs into fast, specialized MLFFs!

👀

We just shared our latest work with @CecClementi and @FrankNoeBerlin! arxiv.org/abs/2502.13797 RANGE addresses the "short-sightedness" of MPNNs via virtual aggregations, boosting the accuracy of MLFFs at long-range with linear time-scaling. Next-gen force-fields are here! 🧠🚀