Manifest AI

@manifest__ai

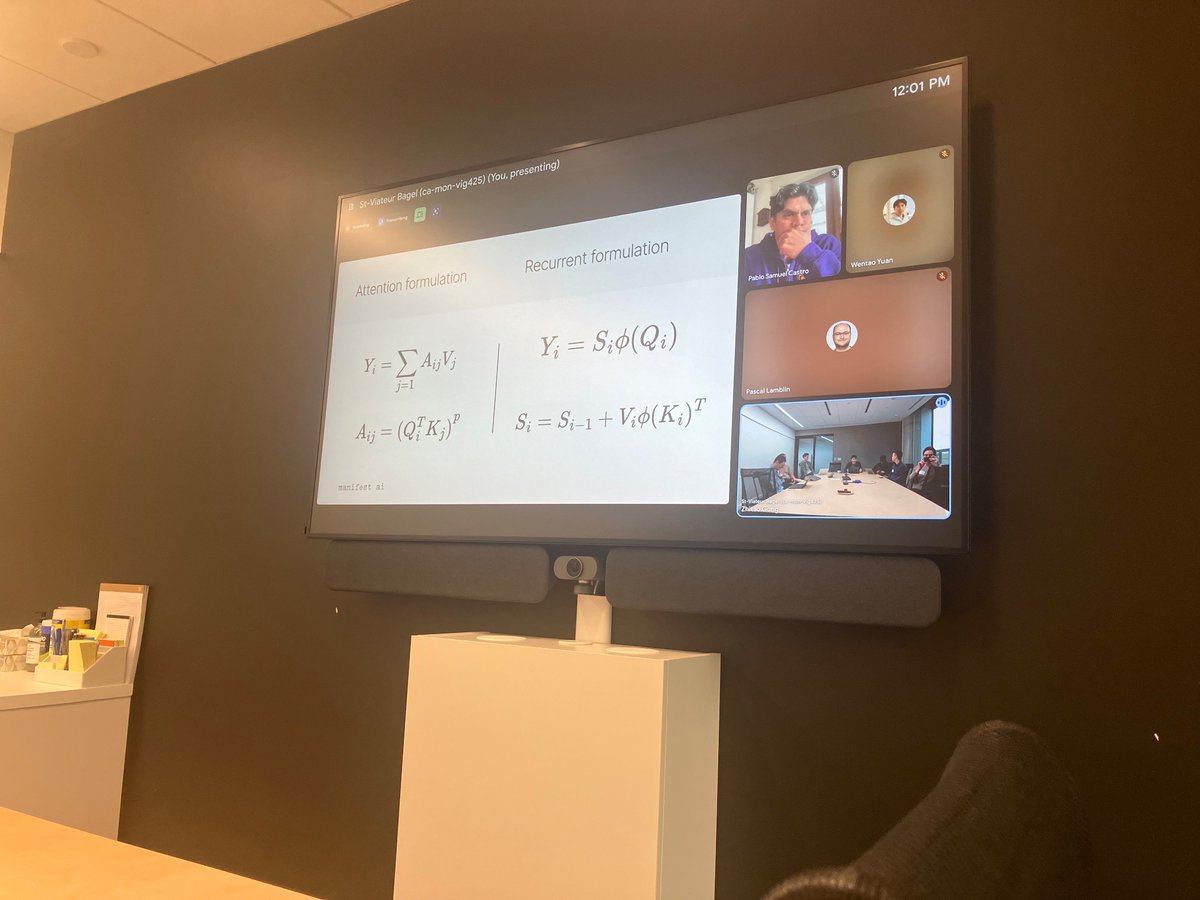

Had a great time talking Power Attention with the amazing folks at @GoogleDeepMind Montreal. Thanks @pcastr, Adrien, and Zhitao for hosting us!

Why gradient descent minimizes training loss: manifestai.com/articles/gd-mi…

Symmetric power transformers: manifestai.com/articles/symme…

In our latest article, we describe our methodology for research on extending context length. It’s not enough to train an LLM with a large context size. We must train LLMs with a large *compute-optimal* context size. manifestai.com/articles/compu…

In the 32nd session of #MultimodalWeekly, we will feature two speakers working with Transformers architecture research and LLMOps for generative AI applications.

Anyone who has trained a Transformer has viscerally felt its O(T^2) cost. It is not tractable to train Transformers end-to-end on long contexts. Here's a writeup of the research direction I believe is most likely to solve this: linear transformers. manifestai.com/blogposts/fast… 1/7

Sharing our work on how to efficiently implement linear transformers. We trained a GPT2 model with linear attention and observed a 32x speedup over FlashAttention on a 500k-token context. manifestai.com/blogposts/fast…