lowvram

@lowvram

too dumb for research, too smart for langchain. senior dev @ nameless megacorp, I wrap llms for a living

the reason there are a million inference libraries, mcp aggregators, chat completions UIs, etc, is that they’re all opinionated. And everyone’s opinions are wrong (except for mine)

You just know someone is trying to train a super-persuader with an RL objective on a dataset from r/changemyview, bc OP basically replies with “pass” or “fail”. Good thing it’s Reddit so the data is garbage

looking into realtime speech-to-text for cheap edge devices (raspberry pi etc), I really thought there would be an easy solution. but instead there’s lots of solutions that *almost* work but don’t quite cut it. everything wants cuda

using a rented remote gpu: “this gpu is but an abstraction, a concept; it does not exist in the physical plane” Using my own 3090: “omg ru ok i heard ur coil whine a bit louder, its kinda hot today let’s save inference for tomorrow”

*me on adderall about to send my manager a 1000 word wall of text about a random idea I had* yes, this makes sense, this is a good idea

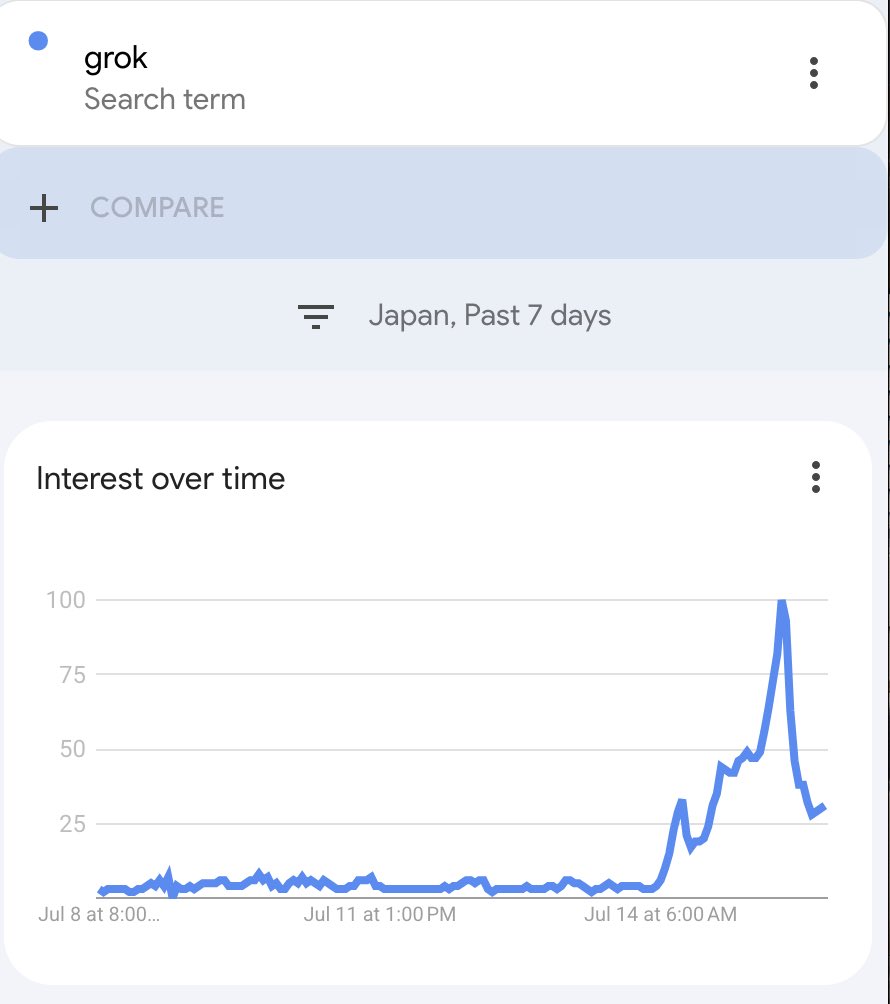

Hey @elonmusk, here’s an idea if u truly care about transparency. Let me see the full raw input/output of grok’s requests, post-templating. For every grok message I should be able to click a link and see the full trace that went into generating it.

Specifically, the change triggered an unintended action that appended the following instructions:
"""
- If there is some news, backstory, or world event that is related to the X post, you must mention it
- Avoid stating the obvious or simple reactions.
- You are maximally based…

“maximally based”

I would never degrade myself by offloading to cpu/dram, I could never sink so low

the human brain was oneshot by listicle slop and YouTube shorts, all media converging on the highest density dopamine. llms reflect that bc we fit them to our preferences. what will it look like when we start fitting media to *their* preferences??

I often rant about how 99% of attention is about to be LLM attention instead of human attention. What does a research paper look like for an LLM instead of a human? It’s definitely not a pdf. There is a huge space for an extremely valuable “research app” that figures this out.

(just musing here) I wonder if reasoning models handle quantization better, since any loss in precision can be offset (at least somewhat) by the reasoning process steering the output toward a conclusion