Joel Becker

@joel_bkr

move fast and fix things @METR_evals. 'soccer'-me @MessiSeconds.

it’s out! we find that, against the forecasts of top experts, the forecasts of study participants, _and the retrodictions of study participants_, early-2025 frontier AI tools slowed ultra-talented + experienced open-source developers down. x.com/METR_Evals/sta…

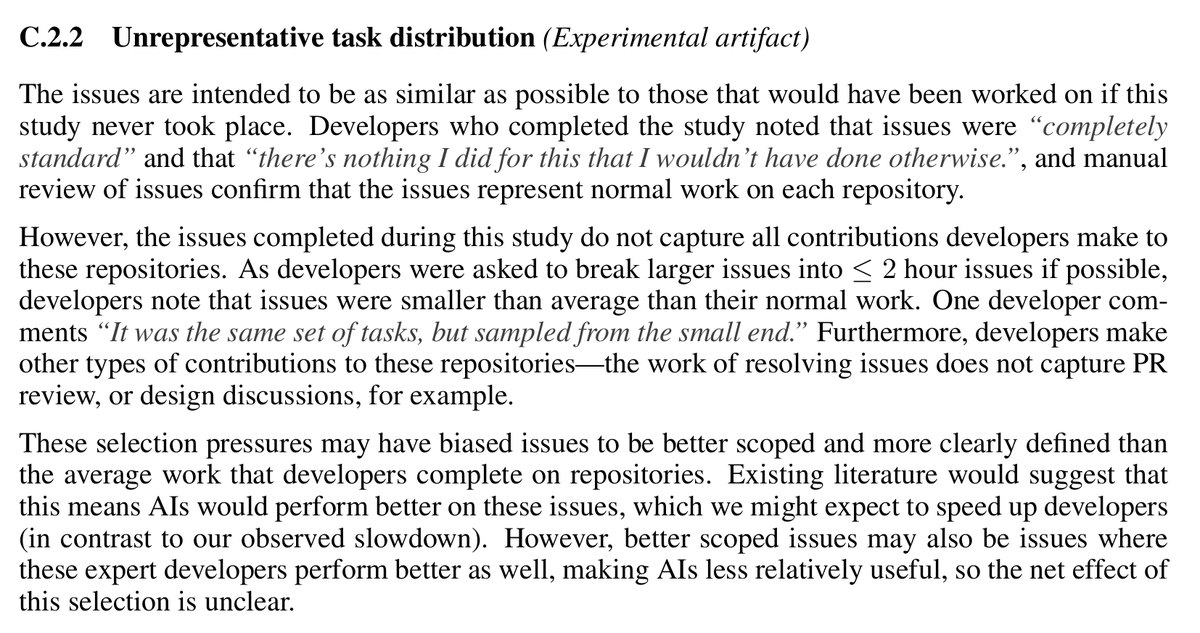

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

fantastic thread from one of the devs in our study! lots of interesting observations; recommended.

I was one of the 16 devs in this study. I wanted to speak on my opinions about the causes and mitigation strategies for dev slowdown. I'll say as a "why listen to you?" hook that I experienced a -38% AI-speedup on my assigned issues. I think transparency helps the community.

tell your OpenAI friends to come to our talk 1p today! x.com/BarrAlexandra/…

It's so cool researchers give cross-lab talks. Two papers recently on my reading list: 1. Anthropic's Alignment Faking in LLMs 2. METR's Cursor/Productivity paper. Both teams are giving talks at OpenAI this month; it feels unreal to have access to all this world-class research.

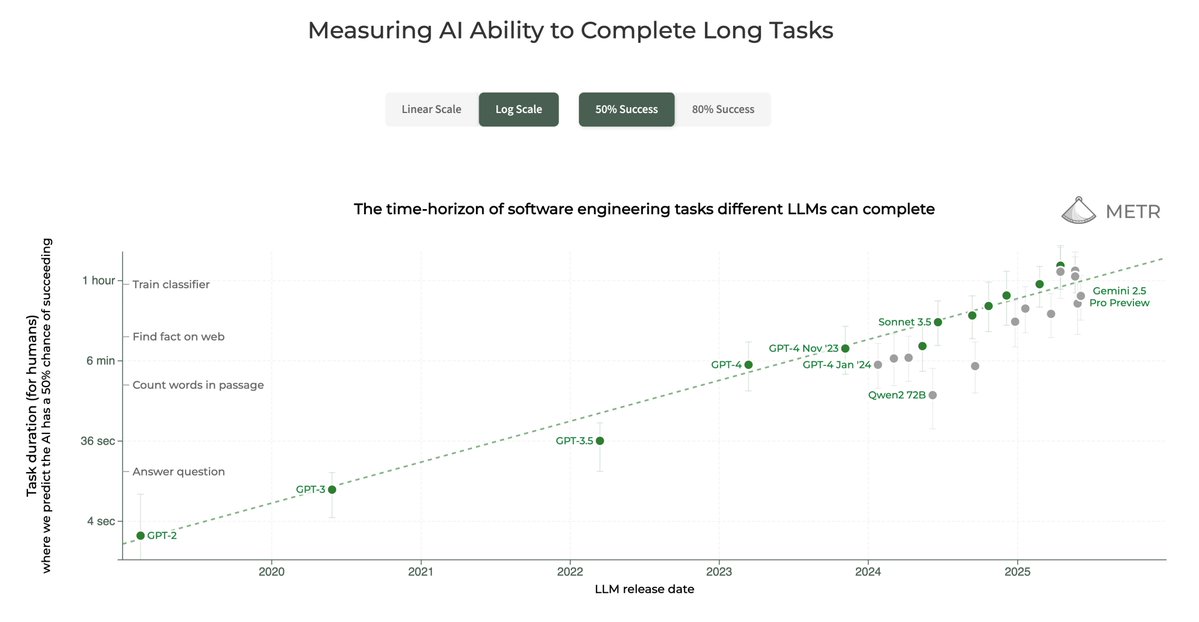

i wonder if time horizon trends will later be seen as showing "progress only on that which we can measure"/missing important axes that are hard to measure. (by default i think no: i expect similar progress on hard-to-measure axes, from a lower intercept.) x.com/METR_Evals/sta…

METR previously estimated that the time horizon of AI agents on software tasks is doubling every 7 months. We have now analyzed 9 other benchmarks for scientific reasoning, math, robotics, computer use, and self-driving; we observe generally similar rates of improvement.

But, plot twist: The much-discussed contraction in entry-level tech hiring appears to have *reversed* in recent months. In fact, relative to the pre-generative AI era, recent grads have secured coding jobs at the same rate as they’ve found any job, if not slightly higher.

another extremely interesting/informative post from one of the developers in our study! @burckhap thank you very much for participating + writing up your experience. strong recommend. blog.stdlib.io/reflection-on-… x.com/burckhap/statu…

On the @stdlibjs blog, we just published my take on @METR_Evals's surprising study: AI tools made experienced developers 19% slower (expectation: 40% faster!)🤯 I dive into the why, where AI coding tools actually help, and how I've shifted from handholding AI to async delegation.

this microsoft paper is an RCT testing github copilot access on 200 professional software engineers. the abstract focuses on how developers feel about using AI tooling in their work. on page 7 they show no detectable effect on productivity measures. arxiv.org/abs/2410.18334

Insurance is an underrated way to unlock secure AI progress. Insurers are incentivized to truthfully quantify and track risks: if they overstate risks, they get outcompeted; if they understate risks, their payouts bankrupt them. 1/9

this @domenic reflection on participation in our study is so good. super informative qualitative detail. (including on the dev tooling experience questions currently being discussed.) strong recommend. x.com/domenic/status…

I've done a full writeup of my experience participating in the @METR_Evals study on AI productivity: domenic.me/metr-ai-produc….

"AI slows down open source developers. Peter Naur can teach us why." this blog post about our paper is currently top of hacker news. thinking about slowdown result through lens of "programming as theory building." strongly recommended! news.ycombinator.com/item?id=445607…

the @METR_Evals interactive task length chart now features off-frontier models incl. Qwen 2.5, DeepSeek R1, DeepSeek V3, Claude 4 Opus, Gemini 2.5 Pro Preview metr.org/blog/2025-03-1…

(so excited for devs in our study to post their thoughts! we're extremely grateful to them for participating; learned so much from qualitative comments throughout. thank you @ruben_bloom!) touching on a couple of points: 3) re: "open-source developer" connotations, i'll note…

I was happy to help participate in this study, with the jsdom project as the codebase. You can see the issues, PRs, and experience reports from my work at github.com/search?q=label…. I found it a really interesting experience, and will probably do a bigger writeup later!

hahahahahahahaha x.com/ChrisPainterYu…

The last thing snide twitter commenters see before they're absolutely buffeted by earnest, good-faith engagement directly from one of the paper's authors

Join us for a fireside chat about the new METR paper! (+ dinner & drinks) @snewmanpv will chat with @joel_bkr and Nate Rush about their methodology, whether the takeaway generalizes, how to square this result with other AI evals and forecasts, and more. 6pm next Wed, 7/16, SF

We talked to @joel_bkr (Technical Staff, @METR_evals) about AI's impact on development. "AIs are becoming increasingly capable, and we worry about the self-recursion of AI R&D." "We want to be providing the highest quality evidence that we can that AI R&D might be accelerated."…