InternLM

@intern_lm

InternLM has open-sourced a 7 billion parameter base model and a chat model tailored for practical scenarios. Discord: http://discord.gg/xa29JuW87d

Try our new multimodal reasoning model #InternS1😋 Model: huggingface.co/internlm/Inter… GitHub: github.com/InternLM/Inter… Chat here: chat.intern-ai.org.cn

🚀Introducing Intern-S1, our most advanced open-source multimodal reasoning model yet! 🥳Strong general-task capabilities + SOTA performance on scientific tasks, rivaling leading closed-source commercial models. 🥰Built upon a 235B MoE language model and a 6B Vision encoder.…

🚀 Introducing #POLAR: Bring Reward Model into a New Pre-training Era! ✨ Say goodbye to reward models with poor generalization! POLAR (Policy Discriminative Learning) is a groundbreaking pre-training paradigm that trains reward models to distinguish policy distributions,…

🥳Trained through #InternBootcamp, #InternThinker now combines pro-level Go skills with transparent reasoning. 😉In each game, it acts as a patient, insightful coach—analyzing the board, comparing moves, and clearly explaining each decision. 🤗Try it now: chat.intern-ai.org.cn/internthinker/…

🥳Introducing #InternBootcamp, an easy-to-use and extensible library for training large reasoning models. Unlimited automatic question generation and result verification. Over 1,000 verifiable tasks covering logic, puzzles, algorithms, games, and more. 🤗github.com/InternLM/Inter…

🥳Thrilled to release the full RL training code of #OREAL! 😊Now you can fully reproduce the results of OREAL-7B/32B. Using #DeepSeek-R1-Distill-Qwen-32B, you can further obtain a model that scores 95.6 on MATH-500! 🤗Code: github.com/InternLM/OREAL 🤗Based on: github.com/InternLM/xtuner

🥳Introducing #OREAL, a new RL method for math reasoning. 😊With OREAL, a 7B model achieves 94.0 pass@1 on MATH-500, matching many 32B models, while OREAL-32B achieves 95.0 pass@1, surpassing #DeepSeek-R1 Distilled models. 🤗Paper/Model/Data: huggingface.co/papers/2502.06…

🥳With PowerServe, InternLM3-8B-Instruct runs on Android devices equipped with Qualcomm NPUs. 😉Feel free to try out our new model at internlm-chat.intern-ai.org.cn

📢 We've just integrated InternLM's models into the 🐫 CAMEL-AI framework! InternLM is a multilingual, multi-billion-parameter base model trained on trillions of tokens, and we now support their whole family of models. 🚀 Newly Supported Models: ✅ internlm3-latest ✅…

Now you can run InternLM3-8B on Qualcomm NPUs with PowerServe! reddit.com/r/LocalLLaMA/s…

🥳InternLM3-8B-Instruct takes on the easy problem from LeetCode Weekly Contest 431. 😉LeetCode Weekly Contest 431: leetcode.com/contest/weekly… 😉Feel free to try out our new model at internlm-chat.intern-ai.org.cn

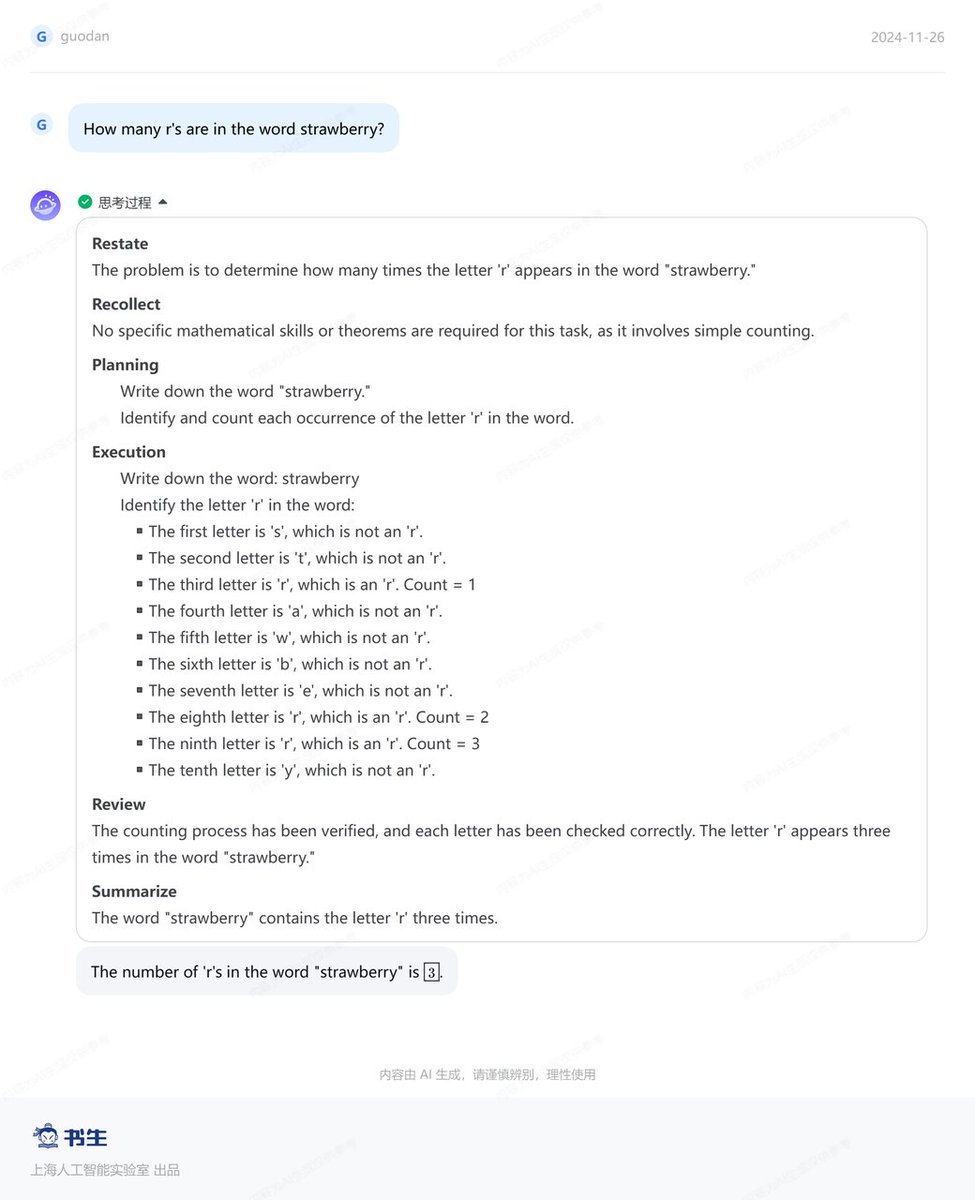

🥳Here are some popular examples from the InternLM3-8B-Instruct model. 😉Feel free to try out our new model at internlm-chat.intern-ai.org.cn.

I'm excited about the release of @intern_lm 3 8B Instruct. It's superior to Qwen 2.5 7B Instruct and Llama 3.1 8B Instruct, with the same architecture as Llama's. Feel free to use it with SGLang @lmsysorg. Cheers! github.com/InternLM/inter…

🥳🚀🥳Try it now on: internlm-chat.intern-ai.org.cn

🚀Introducing InternLM3-8B-Instruct with Apache License 2.0. -Trained on only 4T tokens, saving more than 75% of the training cost. -Supports deep thinking for complex reasoning and normal mode for chat. Model:@huggingface huggingface.co/internlm/inter… GitHub: github.com/InternLM/Inter…

🥳InternLM-XComposer2.5-OmniLive, a comprehensive multimodal system for long-term streaming video and audio interactions. Real-time visual & auditory understanding; long-term memory formation; natural voice interaction. Code: github.com/InternLM/Inter… Model: huggingface.co/internlm/inter…

🥳Now, you can sign up and log in to try #InternThinker using your email or GitHub account! 🤗Direct link to InternThinker: internlm-chat.intern-ai.org.cn/internthinker 😊Tips: To start a new conversation with InternThinker, simply click on "InternThinker" in the left sidebar.

🥳Introducing #InternThinker. 🤗A Powerful Reasoning Model! 🤗Advanced long-term thinking capabilities. 🤗Self-reflection and correction during reasoning. Excels at complex tasks like math, coding, and logic puzzles. 🥳Try it now at internlm-chat.intern-ai.org.cn/internthinker

Excited to have hosted the SGLang @lmsysorg talk yesterday with @GPU_MODE 's @marksaroufim and @hsu_byron. Thanks to FlashInfer @ye_combinator and LMDeploy @intern_lm teams for their support! youtube.com/watch?v=XQylGy…

🥳We have released new InternLM2.5 models in 1.8B and 20B sizes on @huggingface. 😉1.8B: Ultra-lightweight, high-performance, with great adaptability. 😉20B: More powerful, ideal for complex tasks. 😍Explore now! Models: huggingface.co/collections/in… GitHub: github.com/InternLM/Inter…