Ankur Gupta

@getpy

Tweets on Python, Technology, Software Development, Programming.

In the pre-LLM era, for a presentation at a meet-up or an internal event, I would have opened GIMP, Unsplash and ImageMagick, written custom Python code, and spent hours generating the equivalent of these images. I used Sora and ChatGPT for these; the colour selection of the logos will need tweaking…

Promise fulfilled! It took me 9 days of craziness, but my Context Engineering with DSPy tutorial is now out on YouTube. My next goal: getting my sleep schedule back on track.

Mental note/Social Promise: I need to make a proper video on Context Engineering.

In 1999 I moved to Bombay. The Internet craze was everywhere. Every hoarding and newspaper front page was occupied by an Internet company. The impact of Y2K was a subject of daily speculation. After college I did a temp job for 3-4 months at egurucool, writing HTML for some time, and later be campus…

Generate and iterate on code instantly. 40x faster than Sonnet-4. Free to use. Get started with @cline and @cerebrassystems below 👇

🚀 Just discovered Sniffly – a powerful open-source dashboard that helps you analyze your Claude Code logs. Co-built by @chipro and team 🔗 github.com/chiphuyen/snif… 📊 Demo: sniffly.dev 1️⃣ Understand your usage patterns Sniffly gives you visual insights into how…

If you have a small dataset and want to do a quick data analysis to find metrics and insights, give @AskPrisma a try. It's in beta but promising.

i'm building @AskPrisma; for today's screencast i explored insights from a hypertension factors dataset available on kaggle let me know what you would love to see; anything that sparks your curiosity - i'm just a DM away / link to the full exploration below

I took 6 open models that can run on my humble 16 GB M2 Mac mini and ran them against my self-acquired news dataset for summarisation, sentiment analysis and entity detection. Setting entity detection aside, phi3 3.8b mini 128k instruct scores as well as gemini 1.5 flash with…

If you are looking to do just summarisation, sentiment analysis and entity extraction on a budget, gemini-1.5-flash-8b is dirt cheap compared with others and still accurate. In my use case, inputs average 4000-6000 tokens.
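A back-of-the-envelope way to compare per-token pricing for a workload like this one. This is a minimal sketch: the per-million-token prices in the usage line are hypothetical placeholders, not actual Gemini rates, so plug in the provider's current published prices. The 5,000-token input is the midpoint of the 4000-6000 range above; the output length and document count are made up for illustration.

```python
def estimate_cost_usd(num_docs: int, avg_input_tokens: int, avg_output_tokens: int,
                      price_in_per_m: float, price_out_per_m: float) -> float:
    """Estimate the USD cost of sending num_docs requests to a per-token-priced LLM API.

    price_in_per_m / price_out_per_m are USD per 1M input / output tokens.
    """
    input_millions = num_docs * avg_input_tokens / 1_000_000
    output_millions = num_docs * avg_output_tokens / 1_000_000
    return input_millions * price_in_per_m + output_millions * price_out_per_m


# Hypothetical prices for illustration only; replace them with real, current rates.
cost = estimate_cost_usd(num_docs=10_000, avg_input_tokens=5_000, avg_output_tokens=300,
                         price_in_per_m=0.04, price_out_per_m=0.15)
print(f"~${cost:.2f} for 10,000 documents")
```

Because input tokens dominate at 4000-6000 tokens per request, the input price is the number that matters most when comparing models for this kind of workload.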

New SOTA for 26-circle packing: @ypwang61 achieved 2.635977 sum of radii using OpenEvolve (evolutionary optimization framework). Progress: AlphaEvolve paper reported 2.635, OpenEvolve made improvements, now new record at 2.635977. #CirclePacking #SOTA #Optimization #OpenEvolve
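For context on what "sum of radii" measures: as I understand the AlphaEvolve benchmark, the task is to place 26 non-overlapping circles inside a unit square so that the sum of their radii is as large as possible. The sketch below assumes that setup and only checks and scores a candidate packing; it does not search for one (that is the job of OpenEvolve's evolutionary loop). The function name `packing_score` and the tolerance value are illustrative choices.

```python
import math


def packing_score(circles, tol=1e-9):
    """Return the sum of radii if the packing is valid, otherwise raise ValueError.

    circles: list of (x, y, r) tuples. The container is assumed to be the
    unit square [0, 1] x [0, 1]; touching circles are allowed.
    """
    for i, (x, y, r) in enumerate(circles):
        if r <= 0:
            raise ValueError(f"circle {i} has a non-positive radius")
        # Every circle must lie entirely inside the unit square.
        if x - r < -tol or x + r > 1 + tol or y - r < -tol or y + r > 1 + tol:
            raise ValueError(f"circle {i} leaves the unit square")
    for i in range(len(circles)):
        xi, yi, ri = circles[i]
        for j in range(i + 1, len(circles)):
            xj, yj, rj = circles[j]
            # Centres must be at least r_i + r_j apart, otherwise the circles overlap.
            if math.hypot(xi - xj, yi - yj) < ri + rj - tol:
                raise ValueError(f"circles {i} and {j} overlap")
    return sum(r for _, _, r in circles)


# A trivial 4-circle grid packing, just to exercise the checker.
grid = [(0.25, 0.25, 0.25), (0.75, 0.25, 0.25), (0.25, 0.75, 0.25), (0.75, 0.75, 0.25)]
print(packing_score(grid))
```

A record claim like 2.635977 for 26 circles is only meaningful together with a checker of this kind, since an optimizer can otherwise "win" by slightly overlapping circles or pushing them past the boundary.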


Matt is on to something that @michaelryan207 and @kristahopsalong tinkered with in 2023 before building dspy.MIPROv2. He's saying: turn a small LLM into a prompt rewriter and use policy gradient RL (like PPO) to reinforce its prompt rewrites. This hasn't actually worked yet;…
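A toy sketch of the idea, under heavy simplifying assumptions: instead of a small LLM generating free-form rewrites, the "policy" is a softmax over three fixed rewrite templates, and `hypothetical_reward` stands in for running and scoring the downstream task. It uses REINFORCE with a running-average baseline rather than PPO; PPO builds on the same policy-gradient estimate but adds a clipped surrogate objective to keep each update small. All names here (`REWRITES`, `hypothetical_reward`, `train`) are hypothetical and not part of DSPy.

```python
import math
import random

# Hypothetical rewrite "actions"; in the real idea a small LLM would generate these.
REWRITES = [
    "{q}",
    "Answer concisely: {q}",
    "Think step by step, then answer: {q}",
]


def hypothetical_reward(prompt: str) -> float:
    # Stand-in for "run the downstream program with this prompt and score it".
    return 1.0 if prompt.startswith("Think step by step") else 0.2


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(question: str, steps: int = 500, lr: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    logits = [0.0] * len(REWRITES)
    baseline = 0.0  # running average reward, used to reduce gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        # Sample a rewrite from the current policy.
        a = rng.choices(range(len(REWRITES)), weights=probs)[0]
        reward = hypothetical_reward(REWRITES[a].format(q=question))
        advantage = reward - baseline
        # REINFORCE: d log pi(a) / d logit_k = 1[k == a] - probs[k].
        for k in range(len(logits)):
            logits[k] += lr * advantage * ((1.0 if k == a else 0.0) - probs[k])
        baseline += 0.05 * (reward - baseline)
    return softmax(logits)


print(train("What is 2 + 2?"))
```

With this made-up reward, probability mass drifts toward the third template. The sketch says nothing about whether the approach works with a real LLM rewriter and a noisy task metric.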

Yes, we all know what happened when devs who didn't understand how a single GraphQL query translates into N backend API calls, which in turn translate into N DB queries, were given free rein.
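A minimal sketch of the fan-out problem described above (often called the N+1 query problem), using a hypothetical in-memory `FakeDB` that only counts queries; no real GraphQL library is involved. The naive resolver issues one query per author, while the batched version collects all keys first and issues a single query, the pattern popularised by Facebook's DataLoader.

```python
from collections import defaultdict


class FakeDB:
    """Hypothetical in-memory stand-in for a database that counts the queries it receives."""

    def __init__(self, posts):
        self.posts = posts  # list of dicts with "id" and "author_id"
        self.query_count = 0

    def posts_by_author(self, author_id):
        self.query_count += 1  # one round trip per call
        return [p for p in self.posts if p["author_id"] == author_id]

    def posts_by_authors(self, author_ids):
        self.query_count += 1  # one round trip, like a single IN (...) query
        wanted = set(author_ids)
        grouped = defaultdict(list)
        for p in self.posts:
            if p["author_id"] in wanted:
                grouped[p["author_id"]].append(p)
        return grouped


def resolve_naive(db, author_ids):
    # One query per author: the "N" in N+1 (the "+1" fetched the authors themselves).
    return {a: db.posts_by_author(a) for a in author_ids}


def resolve_batched(db, author_ids):
    # DataLoader-style batching: gather every key, then issue a single query.
    grouped = db.posts_by_authors(author_ids)
    return {a: grouped.get(a, []) for a in author_ids}


posts = [{"id": 1, "author_id": 1}, {"id": 2, "author_id": 2},
         {"id": 3, "author_id": 1}, {"id": 4, "author_id": 3}]
naive_db, batched_db = FakeDB(posts), FakeDB(posts)
naive = resolve_naive(naive_db, [1, 2, 3])
batched = resolve_batched(batched_db, [1, 2, 3])
print(naive_db.query_count, batched_db.query_count)  # 3 1
```

Both resolvers return the same data; only the number of round trips differs, and that gap grows with every nested field a client is free to request.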

apis are overrated. just let the frontend talk to the database. it's faster, simpler, and honestly it's just better.

For those of us who are broke on AI quotas, remember it doesn’t cost a penny to get generous access to Gemini and Claude via @julesagent and @kirodotdev respectively. Offer valid while it lasts 😅

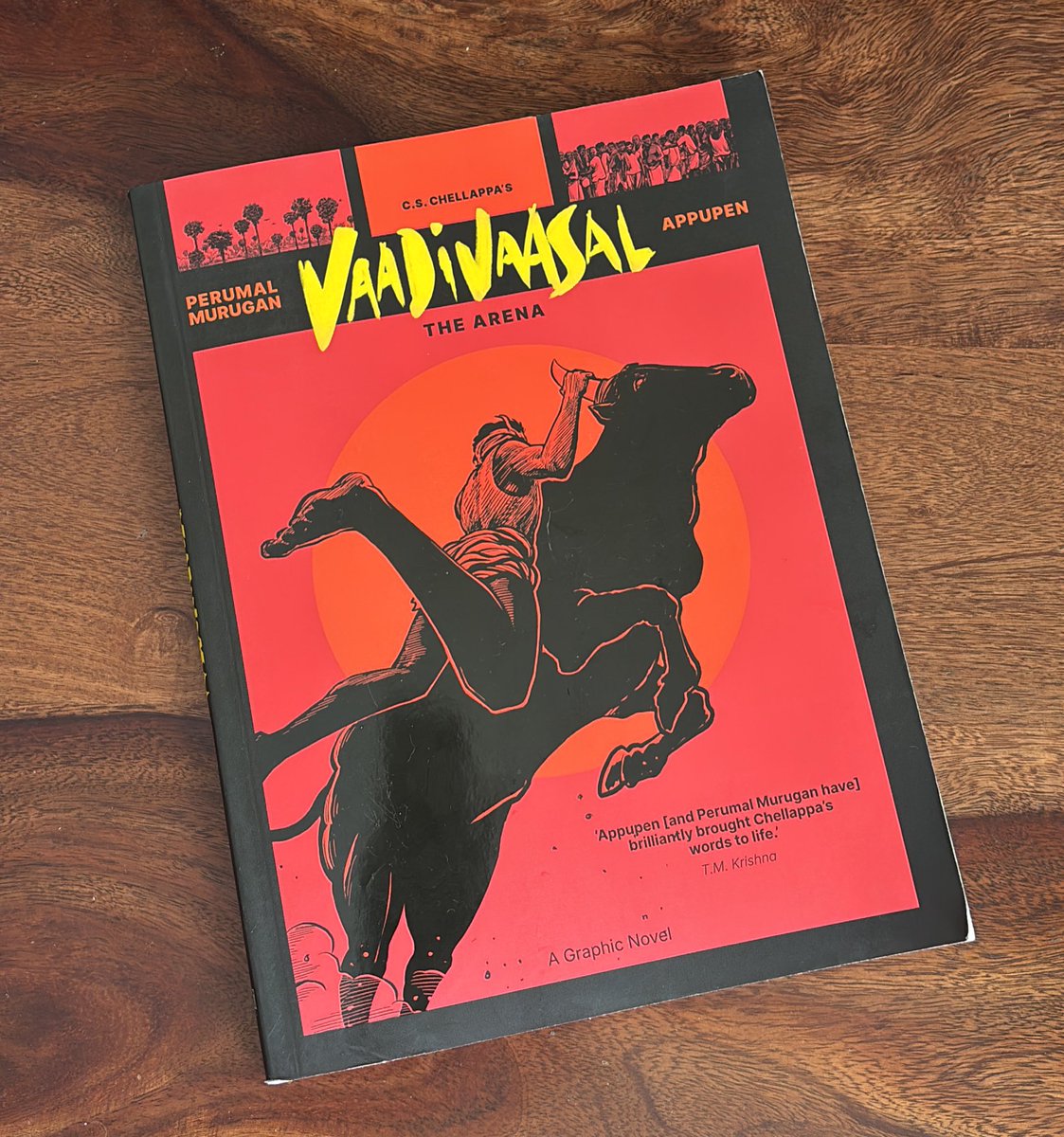

Hands down the best Indian 🇮🇳 graphic novel I have read to date. Authentic rural Indian setting, linear storytelling with one flashback. Art.

Naive question 🙋 What happens once people start moving to 64-128 GB RAM machines and can run highly effective, purpose-built small models that serve their needs? I think it will happen; the question is on what timeline.
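A rough way to reason about which models fit on such machines: weight memory is about parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule. The 75% headroom factor is an assumed rule of thumb, and the estimate ignores the KV cache, context length and runtime overhead, so treat it as a lower bound. The 70B model is a hypothetical example size.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes).

    1e9 params * bits / 8 bytes = params_billion * bits / 8 GB.
    """
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: float, ram_gb: float,
         headroom: float = 0.75) -> bool:
    """True if the weights alone use at most `headroom` of the machine's RAM.

    The 0.75 default is an assumption that leaves room for the OS, KV cache
    and runtime; real requirements depend on context length and the runtime.
    """
    return weights_gb(params_billion, bits_per_weight) <= ram_gb * headroom


for params, bits in [(3.8, 4), (70, 4), (70, 16)]:
    for ram in (16, 64, 128):
        print(f"{params}B @ {bits}-bit on {ram} GB: {fits(params, bits, ram)}")
```

By this estimate, a 4-bit 70B model needs about 35 GB for weights alone, which is roughly where a 64 GB machine starts to become comfortable.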

NEWS: Mark Zuckerberg has just announced that Meta will be spending hundreds of billions of dollars to build massive GPU compute clusters. Mark: "We're building several multi-GW clusters. We're calling the first one Prometheus and it's coming online in '26. We're also building…

You can now run Kimi K2 locally with our Dynamic 1.8-bit GGUFs! We shrank the full 1.1TB model to just 245GB (-80% size reduction). The 2-bit XL GGUF performs exceptionally well on coding & passes all our code tests. Guide: docs.unsloth.ai/basics/kimi-k2 GGUFs: huggingface.co/unsloth/Kimi-K…
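As a quick sanity check on the quoted figure, treating 1.1 TB as 1,100 GB:

$$
1 - \frac{245\ \text{GB}}{1100\ \text{GB}} \approx 1 - 0.223 = 0.777
$$

That is a reduction of about 78%, which the announcement rounds to 80%.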