Chen Geng

@gengchen01

CS Ph.D. Student @Stanford. Previously Hons. B.Eng. in CS @ZJU_China.

Ever wondered how roses grow and wither in your backyard?🌹 Our latest work on generating 4D temporal object intrinsics lets you explore a rose's entire lifecycle—from birth to death—under any environment light, from any viewpoint, at any moment. Project page:…

📷 New Preprint: SOTA optical flow extraction from pre-trained generative video models! While it seems intuitive that video models grasp optical flow, extracting that understanding has proven surprisingly elusive.

We prompt a generative video model to extract state-of-the-art optical flow, using zero labels and no fine-tuning. Our method, KL-tracing, achieves SOTA results on TAP-Vid & generalizes to challenging YouTube clips. @khai_loong_aw @KlemenKotar @CristbalEyzagu2 @lee_wanhee_…

🚀 We release SpatialTrackerV2: the first feedforward model for dynamic 3D reconstruction and 3D point tracking — all at once! Reconstruct dynamic scenes and predict pixel-wise 3D motion in seconds. 🔗 Webpage: spatialtracker.github.io 🔍 Online Demo: huggingface.co/spaces/Yuxihen…

In our #ICCV2025 work WonderPlay, we study how to combine physical simulation with a video generative prior to enable 3D action interaction with the world from a single image! Check the 🧵 for more details!

#ICCV2025 🤩3D world generation is cool, but it is cooler to play with the worlds using 3D actions 👆💨, and see what happens! — Introducing *WonderPlay*: Now you can create dynamic 3D scenes that respond to your 3D actions from a single image! Web: kyleleey.github.io/WonderPlay/ 🧵1/7

🤖 Household robots are becoming physically viable. But interacting with people in the home requires handling unseen, unconstrained, dynamic preferences, not just a complex physical domain. We introduce ROSETTA: a method to generate reward for such preferences cheaply. 🧵⬇️

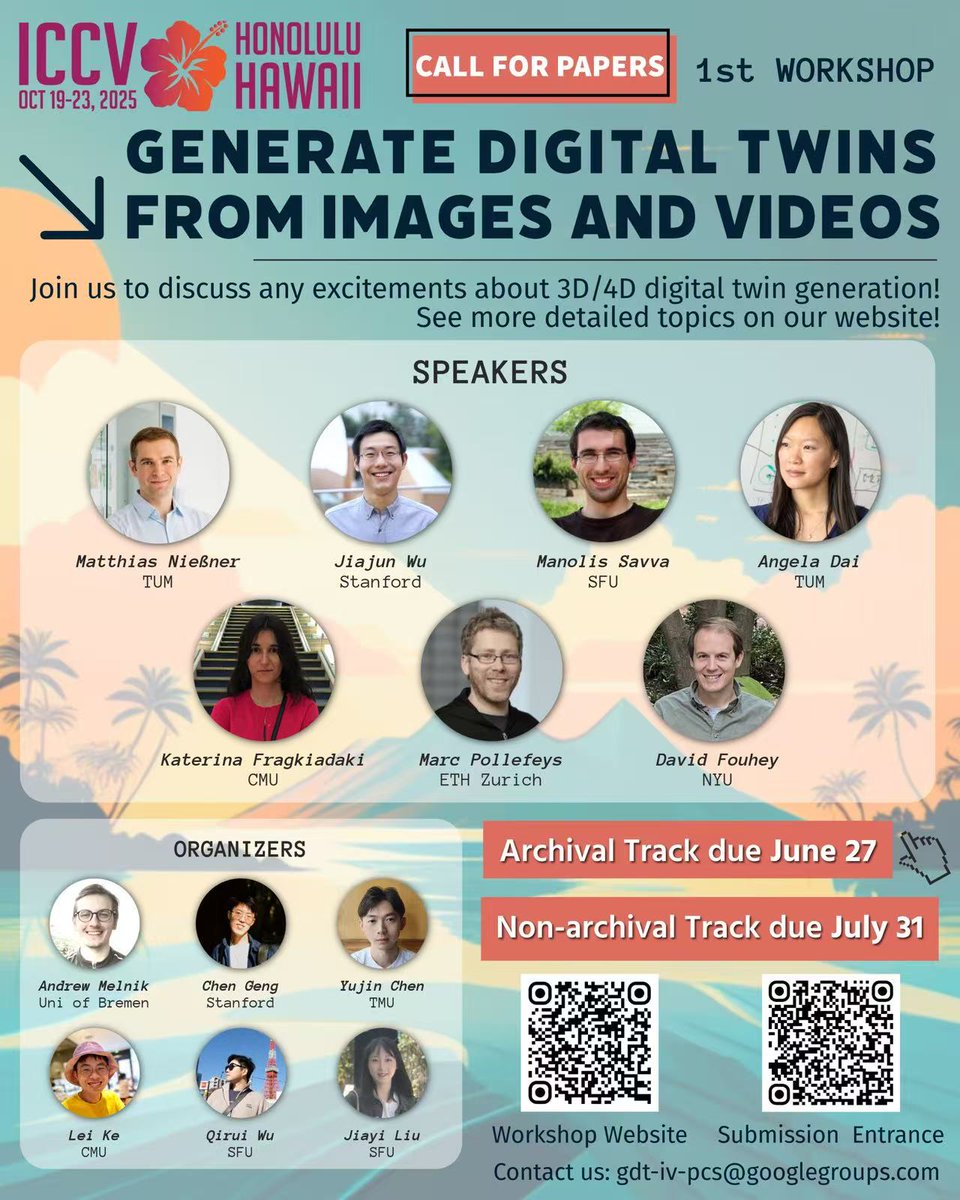

📢 Call for Papers - We are organizing @ICCVConference Workshop on Generating Digital Twins from Images and Videos (gDT-IV) at #ICCV2025! We welcome submissions in two tracks: 📅 Deadline for Archival Paper Track: June 27 ⏰ Deadline for Non-Archival Paper Track: July 31 🌐…

(1/n) Time to unify your favorite visual generative models, VLMs, and simulators for controllable visual generation—Introducing a Product of Experts (PoE) framework for inference-time knowledge composition from heterogeneous models.

No labels, no priors -- just learning from raw data. Our latest work learns unified 4D motion representations for dynamic objects in a fully self-supervised way. Check out this work led by our awesome intern @AlexHe00880585! 🚀

💫 Animating 4D objects is complex: traditional methods rely on handcrafted, category-specific rigging representations. 💡 What if we could learn unified, category-agnostic, and scalable 4D motion representations — from raw, unlabeled data? 🚀 Introducing CANOR at #CVPR2025: a…

🪄Introducing Anymate—a large-scale dataset of 230K 3D assets with rigging and skinning annotations! With this dataset, we trained an auto-rigging model and benchmarked a variety of architectures! 🔥Turn static assets into animatable ones in seconds: huggingface.co/spaces/yfdeng/…

How to scale visual affordance learning that is fine-grained, task-conditioned, works in-the-wild, in dynamic envs? Introducing Unsupervised Affordance Distillation (UAD): distills affordances from off-the-shelf foundation models, *all without manual labels*. Very excited this…

🤖Introducing TWIST: Teleoperated Whole-Body Imitation System. We develop a humanoid teleoperation system to enable coordinated, versatile, whole-body movements, using a single neural network. This is our first step toward general-purpose robots. 🌐humanoid-teleop.github.io

🔥Spatial intelligence requires world generation, and now we have the first comprehensive evaluation benchmark📏 for it! Introducing WorldScore: Unifying evaluation for 3D, 4D, and video models on world generation! 🧵1/7 Web: haoyi-duan.github.io/WorldScore/ arxiv: arxiv.org/abs/2504.00983

One day left before the submissions close!

Submission deadline has been extended by a week to April 4. Submit your latest 4D work to the workshop @CVPR: 4D Gaussians, point tracking, dynamic SLAMs, egocentric, human motion, multi-modal world models, embodied AI... you name it! 4dvisionworkshop.github.io

🎉 Our paper "PGC: Physics-Based Gaussian Cloth from a Single Pose" has been accepted to #CVPR2025! 👕 PGC uses a PBR + 3DGS representation to render simulation-ready garments under novel lighting and motion, all from a single static frame. ✨Web: phys-gaussian-cloth.github.io 🧵1/4

🔥Want to capture 3D dancing fluids♨️🌫️🌪️💦? No specialized equipment, just one video! Introducing FluidNexus: Now you only need one camera to reconstruct 3D fluid dynamics and predict future evolution! 🧵1/4 Web: yuegao.me/FluidNexus/ Arxiv: arxiv.org/pdf/2503.04720

Extracting structure that’s implicitly learned by video foundation models _without_ relying on labeled data is a fundamental challenge. What’s a better place to start than extracting motion? Temporal correspondence is a key building block of perception. Check out our paper!

New paper on self-supervised optical flow and occlusion estimation from video foundation models. @sstj389 @jiajunwu_cs @SeKim1112 @Rahul_Venkatesh tinyurl.com/dpa3auzd

Can we reconstruct relightable human hair appearance from real-world visual observations? We introduce GroomLight, a hybrid inverse rendering method for relightable human hair appearance modeling. syntec-research.github.io/GroomLight/

Spatial reasoning is a major challenge for today's foundation models, even in simple tasks like arranging objects in 3D space. #CVPR2025 Introducing LayoutVLM, a differentiable optimization framework that uses a VLM to spatially reason about diverse scene layouts from unlabeled…

Modern generative models of images and videos rely on tokenizers. Can we build a state-of-the-art discrete image tokenizer with a diffusion autoencoder? Yes! I’m excited to share FlowMo, with @kylehkhsu, @jcjohnss, @drfeifei, @jiajunwu_cs. A thread 🧵: