Divyashree Sreepathihalli

@divyasheess

Founder KerasHub | Keras Lead AI @google

Of the top 20 US tech companies by market cap, only 1 is headquartered in SF (Salesforce). That's fewer than Austin (which has 2). The bulk of US tech companies are headquartered in Santa Clara County (11 out of the top 20). The nearest major city (by a lot) is San Jose. SF is…

There are only four tier-1 cities in the 🇺🇸:
* New York (finance)
* DC (government)
* San Francisco (tech)
* LA (media & entertainment)
No other cities are power centers for aspirational talent. Sorry.

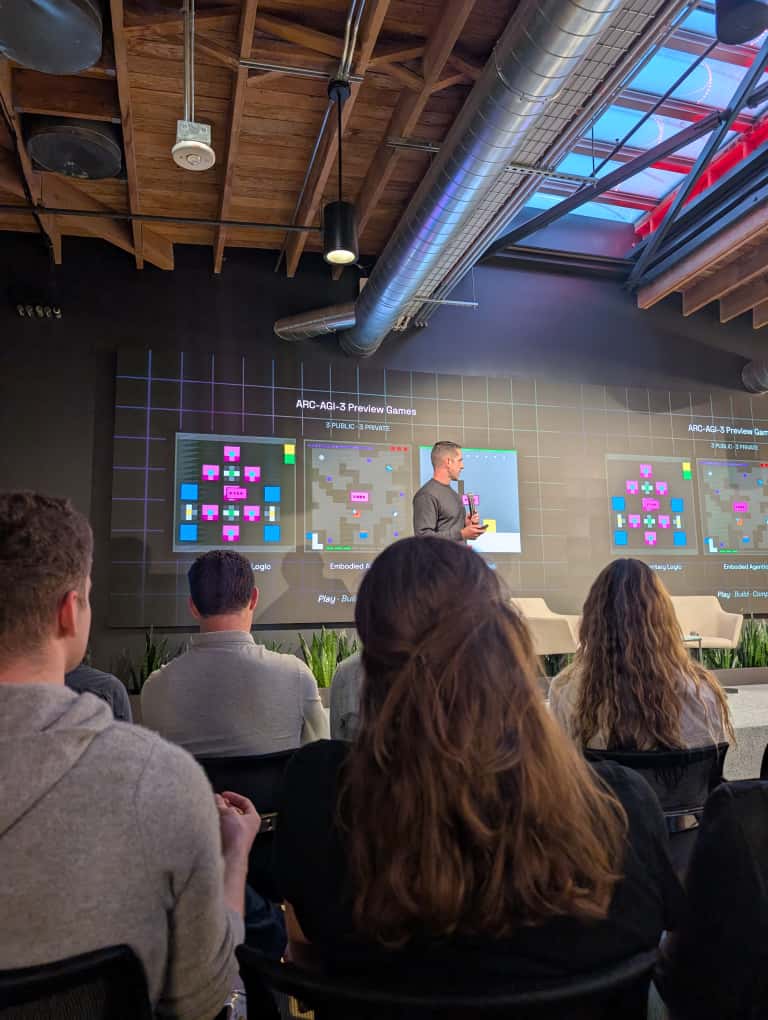

Hear @GregKamradt talk about ARC-AGI-3 with @swyx and @FanaHOVA on @latentspacepod:
* Why interactive benchmarks?
* Defining intelligence
* Playthrough of ARC-AGI-3 games

KerasHub now includes HGNetV2! We’re excited to bring the high-efficiency, high-accuracy HGNetV2 image classification backbone into KerasHub’s model family. Model details and quickstart notebook are available on Kaggle: kaggle.com/models/keras/h… #keras #kerashub #HGNet

Today, we're announcing a preview of ARC-AGI-3, the Interactive Reasoning Benchmark with the widest gap between easy for humans and hard for AI.
We’re releasing:
* 3 games (environments)
* $10K agent contest
* AI agents API
Starting scores: Frontier AI: 0%, Humans: 100%

Today we're releasing a developer preview of our next-gen benchmark, ARC-AGI-3. The goal of this preview, leading up to the full version launch in early 2026, is to collaborate with the community. We invite you to provide feedback to help us build the most robust and effective…

A problem well-defined is a problem half-solved. The ARC-AGI-3 benchmark really stood out in how precisely it frames the challenge of achieving AGI. Now for the other half... 📚🔍🧐 @arcprize @fchollet @GregKamradt @mikeknoop #AI #ARCAGI #AGIRevolution

The best part of the Superman movie? Krypto 😍 #mvp

Excited to attend the ARC-AGI-3 developer preview 🤩

Btw, we're hosting a private event for the launch of the ARC-AGI-3 developer preview next week in SF: July 17th, 6pm. Open to sponsors, donors, and researchers. Several big announcements coming. Also, @dwarkesh_sp will be hosting our fireside chat. Link in next tweet.

Trying to integrate NNX into Keras is great!
.
.
If you're into gaslighting, but from your stack trace 😛

Did you know that you can load checkpoints from Hugging Face Hub directly into a KerasHub model? That means you can load model weights from other frameworks, like using PyTorch weights with JAX or TensorFlow. Check out this blog post to learn how → goo.gle/4llxPIY

KerasHub lets you use any Hugging Face checkpoint for all top models like Llama, Gemma, Mistral, etc. Run your workflows (inference, LoRA fine-tuning, large-scale training from scratch) in JAX, PyTorch, or TensorFlow. Blog post: developers.googleblog.com/en/load-model-…

On Monday, a United States District Court ruled that training LLMs on copyrighted books constitutes fair use. A number of authors had filed suit against Anthropic for training its models on their books without permission. Just as we allow people to read books and learn from them…

Here's more detail on how to load a Hugging Face checkpoint into a KerasHub model. Thanks for the walkthrough, @yufengg, @divyasheess, and @monicadsong! developers.googleblog.com/en/load-model-…

You can find performance & scale optimized JAX models in MaxText and MaxDiffusion:
* github.com/AI-Hypercomput…
* github.com/AI-Hypercomput…
You can also use Keras / JAX to tune many Hugging Face Transformers model checkpoints by loading them into a KerasHub model. It's pretty cool!…

Want to use Hugging Face Transformers checkpoints in JAX and on TPUs? Check out this blog post! developers.googleblog.com/en/load-model-…

🙈😂

Credit to @divyasheess for being the catalyst for this joke 🤣

KerasHub is a collection of over 70 popular pretrained model architectures -- LLMs, VLMs, image generation models, etc. -- that work with JAX, TF, and PyTorch. They all support Hugging Face checkpoints -- you can load any HF model with them for the corresponding architecture.

Need a transformer equivalent in JAX, TF, and PyTorch? One that can load from an HF checkpoint? Check out KerasHub: google.com/url?sa=t&sourc…

I have bittersweet news to share. Yesterday we merged a PR deprecating TensorFlow and Flax support in transformers. Going forward, we're focusing all our efforts on PyTorch to remove a lot of the bloating in the transformers library. Expect a simpler toolkit, across the board.

The next Keras community online meetup will be this Friday at 11AM PT! The team will be presenting the latest developments, in particular Keras Recommenders.

Docs: keras.io/api/rematerial… You can use keras.remat inside your own custom layers, or you can use keras.remat_scope(mode) to selectively apply it to any existing layer / model you're using