Carlos De la Guardia

@dela3499

🎙️ Podcast: AGI with Carlos. Fellow at @ConjectureInst. https://carlosd.substack.com/

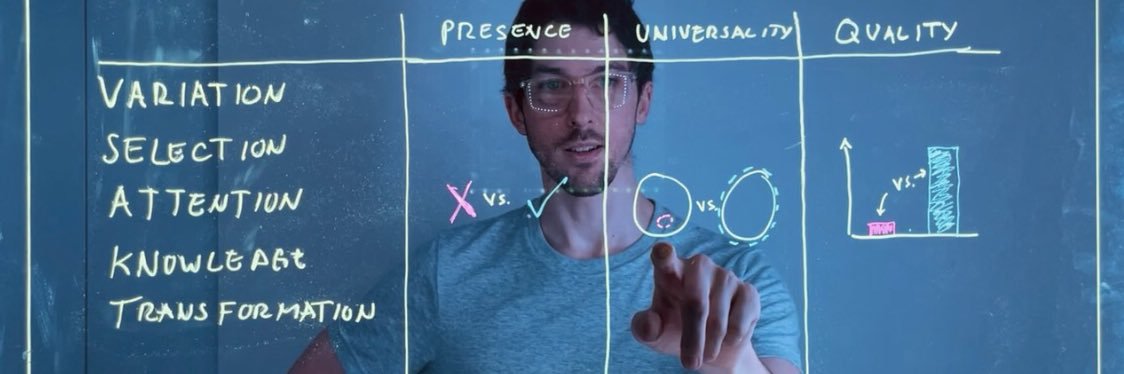

Had a great chat with @ToKTeacher about artificial general intelligence!

Conjecture Institute Fellow Carlos De La Guardia @dela3499 explains his unique Karl Popper/@DavidDeutschOxf inspired approach to AGI, thoughts about AI and intelligence more broadly and other topics. youtu.be/X61kFjB2XGg

When writing a book, it's much easier to discover, invent, and refine... *a* structure for *some* of your ideas than *the* structure for *all* your ideas. In other words, it's far easier to continually discover little pockets of structure than to construct a complete…

The first thing you're supposed to do when writing a book is create an outline. I suspect that's wrong. An outline specifies a single, good ordering and grouping of all your ideas. But that's devilishly hard to produce upfront. I think it makes more sense to say: I don't know…

Austin Lyft experiences today: “Please let me know if I can improve your experience in any way.” Then BLASTS a random podcast at full volume. Then jerks the car toward the destination by tapping-then-releasing the gas every 2 seconds.

LLM weights, neural connections in brains, and the code for any program are ALL meaningless unless you know how to interpret them. If you do, they’re ALL meaningful. It’s possible to do this for the brain. It’s not magic. Kurzweil (and everybody else) may upload and live.

Information is scale-independent. Your name can be written with atoms or planets. Makes no difference. Some of your information is written on paper, or displayed on screens, so your eyes can see it. The same information is often stored in ultra-tiny things in your brain or hard…

Explanatory theories are instantiated in programs. If a system can create any kind of program, then it's therefore capable of creating the subset that is "explanatory". So, a random program generator could in principle produce *any* explanatory theory.

Whenever I have a good research day, as I did today, I feel rich. Rich in the sense of feeling close to a wealth of things yet to be discovered and created. Without that, the world can begin to seem known, small, and unpleasant. Hard to expand. Hard to move.

something kinda neat about ai slop is it starts to give people an actual vision into what a radical uploadcore simulationist future could look like. u say shit like "u could make ur reality whatever u want" and people have no idea what to imagine. goldfish keyboards that's what

He’s right about the importance of ideas. But he’s wrong about universality. Humans are indeed universal, and *any* baby could grow up to become a member of *any* culture. Once you have ideas and culture, however, they’re tremendously important and difficult to change.

Humans may seem to sit still, but our minds are like cheetahs - extremely fast, agile, and flexible. It's just that our prey - and our pursuit of it - are all *abstract* rather than physical. Btw, that mastery over the abstract leads to mastery over the physical. If we chose,…