David Clark (david-g-clark.bsky.social)

@d_g_clark

Theoretical neuroscience grad student at Columbia (he/him) 🏳️‍🌈

Very happy to share a preprint: “Symmetries and Continuous Attractors in Disordered Neural Circuits” Detailed accompanying thread is on the bluer, more celestial app 😊 biorxiv.org/content/10.110…

New preprint! "Simplified derivations for high-dimensional convex learning problems" Accompanying tweetprint is available EXCLUSIVELY on david-g-clark.bsky.social (I'll probably post from there going forward) arxiv.org/abs/2412.01110

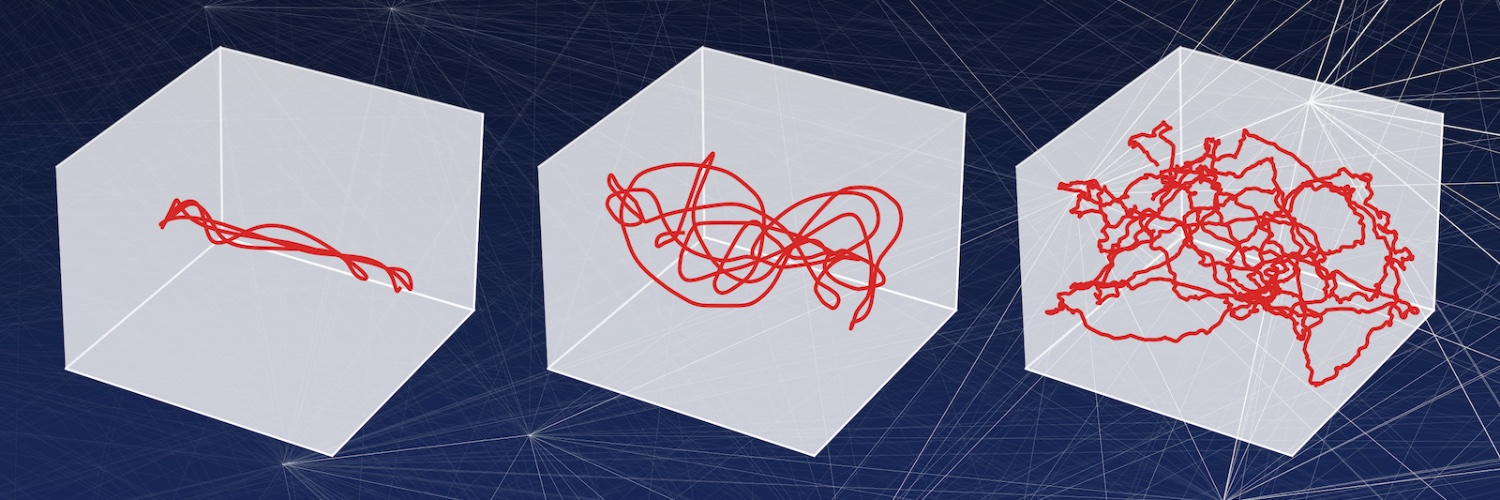

Even very small networks can be tuned to maintain continuous internal representations, but this comes at the cost of sensitivity to noise and to variations in tuning. @MarcellaNoorman et al. nature.com/articles/s4159…

Our work with Kenneth Kay, on training plastic neural networks to discover a plausible neural model of relational learning and transitive inference, has been accepted in @NatureNeuro! Preprint and code: github.com/thomasmiconi/t…

New paper! We meta-train plastic networks to do transitive inference (learn A>B & B>C, infer A>C) Training discovers a new neural learning algorithm, that reinstates past items in memory to quickly reconfigure existing knowledge upon learning a new item biorxiv.org/content/10.110…

Thrilled to share our new preprint on optimal replay protocols for continual learning using stat phys + control theory! Very excited about this direction 🚀

Starting too many projects and forgetting the ones you still need to wrap up? Don’t worry, neural networks do that too! In our new preprint (arxiv.org/abs/2409.18061), @stefsmlab, @framigna, and I study catastrophic forgetting in high-dimensional continual learning.

New work with @Jack_W_Lindsey, Larry Abbott, Dmitriy Aronov, and @selmaanchettih! We propose a model of episodic memory where memories are bound to “barcode” activity patterns, enabling precise and flexible memory. biorxiv.org/content/10.110…