Bohang Zhang @ICLR 2024

@bohang_zhang

PhD at @pku1898, focusing on topics in ML, GNNs, and LLMs from an expressivity perspective

Thrilled to see our work honored with #ICLR2023 𝗢𝗨𝗧𝗦𝗧𝗔𝗡𝗗𝗜𝗡𝗚 𝗣𝗔𝗣𝗘𝗥 𝗔𝗪𝗔𝗥𝗗! Camera-ready paper: openreview.net/forum?id=r9hNv… Code: github.com/lsj2408/Grapho… Our paper covers: GNNs, graph transformers, expressivity, graph positional encodings, subgraph GNNs, and more!

Announcing ICLR 2023 outstanding paper award: blog.iclr.cc/2023/03/21/ann…. Congratulations to the authors!

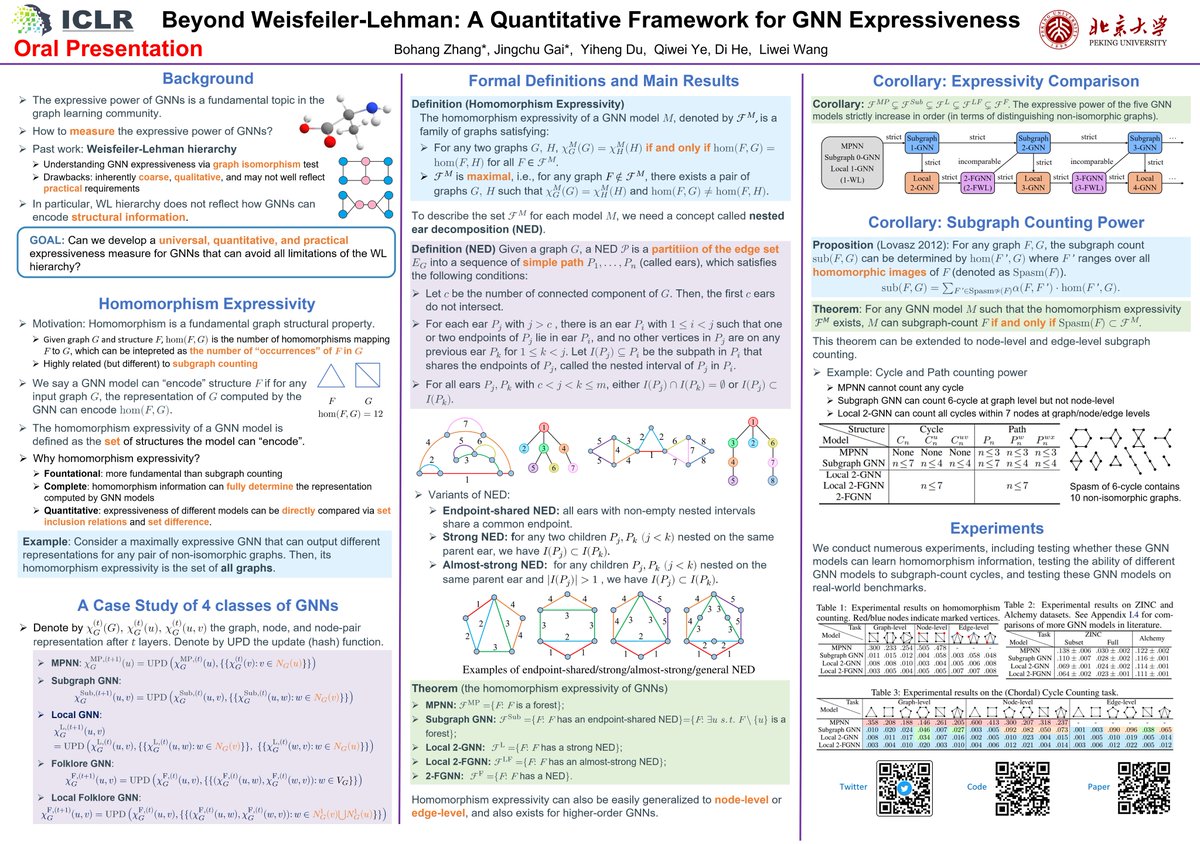

#ICLR2024 Just arrived in Vienna! Don't miss our oral presentation tomorrow afternoon in room Halle A3, focusing on 𝗚𝗡𝗡𝘀 and their 𝗲𝘅𝗽𝗿𝗲𝘀𝘀𝗶𝘃𝗲 𝗽𝗼𝘄𝗲𝗿! Also, swing by our poster session (Poster272, Halle B). See you there! 🌟

Great work!

#ICLR2024 Arrived in Vienna! Happy to share our recent work 𝘁𝗼𝘄𝗮𝗿𝗱𝘀 𝗲𝗳𝗳𝗶𝗰𝗶𝗲𝗻𝘁 𝗮𝗻𝗱 𝗲𝗳𝗳𝗲𝗰𝘁𝗶𝘃𝗲 𝗴𝗲𝗼𝗺𝗲𝘁𝗿𝗶𝗰 𝗱𝗲𝗲𝗽 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗳𝗼𝗿 𝘀𝗰𝗶𝗲𝗻𝗰𝗲! With incredible CTL and @ask1729! May 9, 10:45am-12:45pm (Poster254, Halle B). Details⬇️ (1/n)

To reduce human bias in model architecture, we propose a simple yet effective LLM-like visual framework, called GiT, that handles various vision tasks (e.g., VL tasks and segmentation) using only a vanilla ViT. :) Code: github.com/Haiyang-W/GiT arxiv.org/abs/2403.09394

RNNs are popular, but they have known limitations: they cannot solve some algorithmic problems that Transformers can. Our new paper "RNNs Are NOT Transformers (Yet)" explores the representation gap between constant-memory RNNs and Transformers and potential ways to bridge it.

Join us at our #ICLR2024 workshop: "Bridging the Gap Between Practice and Theory in Deep Learning"! Workshop website: sites.google.com/view/bgpt-iclr…

🎉 Excited for #ICLR2024? Join us at our vibrant workshop: "Bridging the Gap Between Practice and Theory in Deep Learning"! 🚀Dive into a melting pot of groundbreaking theories 📘 and empirical discoveries 🧪 that illuminate the enigmatic world of deep learning. (1/3)