Bishop Fox

@bishopfox

A leading provider of #offensivesecurity solutions & contributor to the #infosec community. #pentesting #hacking VC @forgepointcap @carrickcapital @WestCap8

Tune in: @AletheDenis joins #TheHackersCache podcast to talk about the ethical limits of red teaming, psychology, and what it takes to social engineer at the highest level: bfx.social/4odNZ9F

Missed Breaking AI live? It’s now on demand. Brian D. breaks down how to actually test LLMs by breaking the mind, not the code. Watch it here → bfx.social/4lLbxB6

Running Sitecore 10.1–10.3? Update now. A new vuln chain starts with a hardcoded “b” password and ends in full system compromise. Here’s how it works and how to fix it: bfx.social/40yhHvO

Still staging Sliver implants the hard way? Watch the VOD of our latest workshop on automation, staging, and SliverPy. Hosted by Senior Red Teamer Tim Makram Ghatas → bfx.social/4nYIzz9

Last month, @AletheDenis virtually joined the inaugural Predictive Cyber Day at @Sorbonne_Univ_ in Paris. Her session, Beware of Social Engineering Traps, was part of a packed security track alongside leaders from @Airbus, @Credit_Agricole, and more. Catch her live at 8 p.m. PST…

Today we’re proud to sponsor BSides Mexico City 🇲🇽 We’ve got three sessions, including hands-on workshops on cloud security and mobile bypasses, plus lessons from real-world breaches. If you’re there, swing by and meet Juan Jasso, @Steeven_Rod17, @M41w4r31, and Abdel Bolivar.…

Superhero throwdowns, cyberpunk classics, and yes, even the eternal "Betty or Veronica?" debate. Ahead of @AletheDenis’s Inside the Hacker’s Mind panel at #SDCC, we asked the team: What comics left a mark on you? Our picks reveal a lot about how we think, hack, and see the world:…

🔴 We’re live! Join us now for Breaking AI: Inside the Art of LLM Pen Testing. Tap in: bfx.social/40l6bDT #AIsecurity #LLM

Last call! Join us tomorrow for Breaking AI: Inside the Art of LLM Pen Testing. Learn how attackers are actually manipulating LLMs and why prompt engineering isn’t enough: bfx.social/3IwIodX

LLMs don’t break like traditional software. Pen testing AI means understanding narrative, tone, and context, not just prompt injection. Security Consultant Brian D. breaks it down: bfx.social/4kDRgfq

Sneakers hits the screen in Brooklyn tonight! Join us at 9 p.m. at @littlefieldnyc for a special @SummerC0n screening of the cult classic that inspired our name. Candy bar included. Chaos encouraged.

We’re heading to @bsidescdmx as a proud Silver Sponsor! Catch Bishop Fox consultants all day long, from a hands-on Cloud Pentesting 101 workshop with Juan Jasso, to advanced mobile bypass tactics from @Steeven_Rod17 and @M41w4r31, to real-world intrusion case studies with Abdel…

LLMs don’t break like apps. They break like people. Join us July 16 for "Breaking AI" and learn how to pen test LLMs using behavioral manipulation, not payloads. Save your seat: bfx.social/464DezR

Tomorrow on Discord: Live demo of Sliver staging & automation. 50+ already registered - save your spot! bfx.social/4kpCIji

.@defcon Black Badge winner and Senior Security Consultant @AletheDenis joins #CybHER for a conversation about her path into cybersecurity, her philosophy on ethical hacking, and how social engineering shaped her career: bfx.social/3IeyQ7o

New from Red Team Practice Director @tetrisguy in @SecurityWeek: "Like Ransoming a Bike" explores why IR plans fail and what actually builds organizational resilience in the face of ransomware. bfx.social/4lH29y1

Gearing up for Sliver 1.6? Next week, Timothy Makram Ghatas digs into the staging & automation tricks that speed up ops in our latest Discord Workshop! Save your seat: bfx.social/44IPpkx #Sliver #C2 #Discord #RedTeam

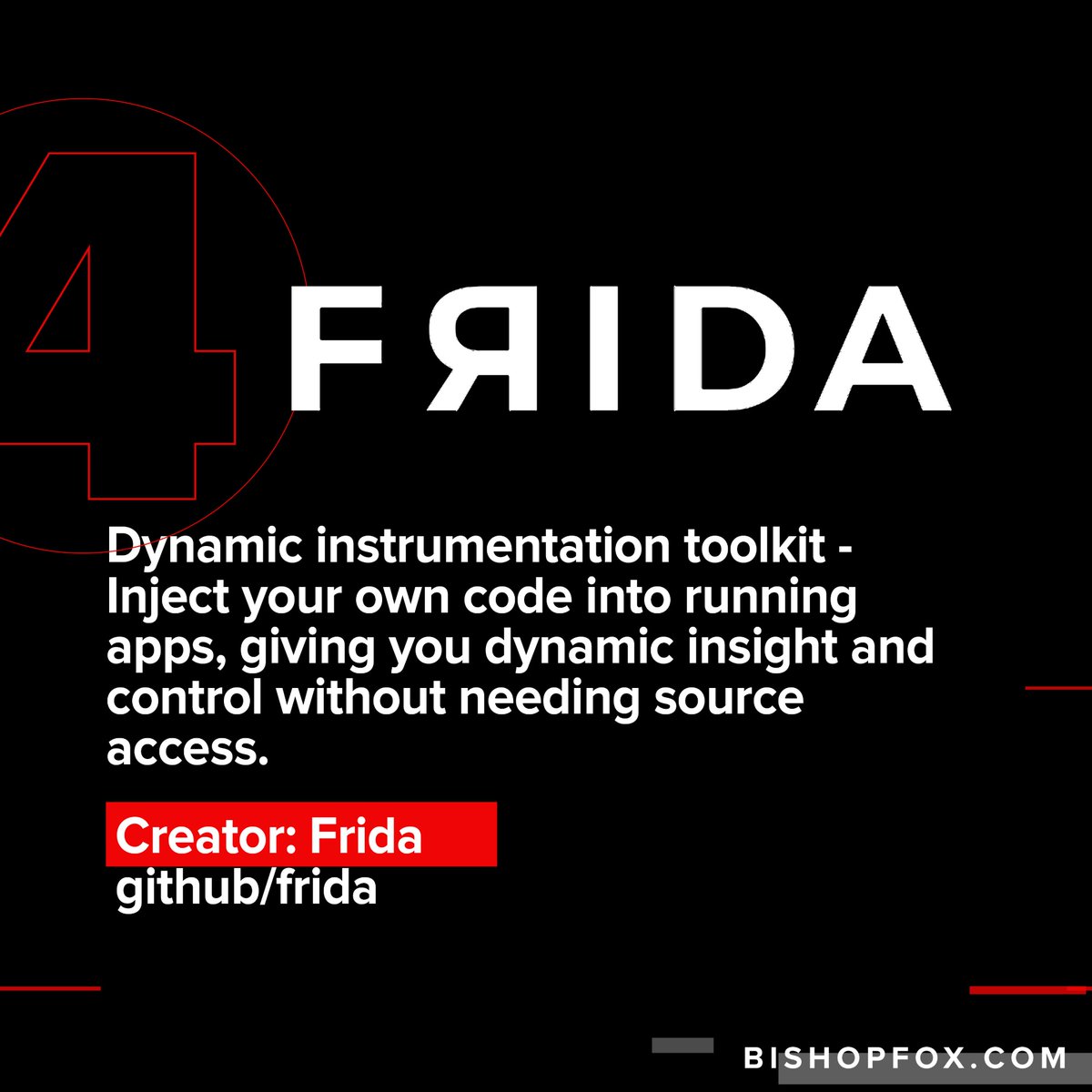

Part 2 of our Red Team Tools series is live, and it’s loaded with tools for evasion, identity exploitation, and dev libraries our Red Teamers rely on in the field. bfx.social/3I9jdOu

Real-world risk ≠ CVSS score. Our latest blog by Nate Robb breaks down how Bishop Fox distinguishes signal from noise in the CVE firehose: bfx.social/3Gq7k6j

A new echo-chamber prompt attack shows LLM guardrails can still be warped. Neural Trust jailbroke GPT & Gemini ~90% of the time using subtle multi-turn cues. Good reminder: context poisoning ≠ solved. Read the Dark Reading write-up: bfx.social/45LsRAP