Binghao Huang

@binghao_huang

CS PhD student @Columbia, advised by @YunzhuLiYZ. Research Intern @Nvidia. Previous MechE @UCSD, advised by @xiaolonw. Embodied AI/Robot Learning.

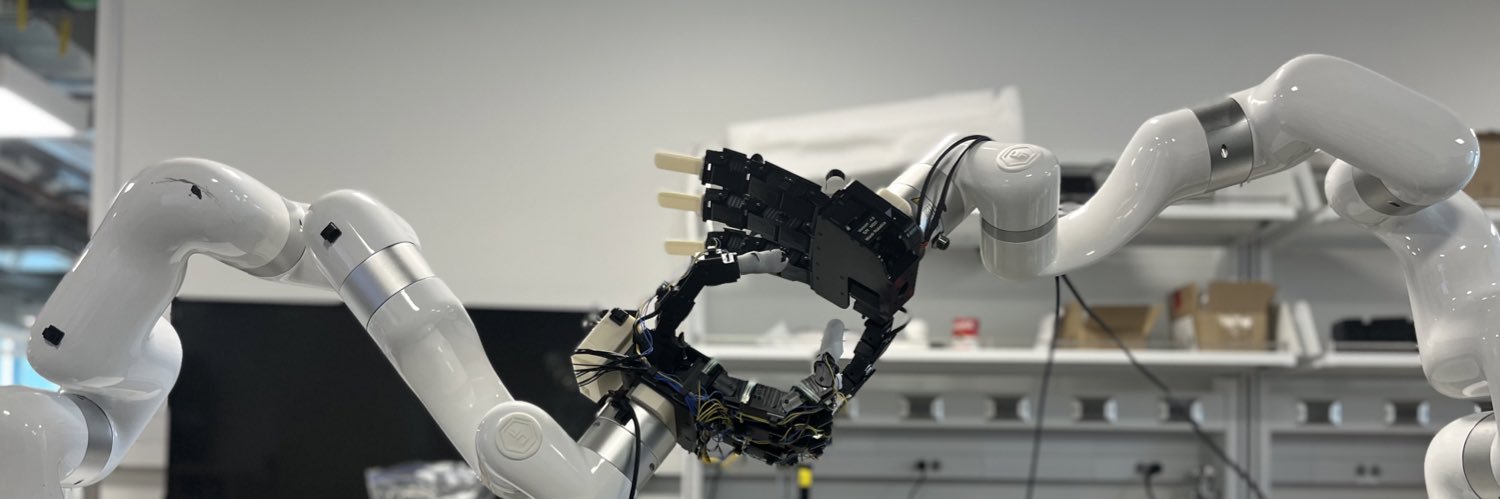

Tactile interaction in the wild can unlock fine-grained manipulation! 🌿🤖✋ We built a portable handheld tactile gripper that enables large-scale visuo-tactile data collection in real-world settings. By pretraining on this data, we bridge vision and touch—allowing robots to:…

🚀 Introducing RIGVid: Robots Imitating Generated Videos! Robots can now perform complex tasks—pouring, wiping, mixing—just by imitating generated videos, purely zero-shot! No teleop. No OpenX/DROID/Ego4D. No videos of human demonstrations. Only AI generated video demos 🧵👇

How to generate billion-scale manipulation demonstrations easily? Let us leverage generative models! 🤖✨ We introduce Dex1B, a framework that generates 1 BILLION diverse dexterous hand demonstrations for both grasping 🖐️ and articulation 💻 tasks using a simple C-VAE model.

Don’t miss this! 🔥 Submit your latest work on dexterous manipulation to our CoRL 2025 workshop ✋🤖 Amazing talks, great community—and a chance to win a LEAP Hand or cash prize! 📅 Deadline: Aug 20 🔗 dex-manipulation.github.io/corl2025/ #CoRL2025 #DexterousManipulation #RobotLearning

📢 Call for Papers: 4th Workshop on Dexterous Manipulation at CoRL 2025! Submit your dexterous work and come participate in our workshop! ✋🤖 📅Deadline: Aug 20 dex-manipulation.github.io/corl2025/ There will be a cash prize sponsored by Dexmate or a LEAP Hand up for grabs! 😃

I was really impressed by the UMI gripper (@chichengcc et al.), but a key limitation is that **force-related data wasn’t captured**: humans feel haptic feedback through the mechanical springs, but the robot couldn’t leverage that info, limiting the data’s value for fine-grained…

Appreciate you sharing this, Ilir! Nicely summarized.

What if robots could actually feel what they touch, and get things right... even when vision fails? [📍Bookmark paper & code for later] Robots usually struggle with delicate tasks like inserting test tubes or transferring fluids. This new handheld gripper might change that. A…

Check out @binghao_huang's great work on scaling up tactile interaction in the wild!

You can find Chipotle in the method figure

It is soooooo awesome to see UMI + Tactile come to life! I am very impressed by how quickly the whole hardware + software system was built. Meanwhile, they even collected lots of data in the wild! Very amazing work!!!

We acknowledge the concurrent work ViTaMIn (chuanyune.github.io/ViTaMIn_page/), which also proposes a portable visuo-tactile data collection system and a cross-modal learning framework for contact-rich manipulation. Both works share high-level goals, but the system design,…

6/21-6/25 RSS @ LA! Happy to meet up and chat if you are around!

Great work, Venkatesh! I love this structural design. Would be happy to give it a try in the future!

Making touch sensors has never been easier! Excited to present eFlesh, a 3D printable tactile sensor that aims to democratize robotic touch. All you need to make your own eFlesh is a 3D printer, some magnets and a magnetometer. See thread 👇 and visit e-flesh.com

🔥🔥🔥

Can we learn a 3D world model that predicts object dynamics directly from videos? Introducing Particle-Grid Neural Dynamics: a learning-based simulator for deformable objects that trains from real-world videos. Website: kywind.github.io/pgnd ArXiv: arxiv.org/abs/2506.15680…

I like this system design! Using active perception in complex bimanual tasks is a cool novelty. Great work, Haoyu!

Your bimanual manipulators might need a Robot Neck 🤖🦒 Introducing Vision in Action: Learning Active Perception from Human Demonstrations ViA learns task-specific, active perceptual strategies—such as searching, tracking, and focusing—directly from human demos, enabling robust…