Behrad Moniri

@bemoniri

Working on the foundations of machine learning @Penn. QR Intern @ Cubist Systematic Strategies (Point72). Previously studied EE @ Sharif in Iran.

Accepted at #icml2025!

Check out our recent paper on layer-wise preconditioning methods for optimization and feature learning theory:

Deep learning theorists study simple NNs (e.g., two-layer models or linear networks) in idealized settings (e.g., isotropic inputs). There are very nice results showing that SGD can learn "good" features in these settings.

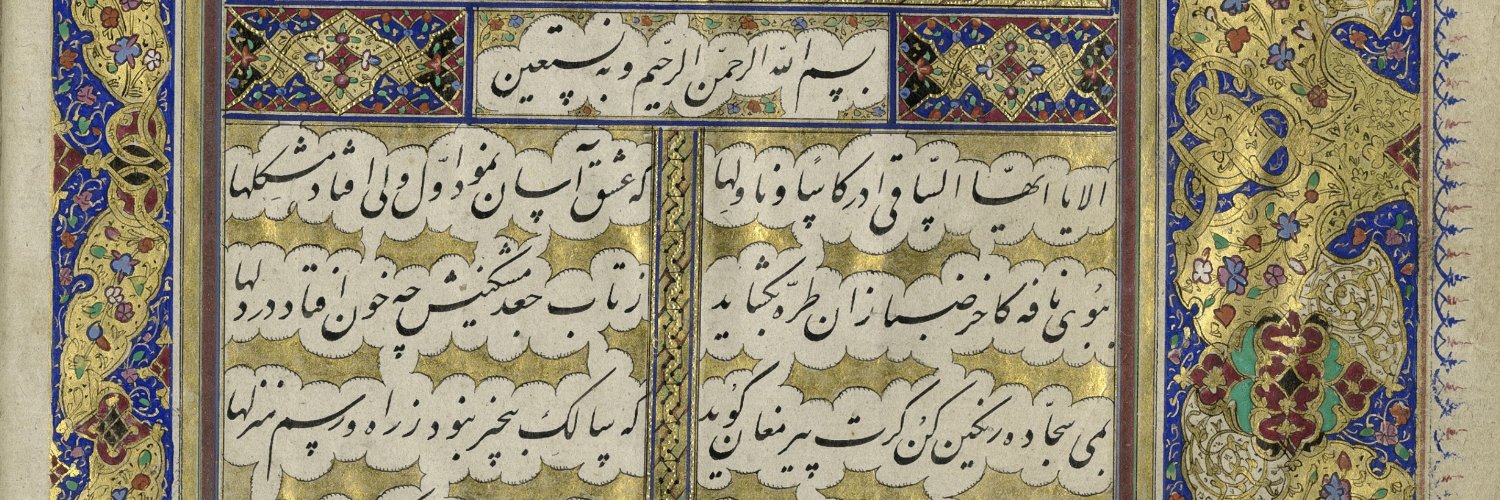

"From the demon-natured rival, I take refuge in my God / ..." - Hafez ganjoor.net/hafez/ghazal/s…

👀 Cool recent work on uncertainty quantification of LLMs.

How can we quantify uncertainty in LLMs from only a few sampled outputs? The key lies in the classical problem of missing mass—the probability of unseen outputs. This perspective offers a principled foundation for conformal prediction in query-only settings like LLMs.
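For readers unfamiliar with missing mass, a minimal sketch of one classical estimator, Good-Turing, is shown below: it estimates the probability of unseen outcomes as the fraction of samples whose value was observed exactly once. This is a textbook illustration only and is not necessarily the estimator used in the work mentioned above; the function name and the sample answers are hypothetical.

```python
from collections import Counter


def good_turing_missing_mass(samples):
    """Good-Turing estimate of the total probability of outcomes not seen in `samples`.

    The estimate is N1 / n, where N1 is the number of distinct outcomes
    observed exactly once and n is the total number of samples.
    """
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(samples)
    singletons = sum(1 for c in counts.values() if c == 1)
    return singletons / len(samples)


# Hypothetical data: 10 answers sampled from a model for a single query.
answers = ["Paris"] * 6 + ["Lyon"] * 2 + ["Nice", "Lille"]
estimate = good_turing_missing_mass(answers)
print(f"Estimated missing mass: {estimate:.2f}")
```

With few samples the estimate is noisy, which is part of why the few-sample regime needs careful treatment.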

On the Mechanisms of Weak-to-Strong Generalization: A Theoretical Perspective. arxiv.org/abs/2505.18346

I was really grateful to have the chance to speak at @Cohere_Labs and @ml_collective last week. My goal was to make the most helpful talk that I could have seen as a first-year grad student interested in neural network optimization. Sharing some info about the talk here... (1/6)

Super excited to announce our ICML workshop highlighting the power (and limitations?) of small-scale work in the era of large-scale ML. You can submit just a Jupyter notebook, a Jupyter notebook + paper, or a survey/position paper. Do submit your work and help us spread the word!

Announcing the 1st Workshop on Methods and Opportunities at Small Scale (MOSS) at @icmlconf 2025! 🔗 Website: sites.google.com/view/moss2025 📝 We welcome submissions! 📅 Paper & Jupyter notebook deadline: May 22, 2025. Topics: – Inductive biases & generalization – Training…

I am hugely indebted to Prof. Strogatz. His book on Nonlinear Dynamics and Chaos literally changed my life in undergrad. Otherwise I would have been doing electromagnetic theory (although he doesn't even know me).

Congratulations Steven Strogatz of @Cornell, newly inducted #NASmember! #NAS162 #mathematics

Cool software package by Subramonian and @dohmatobelvis for free-probability computations in ML theory.

auto-fpt: Automating Free Probability Theory Calculations for Machine Learning Theory. arxiv.org/abs/2504.10754

With $840K in funding from @awscloud, @PennAsset is supporting 12 Ph.D. students conducting cutting-edge research in AI safety, robustness and interpretability. bit.ly/422Nfeo #AIMonth2025 #TrustworthyAI

Circles of Hell: Circle 8: "Thanks for the response. My concerns are addressed. I wish to keep my score." Circle 9: "Acknowledgement: I confirm that I have read the author response to my review and will update my review in light of this response as necessary."

On The Concurrence of Layer-wise Preconditioning Methods and Provable Feature Learning ift.tt/LvMb2yw

A new generation of jailbreaks is rolling out from our team at @robusthq, in collaboration with @PennEngineers. We jailbreak the @deepseek_ai R1 model with a 100% attack success rate. To learn more, see our blog post on @CiscoSecure and the corresponding @WIRED article. amazing…