Aviral Kumar

@aviral_kumar2

Assistant Professor of CS & ML at @CarnegieMellon. Part-time Research Scientist at Google. PhD from UC Berkeley.

Since R1 there has been a lot of chatter 💬 on post-training LLMs with RL. Is RL only sharpening the distribution over correct responses sampled by the pretrained LLM OR is it exploring and discovering new strategies 🤔? Find answers in our latest post ⬇️ tinyurl.com/rlshadis

Don't forget to check out @QuYuxiao & @matthewyryang's poster on dense rewards for test-time scaling & why it matters, today at 11 am in East Exhibition Hall, poster E-2712.

Heading to @icmlconf #ICML2025 this week! DM me if you’d like to chat ☕️ Come by our poster sessions on:
🧠 Optimizing Test-Time Compute via Meta Reinforcement Fine-Tuning (arxiv.org/abs/2503.07572)
🔍 Learning to Discover Abstractions for LLM Reasoning (drive.google.com/file/d/1Sfafrk…)

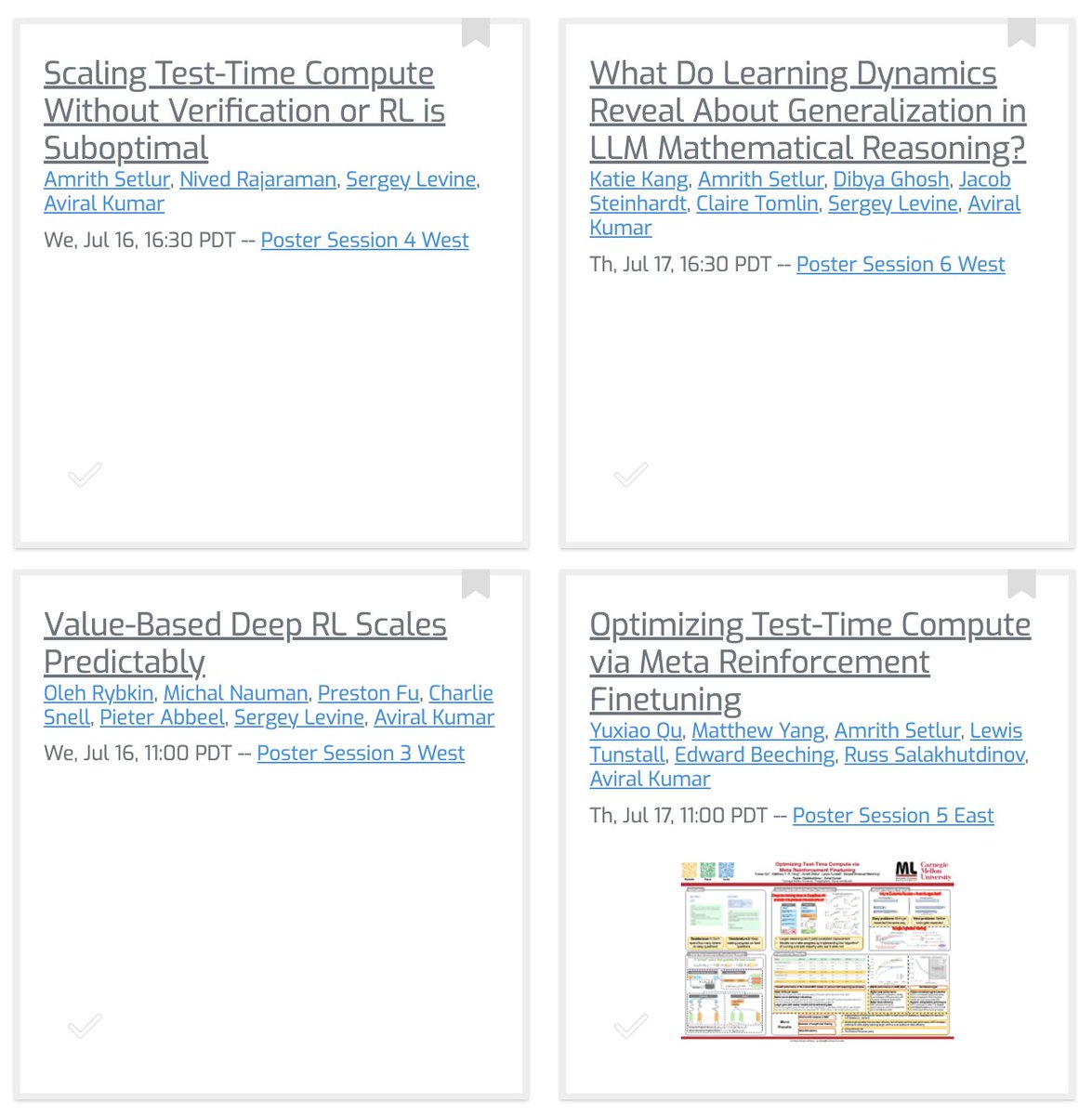

If you are at #icml25 and are interested in RL algorithms, scaling laws for RL, and test-time scaling (& related stuff), come talk to us at various poster sessions (details ⬇️). We are also presenting some things at workshops later in the week, more on that later.

Check out these awesome new real-robot online RL fine-tuning results that @andy_peng05 and @zhiyuan_zhou_ got with our WSRL method. WSRL appeared at ICLR earlier this year -- check this out for more details: zhouzypaul.github.io/wsrl/ 👇

We tested WSRL (Warm-start RL) on a Franka Robot, and it leads to really efficient online RL fine-tuning in the real world! WSRL learned the peg insertion task perfectly with only 11 minutes of warmup and *7 minutes* of online RL interactions 👇🧵

🚨 NEW PAPER: What if LLMs could tackle harder problems - not by explicitly training on longer traces, but by learning how to think longer? Our recipe e3 teaches models to explore in-context, enabling LLMs to unlock longer reasoning chains without ever seeing them in training.…

Introducing e3 🔥 Best <2B model on math 💪 Are LLMs implementing algos ⚒️ OR is thinking an illusion 🎩? Is RL only sharpening the base LLM distrib. 🤔 OR discovering novel strategies outside base LLM 💡? We answer these ⤵️ 🚨 arxiv.org/abs/2506.09026 🚨 matthewyryang.github.io/e3/