Arcee.ai

@arcee_ai

Optimize cost & performance with AI platforms powered by our industry-leading SLMs: Arcee Conductor for model routing, & Arcee Orchestra for agentic workflows.

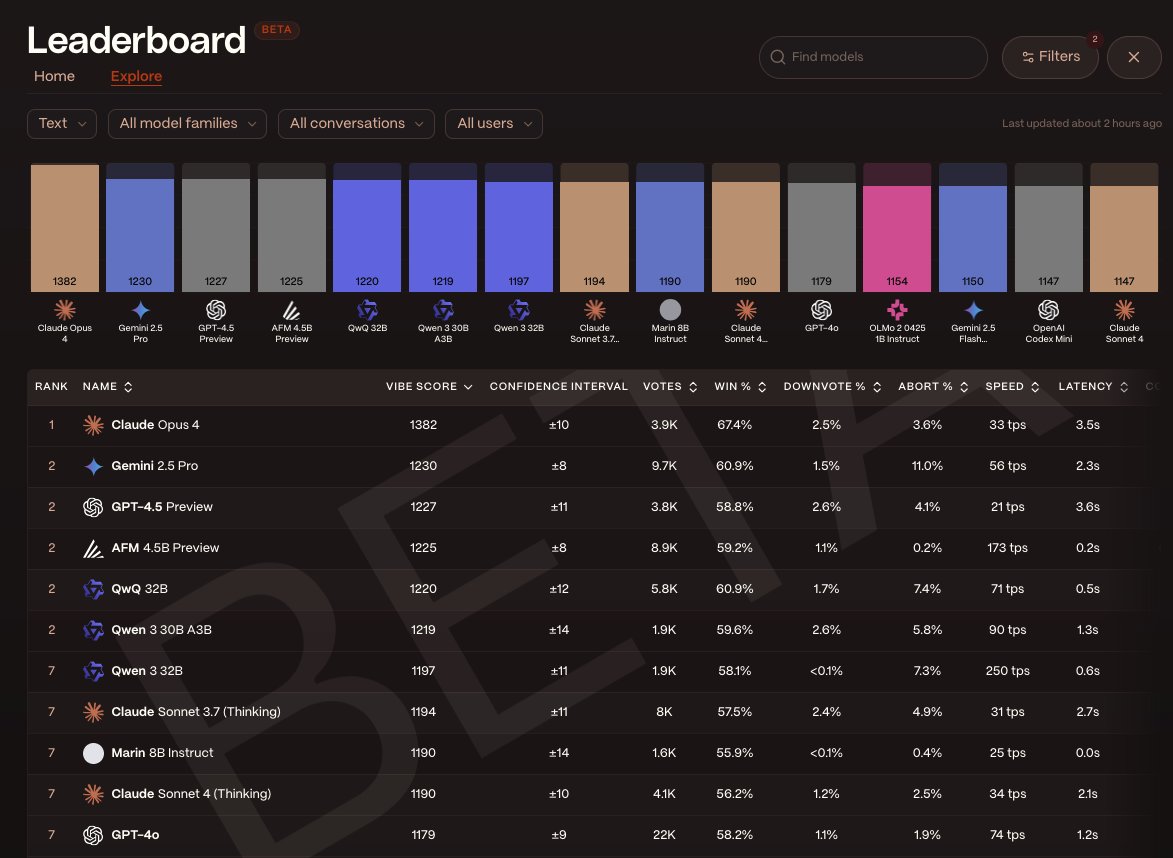

We knew it all along, but it's great to get user validation: through real-user usage and evaluation, several of our small models (Maestro, Coder, and AFM-4.5B-Preview) are topping the charts on @yupp_ai, the only platform that determines AI quality through real-user pairwise…

As generative AI becomes increasingly central to business applications, the cost, complexity, and privacy concerns associated with language models are becoming significant. At @arcee_ai, we’ve been asking a critical question: Can CPUs actually handle the demands of language…

In this fun demonstration, you can witness the impressive capabilities of @arcee_ai AFM-4.5B-Preview, Arcee's first foundation model, across diverse domains. The demo showcases the model tackling complex knowledge questions, creating sophisticated creative writing, and…

In this new video, I introduce two new research-oriented models that @arcee_ai recently released on @huggingface. Homunculus is a 12-billion-parameter instruction model distilled from Qwen3-235B onto the Mistral AI Nemo backbone. It was purpose-built to preserve Qwen’s…

In this video, I introduce and demonstrate three production-grade models that @arcee_ai recently opened and released on @huggingface. Arcee-SuperNova-v1 (70B) is a merged model built from multiple advanced training approaches. At its core is a distilled version of…

Save the date! A week from now, please join us live to discover how @Zerve_AI is leveraging our model routing solution, Arcee Conductor, to improve its agentic platform for data science workflows. This should be a super interesting discussion, and of course, we'll do demos!…

Today, we're happy to announce the open-weights release of five language models, including three enterprise-grade production models that have been powering customer workloads through our SaaS platform (SuperNova, Virtuoso-Large, Caller), and two cutting-edge research models…

Today, we're excited to announce the integration of @arcee_ai Conductor, our SLM/LLM model routing solution, into the @Zerve_AI platform, an agent-driven operating system for Data & AI teams 😃 This collaboration enables data scientists, engineers, and AI developers to build,…

We’re beyond thrilled to share that Arcee AI Conductor has been named “LLM Application of the Year” at the 2025 AI Breakthrough Awards. This recognition isn’t just a shiny badge—it’s a celebration of a vision we’ve been chasing for years: making AI smarter, more accessible, and…

“First of many blogs” from Arcee. AFM-4.5B scaled from 4K → 64K context. ⮕ arcee.ai/blog/extending… JUST MERGE, DISTILL, REPEAT. Proof it scales: Same merge–distill cycle applied to GLM-4-32B. Fixes 8K degradation in the 0414 release. +5% overall, strong…

In this post, Mariam Jabara, one of our Field Engineers, walks you through three real-life use cases for model merging, recently published in research papers: ➡️ Model Merging in Pre-training of Large Language Models ➡️ PatientDx: Merging Large Language Models for Protecting…

Congratulations to the @datologyai team on powering the data for AFM-4.5B by @arcee_ai - competitive with Qwen3 - using way way less data! This is exactly why I'm so excited to be joining @datologyai this summer to push the frontier of data curation 🚀

Congrats to @LucasAtkins7 and @arcee_ai on a fantastic model release! DatologyAI powers the data behind AFM-4.5B, and we're just getting started.

Last week, we launched AFM-4.5B, our first foundation model. In this post by @chargoddard, you will learn how we extended the context length of AFM-4.5B from 4k to 64k through aggressive experimentation, model merging, distillation, and a concerning amount of soup. Bon…

this release is pure class. arcee using their data to do some short-term continued pretraining on GLM 32b. long context support has gone from effectively 8k -> 32k, and all base model evaluations (including short context ones) have improved

You can't overstate how impressive this is. Arcee took one of the strongest base models, GLM-4, a product of many years of Tsinghua R&D (THUDM/THUKEG/Z.ai, GLM-130B was maybe *the first* real open weights attack on OpenAI, Oct 2022)… and made it plain better. And told us how.

Our first foundation model, AFM-4.5B, is not even 24 hours old, and our users are already going wild. "Don't sleep on Arcee" seems to be the motto. We love that, because we haven't slept much lately 😃 You can try the model in our playground (afm.arcee.ai/#Chat-UI) and on…