Anmol Kabra

@anmolkabra

ML PhD at @Cornell: LLM reasoning, agents, and AI for Science. Previously at @business, @TTIC_Connect, and @ASAPP.

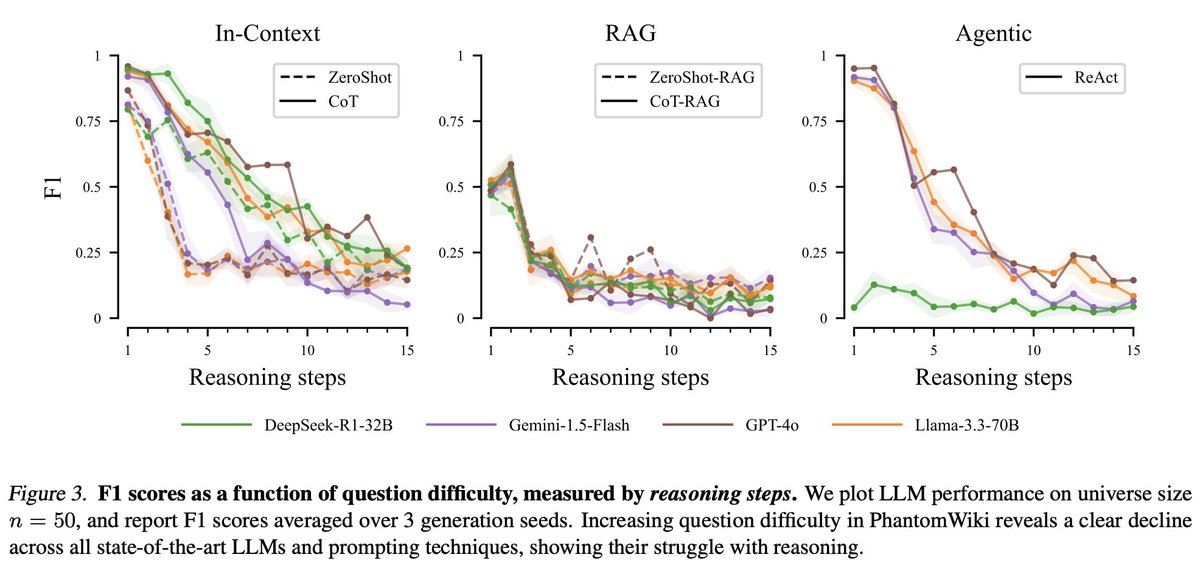

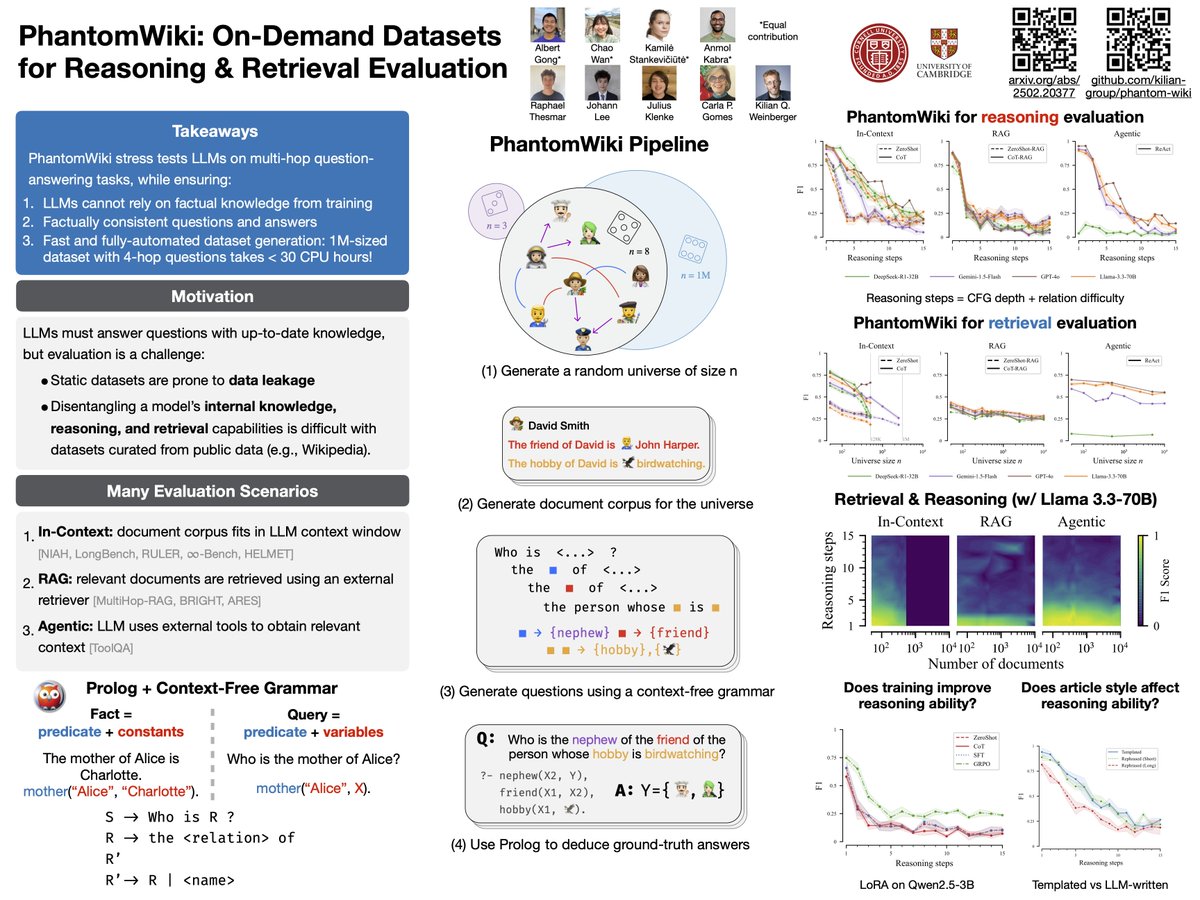

🚀 📢 Releasing PhantomWiki, a reasoning + retrieval benchmark for LLM agents! If I asked you "Who is the friend of father of mother of Tom?", you'd simply look up Tom -> mother -> father -> friend and answer. 🤯 SOTA LLMs, even DeepSeek-R1, struggle with such simple reasoning!
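The lookup described above is a chain of relation hops. Below is a minimal sketch over a hypothetical toy universe. The names, the dict-of-relations layout, and the `resolve_chain` helper are invented for illustration and are not PhantomWiki's actual data format or API. Each hop may return several people (someone can have many friends), so the sketch tracks a set of candidates at every step.

```python
# Hypothetical toy universe: each person maps relation names to lists of people.
# Invented for illustration; this is not PhantomWiki's data format.
UNIVERSE = {
    "Tom": {"mother": ["Anna"]},
    "Anna": {"father": ["Bruno"]},
    "Bruno": {"friend": ["Carla", "Dev"]},
}


def resolve_chain(start, relations, universe):
    """Follow a chain of relations hop by hop, e.g. mother -> father -> friend.

    A hop can yield several people, so we keep a set of current people
    and expand every one of them at each step.
    """
    current = {start}
    for relation in relations:
        next_people = set()
        for person in current:
            next_people.update(universe.get(person, {}).get(relation, []))
        current = next_people
    return current


# "Who is the friend of father of mother of Tom?" is read right to left:
# Tom's mother first, then her father, then his friends.
answers = resolve_chain("Tom", ["mother", "father", "friend"], UNIVERSE)
print(sorted(answers))  # ['Carla', 'Dev']
```

Each extra hop is just one more relation in the list, which is why this kind of question is easy for a person with a lookup table, even when the chain gets long.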

Presenting PhantomWiki with Albert and Johann at #ICML2025 on Tuesday at 11am, plus an oral talk at the Long Context Workshop on Saturday! Come say hi and chat about LLM reasoning and retrieval evaluation! icml.cc/virtual/2025/p…

🚀Excited to share our latest work: LLMs entangle language and knowledge, making it hard to verify or update facts. We introduce LMLM 🐑🧠 — a new class of models that externalize factual knowledge into a database and learn during pretraining when and how to retrieve facts…

🎉 PhantomWiki has been accepted to the @iclr_conf DATA-FM workshop! Come chat with us in Singapore 🦁 🧠 The reasoning + retrieval benchmark comes right on the heels of the new @RealAAAI presidential report, which puts AI reasoning and agents research front and center!

Everyone's excited about reasoning, but how can we evaluate it? Check out this cool work from our group 👀 It's super easy to use (trust me, I'm using it now!)

PhantomWiki generates datasets of wiki pages and reasoning questions about a universe of people, at the scale of Wikipedia 🌐 🚨 The people and their relationships are generated randomly, so by construction LLMs cannot memorize or cheat their way through PhantomWiki evaluations.
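To make the "generated randomly, so it can't be memorized" idea concrete, here is a minimal sketch under stated assumptions. It is not PhantomWiki's generator: the name pool, the friendship-only relation, the page template, and the helpers `generate_universe`, `render_page`, and `make_question` are all hypothetical. A seeded random generator builds a fresh set of people and symmetric friendships, renders a short wiki-style page per person, and asks a two-hop question whose answer is computed directly from the generated graph.

```python
import random

# Hypothetical name pool; PhantomWiki's actual generator is not reproduced here.
FIRST_NAMES = ["Alia", "Bram", "Cleo", "Dario", "Esme", "Fenn", "Gia", "Hugo"]


def generate_universe(num_people, seed):
    """Randomly create people and symmetric friendships from a seed."""
    rng = random.Random(seed)
    # Append an index so every name is unique within the universe.
    people = [f"{rng.choice(FIRST_NAMES)} {i}" for i in range(num_people)]
    friends = {p: set() for p in people}
    for p in people:
        for q in rng.sample(people, k=2):
            if q != p:  # no self-friendships
                friends[p].add(q)
                friends[q].add(p)
    return friends


def render_page(person, friends):
    """Render a short wiki-style article for one person."""
    names = ", ".join(sorted(friends[person])) or "nobody"
    return f"{person} is a person. The friends of {person} are {names}."


def make_question(friends, rng):
    """Pick a random person, ask a two-hop question, and compute the answer."""
    person = rng.choice(sorted(friends))
    answer = set()
    for f in friends[person]:
        answer |= friends[f]
    return f"Who is the friend of the friend of {person}?", answer


universe = generate_universe(8, seed=1)
question, answer = make_question(universe, random.Random(0))
print(question)
print(sorted(answer))
```

The same seed reproduces the same universe for fair comparison across models, while a new seed yields people and relationships that have never appeared in any training corpus, so the answer must be found by reading the generated pages rather than recalled.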