Anand Bhattad

@anand_bhattad

Incoming Assistant Professor @JHUCompSci, @HopkinsDSAI | RAP @TTIC_Connect | PhD @SiebelSchool | UG @surathkal_nitk | Exploring Knowledge in Generative Models

I’m thrilled to share that I will be joining Johns Hopkins University’s Department of Computer Science (@JHUCompSci, @HopkinsDSAI) as an Assistant Professor this fall.

🚀 Introducing RIGVid: Robots Imitating Generated Videos! Robots can now perform complex tasks—pouring, wiping, mixing—just by imitating generated videos, purely zero-shot! No teleop. No OpenX/DROID/Ego4D. No videos of human demonstrations. Only AI generated video demos 🧵👇

Research arc: ⏪ 2 yrs ago, we introduced VRB: learning from hours of human videos to cut down teleop (Gibson🙏) ▶️ Today, we explore a wilder path: robots deployed with no teleop, no human demos, no affordances. Just raw video generation magic 🙏 Day 1 of faculty life done! 😉…

Welcome to JHU CS! 💙

🥳 Excited to share that I’ll be joining the CS Department at UNC-Chapel Hill (@unccs @unc_ai_group) as an Assistant Professor starting Fall 2026! Before that, I’ll be working at Ai2 Prior (@allen_ai @Ai2Prior) and UW (@uwcse) on multimodal understanding and generation.

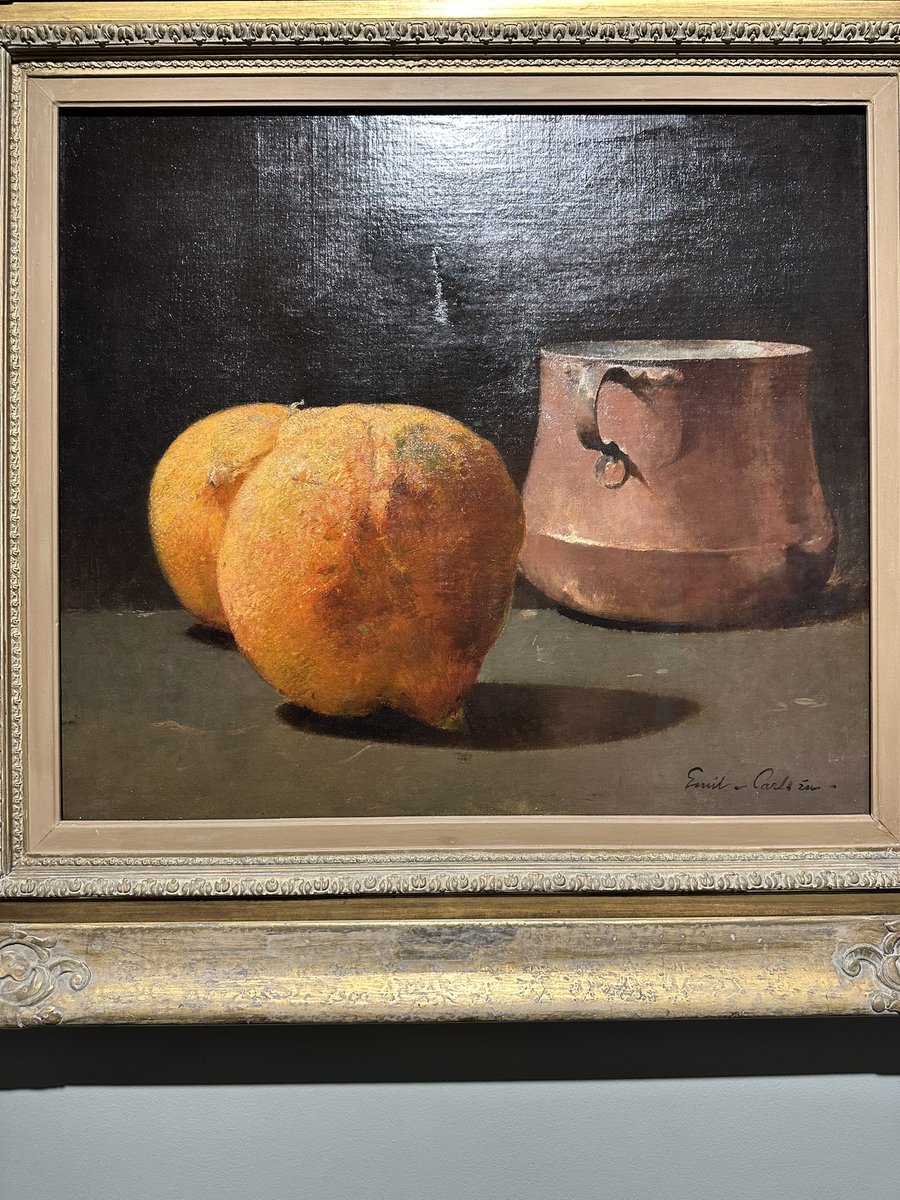

Excellent material for a computer vision class (compute optical flow, human pose, focus of expansion, ...) @CSProfKGD @anand_bhattad. All videos are downloadable. nytimes.com/interactive/20…

All slides from the #cvpr2025 (@CVPR) workshop "How to Stand Out in the Crowd?" are now available on our website: sites.google.com/view/standoutc…

In this #CVPR2025 edition of our community-building workshop series, we focus on supporting the growth of early-career researchers. Join us tomorrow (Jun 11) at 12:45 PM in Room 209. Schedule: sites.google.com/view/standoutc… We have an exciting lineup of invited talks and candid…

🌟 Excited to share that our paper, “Zero Shot Depth-Aware Image Editing with Diffusion Models”, has been accepted at #ICCV2025!! Paper: rishubhpar.github.io/DAEdit/main_pa… 🙌🏻 Kudos to my amazing collaborators, @sachidanand8036 and @rvbabuiisc. Stay tuned for more details!

We’re proud to announce three new tenure-track assistant professors joining TTIC in Fall 2026: Yossi Gandelsman (@YGandelsman), Will Merrill (@lambdaviking), and Nick Tomlin (@NickATomlin). Meet them here: buff.ly/JH1DFtT

What would a World Model look like if we start from a real embodied agent acting in the real world? It has to have: 1) A real, physically grounded and complex action space—not just abstract control signals. 2) Diverse, real-life scenarios and activities. Or in short: It has to…

Why More Researchers Should be Content Creators. Just trying something new! I recorded one of my recent talks, sharing what I learned from starting as a small content creator. youtu.be/0W_7tJtGcMI We all benefit when there are more content creators!

#CVPR2025 is a wrap! Amazing contributions on lighting-related tasks this year. Now it’s time to look ahead to #ICCV2025 in beautiful Hawai’i. Let’s keep the momentum going: join us to explore and discuss how to evaluate lighting across diverse tasks!

Lighting hasn’t received as much attention as 3D geometry in the vision community, but that’s changing. A wave of exciting papers in recent venues (#ICLR2025, #SIGGRAPH2025 and #CVPR2025) reflects growing momentum, and the rise is just beginning. But before the field scales…

🚀 Excited to introduce SimWorld: an embodied simulator for infinite photorealistic world generation 🏙️ populated with diverse agents 🤖 If you are at #CVPR2025, come check out the live demo 👇 Jun 14, 12:00-1:00 pm at JHU booth, ExHall B; Jun 15, 10:30 am-12:30 pm, #7, ExHall B

Highly recommend checking out the Parthenon if you’re in Nashville for #CVPR2025. It’s an exact 1:1 replica of the original in Athens.

Ready to go!! Poster #26 at #CVPR2025. Sadly, @RealXiaoyanXing’s visitor visa was canceled last minute, and he couldn’t make it. This work is a result of his hard work and effort. Code: github.com/xyxingx/LumiNet Project: luminet-relight.github.io Paper: arxiv.org/abs/2412.00177

[1/7] I'm super stoked to share LumiNet! 🎉 💡LumiNet combines our latent intrinsic image representations with a powerful generative image model. It lets us relight complex indoor images by transferring lighting from one image to another! Most importantly, it is completely…

Latent Intrinsics are powerful representations. When smartly combined with diffusion-based generators, they enable compelling image relighting without any labeled data or semantics. Catch our LumiNet poster tomorrow (Jun 13) at #CVPR2025 in the first poster session at 10:30 AM.

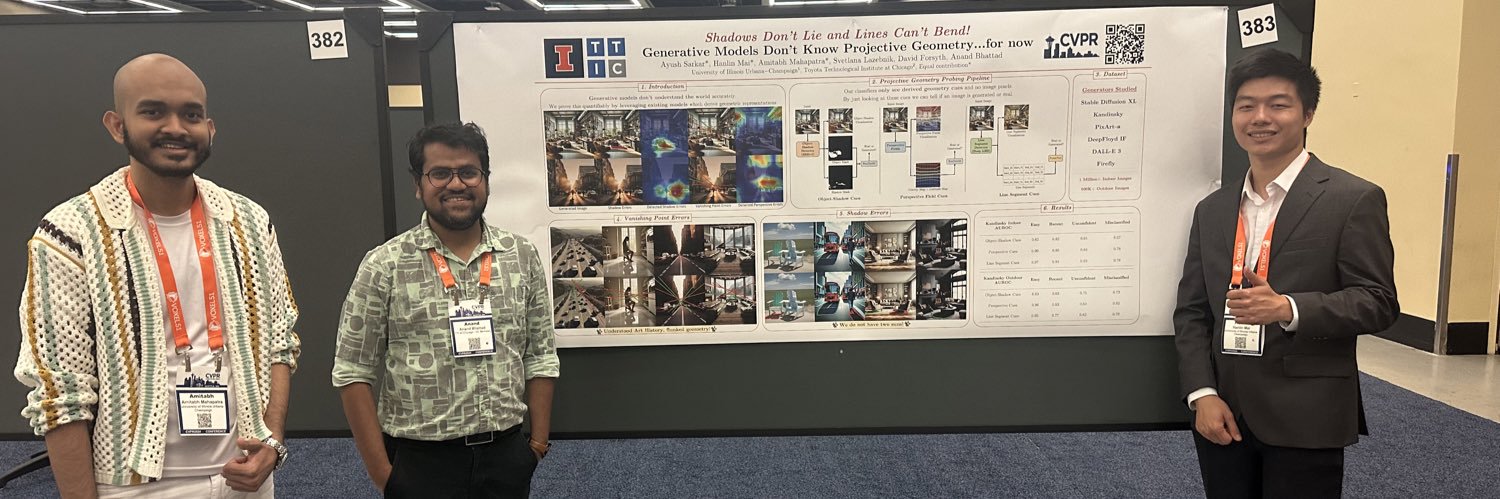

My amazing PhD advisor kicked things off with a roaring “Generators are DUMB!” Talking about some of Vaibhav’s exciting PhD work, and a project we’ve been quietly working on for over two years now! #CVPR2025

And finally, talk by the great David Forsyth!

Talk by @KostasPenn happening now!

🔍 3D is not just pixels—we care about geometry, physics, topology, and functions. But how to balance these inductive biases with scalable learning? 👀 Join us at Ind3D workshop @CVPR (June 12, afternoon) for discussions on the future of 3D models! 🌐 ind3dworkshop.github.io/cvpr2025