Alex Rutherford

@alexrutherford0

PhD in AI & 🤖 @GOALS_oxford @FLAIR_Ox | also @TolkienLecture 🧙♂️ | he/him | 🦋amacrutherford

1/ 🕵️ Algorithm discovery could lead to huge AI breakthroughs! But what is the best way to learn or discover new algorithms? I'm so excited to share our brand new @rl_conference paper which takes a step towards answering this! 🧵

Excited to announce my first paper, with @j_foerst and @FLAIR_Ox, was accepted into @rl_conference 2025! We introduce a new UED method called NCC that obtains strong performance based on principles of optimisation theory.

✨ Tickets are out now for the 2025 Tolkien Lecture, delivered by Zen Cho (@zenaldehyde)! 🎟️ Visit Eventbrite to reserve your seat: tinyurl.com/2025TolkienLec…. 🐈⬛ The lecture will be held on Monday 19 May at 6:00 pm, in the Pichette Auditorium, Pembroke College, Oxford.

🧚🏼♂️We are thrilled to announce that the 2025 J.R.R. Tolkien Lecture on Fantasy Literature will be delivered by Zen Cho (@zenaldehyde)! 🧙 The lecture will take place on Monday 19 May at 6:00 pm, in the Pichette Auditorium, Pembroke College, Oxford (@PembrokeOxford)

It's great to see people starting to explore automatic curriculum learning for LLMs. There's a lot of untapped potential here for teaching agents new skills without affecting their other benchmark scores. Syllabus has an implementation of SFL if you want to try it out!

Learning to Reason at the Frontier of Learnability "we adapt a method from the reinforcement learning literature—sampling for learnability—and apply it to the reinforcement learning stage of LLM training. Our curriculum prioritises questions with high variance of success, i.e.…

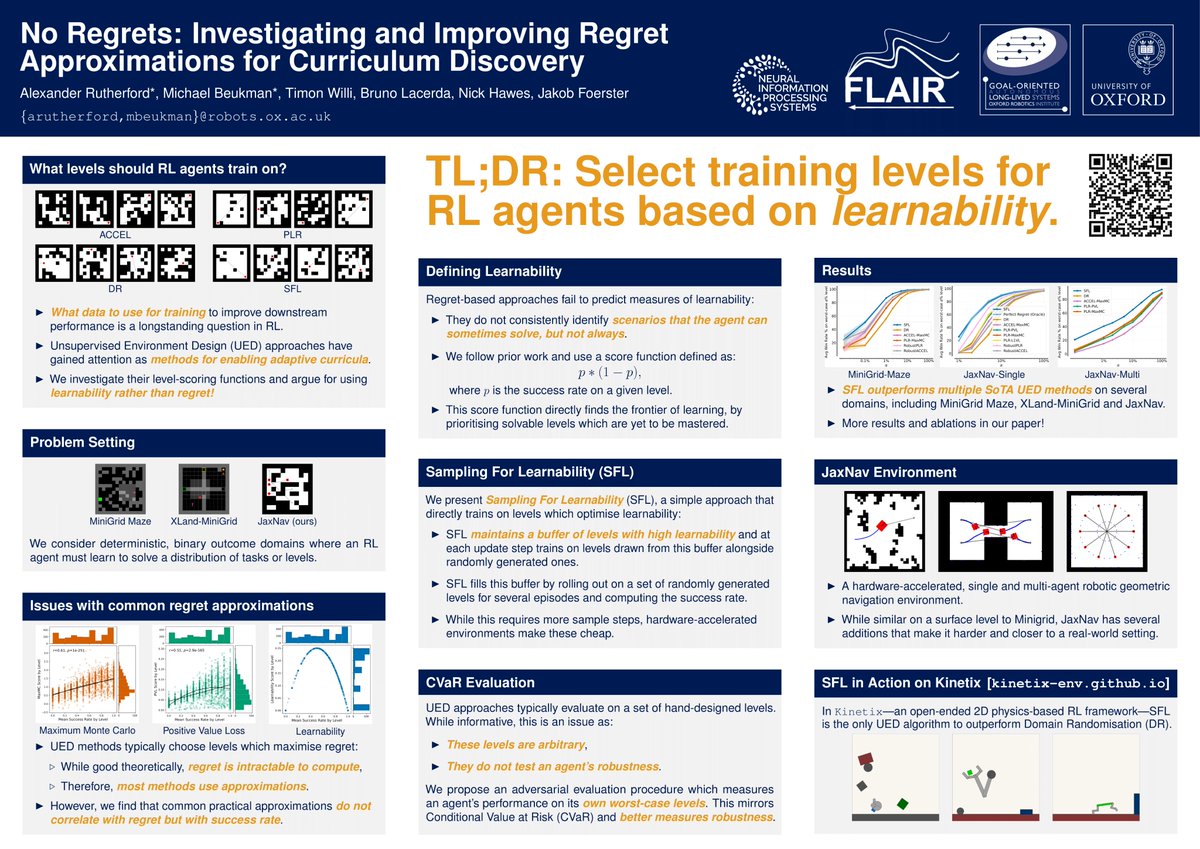
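The quoted work prioritises questions with high variance of success. For a pass/fail outcome with success rate p, that variance is p(1 - p): it is zero for questions the agent always or never solves and peaks at p = 0.5. Below is a minimal sketch of scoring and selecting tasks this way, assuming success rates are estimated from a few rollouts per task. The helper names and the simple top-k rule are hypothetical illustrations, not the API of Syllabus or the exact sampling scheme from either paper.

```python
def learnability(successes: list[int]) -> float:
    """Bernoulli variance p * (1 - p), with p estimated from 0/1 rollout outcomes."""
    if not successes:
        return 0.0
    p = sum(successes) / len(successes)
    return p * (1.0 - p)


def select_for_learnability(rollouts: dict[str, list[int]], k: int) -> list[str]:
    """Hypothetical helper: return the k tasks with the highest learnability score."""
    ranked = sorted(rollouts, key=lambda task: learnability(rollouts[task]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Toy data: 1 = solved, 0 = failed, over four attempts per question.
    rollouts = {
        "always_solved": [1, 1, 1, 1],  # p = 1.0  -> score 0.0
        "never_solved": [0, 0, 0, 0],   # p = 0.0  -> score 0.0
        "coin_flip": [1, 0, 1, 0],      # p = 0.5  -> score 0.25
        "mostly_solved": [1, 1, 1, 0],  # p = 0.75 -> score 0.1875
    }
    print(select_for_learnability(rollouts, k=2))  # ['coin_flip', 'mostly_solved']
```

Tasks that are already mastered or still out of reach score zero, so the curriculum spends its budget on questions at the frontier of what the agent can learn.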

Our paper No Regrets, and some other great FLAIR work, was featured on @computer_phile! Read more about it here: sampling-for-learnability.github.io

Some time ago @computer_phile came to visit @FLAIR_Ox. I didn't have tons of time to prepare (too busy doing research!), so turning my verbiage into something semi-coherent must have been difficult for the team. Here's the result, so judge for yourself: youtube.com/watch?v=fN3gdU…

I’m pleased to announce our work which studies complexity phase transitions in neural networks! We track the Kolmogorov complexity of networks as they “grok”, and find a characteristic rise and fall of complexity, corresponding to memorization followed by generalization. 🧵

I’ll be presenting today in West Ballroom A-D from 11-2, #6301! Come along to talk about OPEN 😊

🇨🇦 I'll be at #NeurIPS2024 next week to present our spotlight paper on learned optimisation for RL! If you're interested in (meta-)RL or the potential of learned algorithms for ML, I'd love to chat! 📨 DM if you'd like to get a coffee!

🔬 FLAIR has a bunch of great papers being presented today at NeurIPS! Come along to learn more about the work! 👀 Keep your eyes peeled for more work being presented over the week!

Come say hi at #NeurIPS today! Morning: 🎓 Sampling for Learnability (SFL) with @mcbeukman, a new approach for UED! Afternoon: ⚡️JaxMARL with @benjamin_ellis3 @_chris_lu_, MARL but super super fast! Both West Ballroom A - D, #6408 and #6407 respectively.

@alexrutherford0 and I will be presenting SFL today at 11:00! Come by our poster, located in West Ballroom A-D #6408, to chat about UED and curricula :)

🔥 OPEN shows that learned optimisation produces huge performance improvements in RL, and can generalise zero-shot (without any hyperparameter tuning)!

I'll also be at NeurIPS, keen to chat about UED, SFL, Kinetix, or anything in open-ended RL :)

Hello! I'll be at NeurIPS next week presenting our work on using learnability to select levels for RL autocurricula. If you're there, I would love to chat about curricula and RL generalisation more broadly. Please DM if you'd like to grab a coffee :)

🎉 Excited to share our paper "The Edge-of-Reach Problem in Offline MBRL" has been accepted to #NeurIPS! 🌟 Looking forward to Vancouver! We reveal why offline MBRL methods work (or fail) and introduce a robust solution: RAVL 🚀 🧵 Let's dive in! [1/N]

Joao Henriques (joao.science) and I are hiring a fully funded PhD student (UK/international) for the FAIR-Oxford program. The student will spend 50% of their time @UniofOxford and 50% @AIatMeta (FAIR), while completing a DPhil (Oxford PhD). Deadline: 2nd of Dec AOE!!

Check out what sampling for learnability can do! If you want to hear more, @mcbeukman and I will be at #NeurIPS next month.

📈 One big takeaway from this work is the importance of autocurricula. In particular, we found significantly improved results by dynamically prioritising levels with high 'learnability'. 7/

We are very excited to announce Kinetix: an open-ended universe of physics-based tasks for RL! We use Kinetix to train a general agent on millions of randomly generated physics problems and show that this agent generalises to unseen handmade environments. 1/🧵