Jason Eisner

@adveisner

Professor of CS at Johns Hopkins University, ACL Fellow. My tweets speak only for me.

Congratulations to all @JohnsHopkins researchers participating in #ICLR2025! Check out all @JohnsHopkins accepted papers, tutorials, and workshops at ai.jhu.edu/news/johns-hop….

Who wants to come to JHU and do a postdoc with me?? I'm always enthusiastic about new modeling / inference / algorithmic ideas in NLP/ML, and also about selected applications.

We’re thrilled to announce the #HopkinsDSAI Postdoctoral Fellowship Program! We’re looking for candidates across all areas of data science and AI, including science, health, medicine, the humanities, engineering, policy, and ethics. Apply today! ai.jhu.edu/postdoctoral-f…

New #ACL2024 paper: LLMs in the Imaginarium: Tool Learning through Simulated Trial and Error (@BoshiWang2's internship work at Microsoft Semantic Machines). I like this work because it drives home an important insight: synthetic data + post-training is critical for agents.…

Thanks @_akhaliq for sharing our work led by @BoshiWang2 from @osunlp, so let's chat about how LLMs should learn to use tools, a necessary capability of language agents. Tools are essential for LLMs to transcend the confines of their static parametric knowledge and…

Great last-minute summer opportunity on LLMs for social good / democracy! (If you're an #NLProc PhD student.) Please retweet. (Team includes @adveisner @DanielKhashabi @ZiangXiao @AndrewJPerrin + students. 8 weeks alongside 3 other teams: fun, meaningful, educational, social.)

WHAT: "AI-Curated Democratic Discourse," a JSALT hackathon team this summer (Jun 10-Aug 2) GOAL: Redesign the social media UI to raise the quality of reading and posting, with the help of LLMs🤯 WHO: Looking for 1 more funded, in-person NLP PhD student! DM me with yr skillz.

Thrilled to (finally) be able to share our paper: 🐑 Do language models know when they're hallucinating? TL;DR answer: yes! 🔗: arxiv.org/abs/2312.17249, and a🧵 w/ Semantic Machines @MSFTResearch: @ben_vandurme & @adveisner & Chris Kedzie

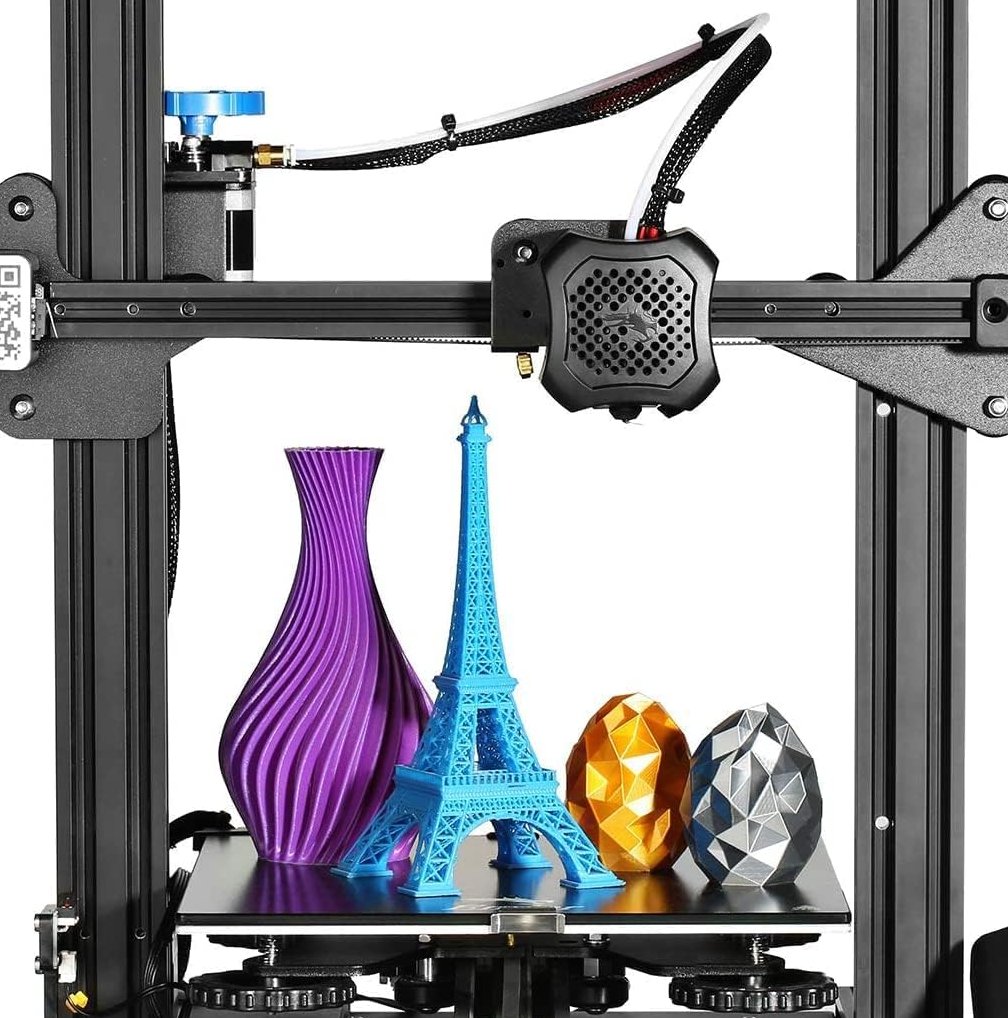

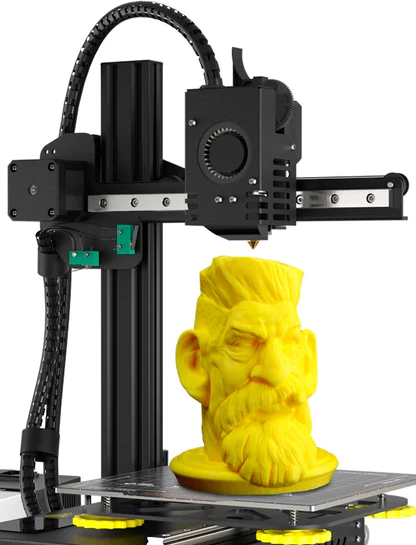

If you can break your AI problem down, an LLM is the 3D printer that can quickly create modules for your subtasks. No module should bear too much load, as they're all made of cheap plastic. But a 3D printer has countless uses. (Our previous 3D printer was MTurk.)

Dear ACL community, ACL is considering multiple proposals to change its anonymity period policy, and it seeks immediate feedback from the community on the proposed changes. Please add your voice by Friday, September 22nd (AOE): aclweb.org/portal/content… #NLProc

Read this whole thread, and apply to join us!

@JohnsHopkins Engineering @HopkinsEngineer is making a massive investment in AI. The plan is so ambitious and unique that it’s worth breaking down the announcement in detail. This will transform the landscape of engineering research and education. 🧵 hub.jhu.edu/2023/08/03/joh…

🧵 A recap of JHU CS at #ACL2023! (1/10) Congrats to @DanielKhashabi & team for their Outstanding Paper Award for "The Tail Wagging the Dog," an investigation into benchmarking social bias in datasets. Read more here: arxiv.org/pdf/2210.10040…

We tried a whole bunch of sensible ways to improve large language model decoding, and this was the one that actually worked. Virtual poster today, Wed, 11am ET at #ACL2023. virtual2023.aclweb.org/paper_P511.html

arxiv.org/abs/2210.15097 We propose contrastive decoding (CD), a more reliable search objective for text generation by contrasting LMs of different sizes. CD takes a large LM (expert LM e.g. OPT-13b) and a small LM (amateur LM e.g. OPT-125m) and maximizes their logprob difference
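To make the CD objective concrete, here is a minimal one-step greedy sketch. It assumes both LMs share a vocabulary and that we already have their next-token probabilities as plain dicts (the toy numbers are made up for illustration, not taken from OPT-13b or OPT-125m). The contrastive decoding paper pairs the logprob difference with a plausibility constraint that only admits tokens the expert itself finds reasonably likely; the function name `contrastive_next_token` and the default `alpha = 0.1` are assumptions of this sketch.

```python
import math
from typing import Dict


def contrastive_next_token(expert: Dict[str, float],
                           amateur: Dict[str, float],
                           alpha: float = 0.1) -> str:
    """Choose one next token greedily under a contrastive decoding objective.

    Hypothetical helper for illustration. `expert` and `amateur` map tokens
    to next-token probabilities (assumed strictly positive, as softmax
    outputs are, and over the same vocabulary).
    """
    # Plausibility constraint: keep only tokens whose expert probability is
    # at least alpha times the expert's top probability.
    threshold = alpha * max(expert.values())
    candidates = [tok for tok, p in expert.items() if p >= threshold]

    # CD score: log p_expert(tok) - log p_amateur(tok).
    def cd_score(tok: str) -> float:
        return math.log(expert[tok]) - math.log(amateur[tok])

    return max(candidates, key=cd_score)


# Toy next-token distributions (made up for illustration).
expert = {"the": 0.40, "Hopkins": 0.35, "a": 0.24, "zebra": 0.01}
amateur = {"the": 0.60, "Hopkins": 0.10, "a": 0.2899, "zebra": 0.0001}

greedy_choice = max(expert, key=expert.get)        # plain greedy on the expert
cd_choice = contrastive_next_token(expert, amateur)
unconstrained = contrastive_next_token(expert, amateur, alpha=0.0)
print(greedy_choice, cd_choice, unconstrained)     # prints: the Hopkins zebra
```

Plain greedy decoding on the expert picks the generic "the". CD prefers "Hopkins", the token the large model likes much more than the small one does. With `alpha=0.0` the constraint is disabled and the implausible "zebra" wins purely because the amateur assigns it almost no mass, which is the failure mode the plausibility constraint is meant to block.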