Kevin Lu

@_kevinlu

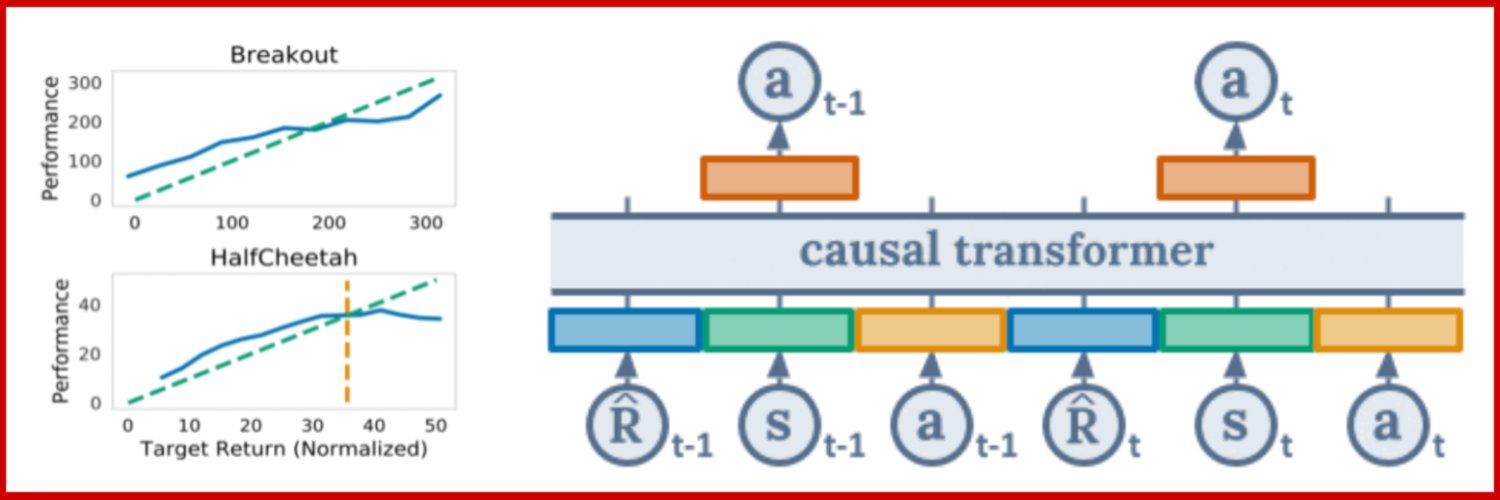

formerly:
- @openai: RL, synthetic data, efficient models
- @weHRTyou: sequential decision making
- @berkeley_ai: decision transformer, universal computation

Come check out o1-mini: SoTA math reasoning in a small package openai.com/index/openai-o… with @ren_hongyu @shengjia_zhao @Eric_Wallace_ & the rest of the OpenAI team

I surveyed 30+ AI researchers, builders, and VCs on what actually matters in AI right now: what they pay for, which companies to invest in, hot takes, etc. Here's what AI insiders think 2025+ will bring:

exciting progress in rich, multi-step, tool-use environments for RL on your codebase! we should think of more ways to connect your personal use cases to frontier models to ground them in real tasks. excited to try out Asimov, congrats to @MishaLaskin Ioannis & co for the…

Code understanding agents are kind of the opposite of vibe coding -- users actually end up with *more* intuition. It's also a problem ripe for multi-step RL. Contexts are huge, so there's large headroom for agents that "meta" learn to explore effectively. Exciting to push on!

Exciting implications for environment design, search, and science 🙂 A lot of good science is smart experimental design resulting in a precise hypothesis that can be clearly verified. Today we have systems like AlphaEvolve that can optimize easy-to-verify systems -- how can we…

New blog post about asymmetry of verification and "verifier's law": jasonwei.net/blog/asymmetry… Asymmetry of verification, the idea that some tasks are much easier to verify than to solve, is becoming an important idea now that we have RL that finally works generally. Great examples of…

one of the things we need for AI to mature is more efficient capital allocation:
- what if I buy a large cluster but find no use for it?
- what if I sign a nine-figure contract with a company that fails to deliver me a good model?
- what if I pay $100M for a researcher who…

AI companies are the new utilities. Compute goes in → intelligence comes out → distribute through APIs. But unlike power companies, which can stockpile coal and hedge natural gas futures, OpenAI can't stockpile compute. Every idle GPU second = money burned. Use it or lose it.

The race for LLM "cognitive core": a few-billion-parameter model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing. Its features are slowly crystallizing:
- Natively multimodal…

I’m so excited to announce Gemma 3n is here! 🎉
🔊 Multimodal (text/audio/image/video) understanding
🤯 Runs with as little as 2GB of RAM
🏆 First model under 10B with an @lmarena_ai score of 1300+
Available now on @huggingface, @kaggle, llama.cpp, ai.dev, and more

Exciting to share what I've been working on in the past few months! o3 and o4-mini are our first reasoning models with full tool support, including Python, search, imagegen, etc. They also come with the best VISUAL reasoning performance to date!

Introducing OpenAI o3 and o4-mini—our smartest and most capable models to date. For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation.