Hanlin Zhang

@_hanlin_zhang_

CS PhD student @Harvard, @googleai

Joined September 2019

329 Following

1K Followers

Pinned

Hanlin Zhang @_hanlin_zhang_ · Nov 22

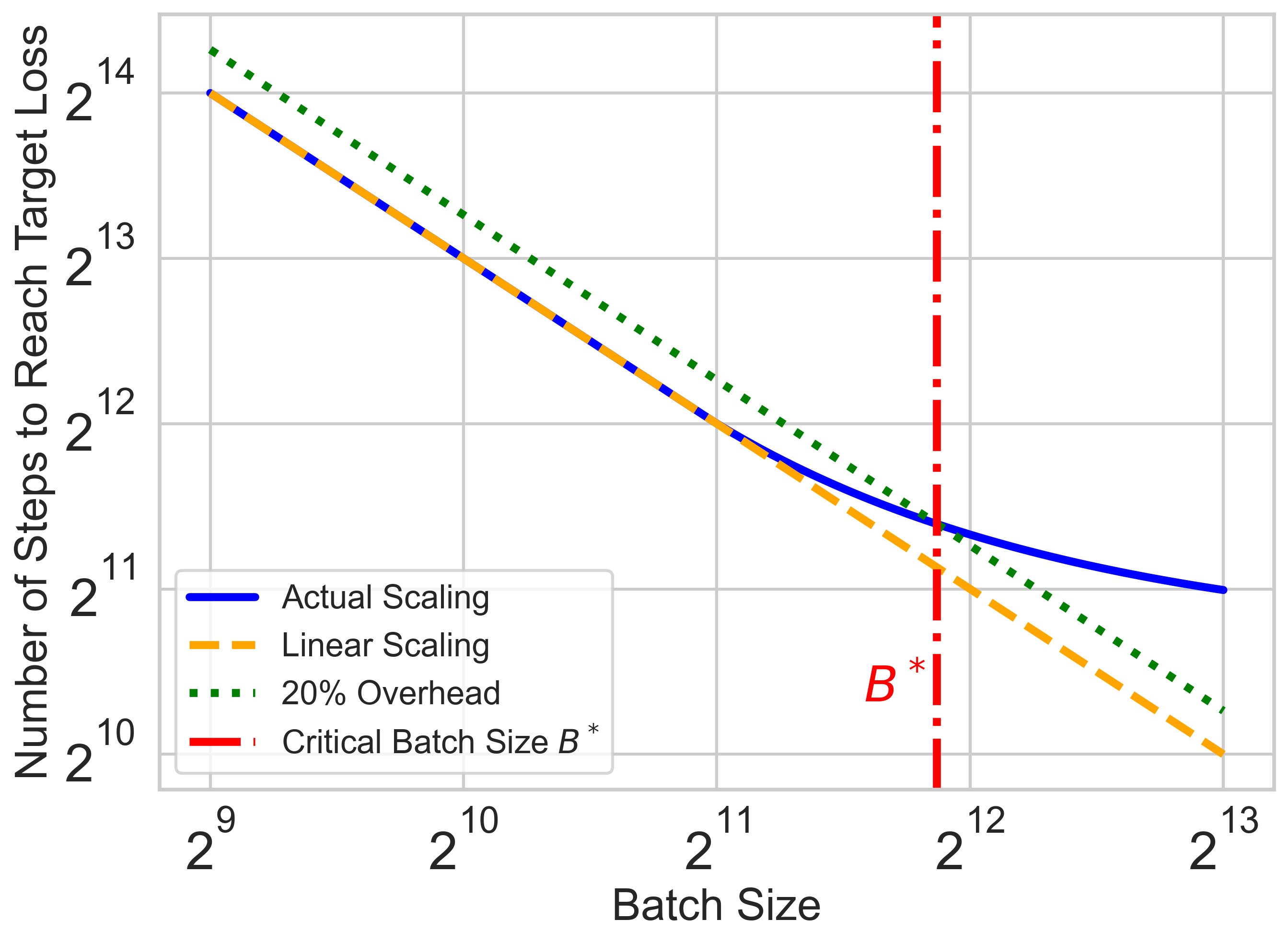

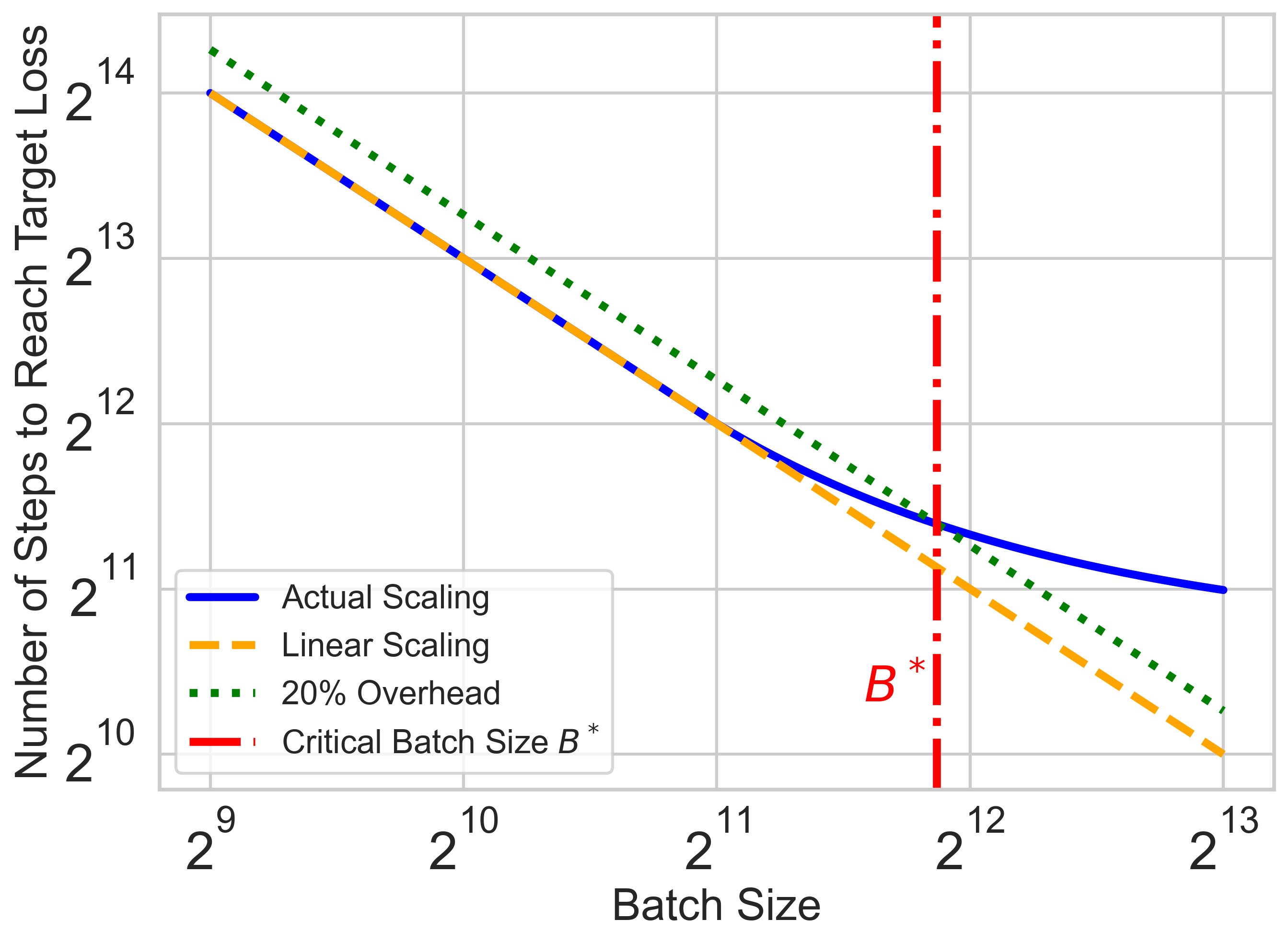

Critical batch size is crucial for reducing the wall-clock time of large-scale training runs with data parallelism. We find that it depends primarily on data size. 🧵 [1/n] Paper 📑: arxiv.org/abs/2410.21676 Blog 📝: kempnerinstitute.harvard.edu/research/deepe…

Hanlin Zhang Retweeted

Ori Press @ori_press · Jul 2

Do language models have algorithmic creativity? To find out, we built AlgoTune, a benchmark that challenges agents to optimize 100+ algorithms such as gzip compression, AES encryption, and PCA. Frontier models struggle, finding only surface-level wins. Lots of headroom here! 🧵⬇️