Thomas Schranz 🍄

@__tosh

🚜 building - 🌌 Atlas, tools to help you get the most out of LLMs - ♟️ Chess Cats - 🧇 Waffle, messaging w/ friends - 🍓 Jam, open source Clubhouse (@jam_systems)

early stage product: 🪵 collect tinder, start fire before freeze. later stage product: 🔥 keep fire alive, grow fire, defend fire. very different. h/t @christineluc

TIL: tailwind puts all color definitions into output.css whether they are used or not. not trivial to remove them in tailwind v4

still wonder if agents like claude code or amp are useful? git clone anything you find interesting, ask questions about the codebase, see how they translate your questions into ripgrep + give you answers from the code they read and their vast knowledge. investigate

Claude uptime looks a bit wild right now status.anthropic.com

👀🔥 new Qwen3

Bye Qwen3-235B-A22B, hello Qwen3-235B-A22B-2507! After talking with the community and thinking it through, we decided to stop using hybrid thinking mode. Instead, we’ll train Instruct and Thinking models separately so we can get the best quality possible. Today, we’re releasing…

everyone wondering about when AGI, me wondering when reply spam is finally becoming interesting to read

Just launched LLM SEO EEAT on Product Hunt and currently #1. Check how trustworthy Google thinks your content is - get an instant EEAT score + AI-powered SEO tips. @lovable_dev scored only 40/100 👀 👉 producthunt.com/products/llm-s… Upvotes appreciated 💛

I don't think the endgame of agentic coding UI will be jules, codex, or claude code in github PRs. Slack and discord won over bulletin boards for collaboration. Chat is faster, more direct. As long as we iterate we want to be as close to what's happening as possible.

Is there a "System Design" podcast, where the host talks to the engineers who built the systems we know and love, and goes deep on them? e.g. "Building Google Maps with the founder of Google Maps" etc

Speed’s gonna be the next frontier and @AnthropicAI isn’t great at it.

Kimi K2 Providers: Groq is serving Kimi K2 at >400 output tokens/s, 40X faster than Moonshot’s first-party API. Congratulations to a number of providers for being quick to launch APIs for Kimi K2, including @GroqInc, @basetenco, @togethercompute, @FireworksAI_HQ, @parasail_io,…

Any reason why @GroqInc doesn't serve any of the larger foundation models yet? No Openai, Anthropic, Grok 👀 Wen Grok on Groq?

TIL: Anthropic models are on Google and Amazon but not (yet?) on Azure

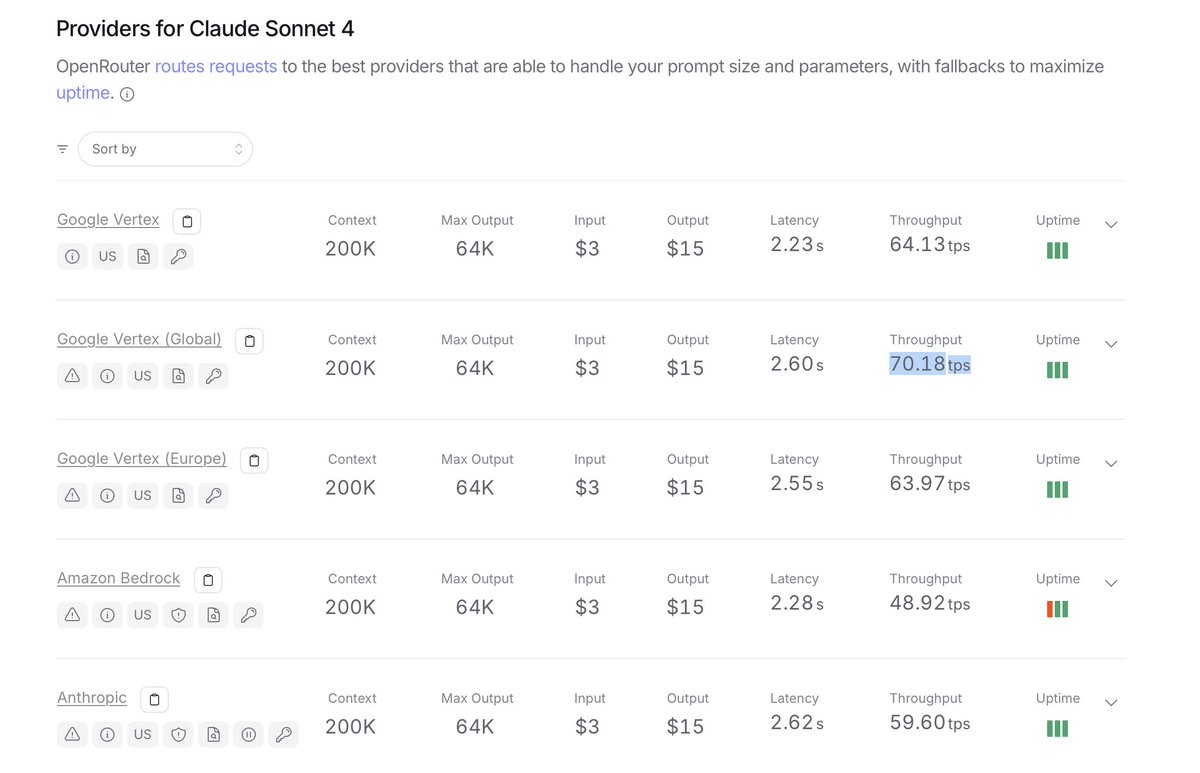

Claude Sonnet 4 inference in tokens / s: Google Vertex: 70, Anthropic: 60, Amazon: 50. note: this is not only about inference speed but also about demand & varies over time. just a current snapshot via openrouter
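to make those numbers a bit more tangible, here is a rough sketch of what they would mean for one long reply. the 2100-token response length is a made-up value for illustration, and the estimate ignores time-to-first-token and queueing, so treat it as a back-of-the-envelope figure, not a benchmark:

```python
# Snapshot figures from the post above (tokens per second); they vary over time.
throughput_tps = {"Google Vertex": 70, "Anthropic": 60, "Amazon": 50}


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Rough decode time, ignoring time-to-first-token and queueing."""
    return num_tokens / tokens_per_second


# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 2100

estimates = {
    provider: seconds_to_generate(RESPONSE_TOKENS, tps)
    for provider, tps in throughput_tps.items()
}

for provider, secs in estimates.items():
    print(f"{provider}: {secs:.0f} s")
```

under these assumptions the gap between the fastest and slowest provider is about 12 seconds per 2100-token reply.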