the tiny corp

@__tinygrad__

We make tinygrad and sell tinybox, the best perf/$ AI computer. Our mission is to commoditize the petaflop.

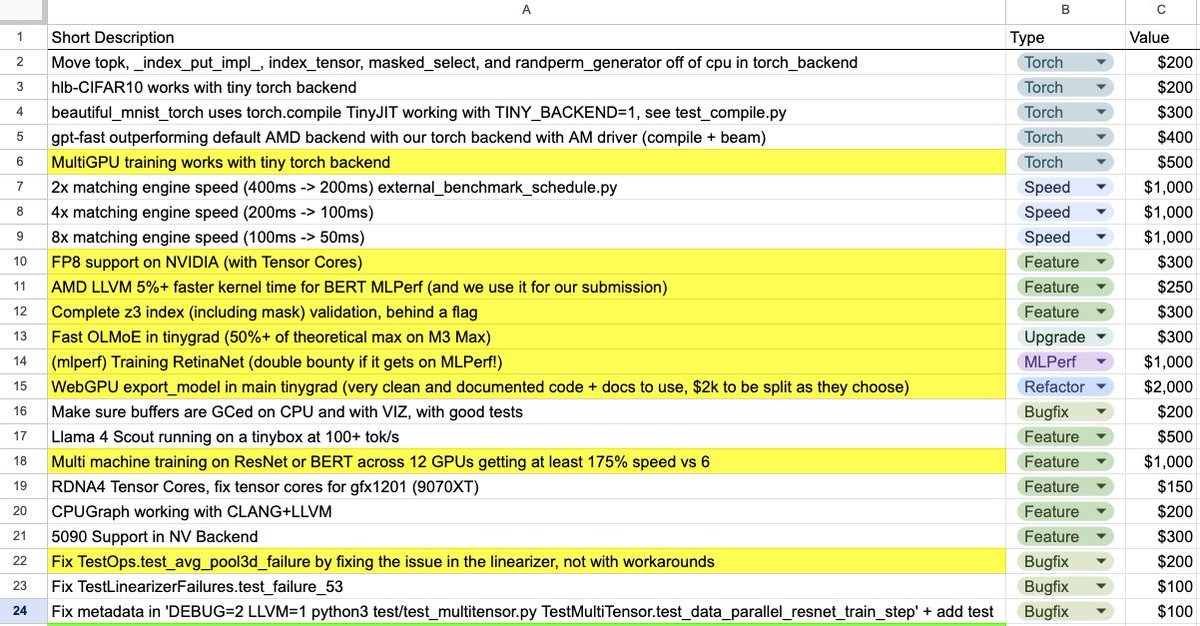

In uncertain times, tinygrad always has bounties for you to solve. Over $50k paid out to date. Bounties are also the only path to a job here; all hiring will look like this soon. bounties dot tinygrad dot org

Who thinks tinygrad is going to win?

what if you could take a deep learning program, search the space of possible equivalent programs, expose the hardware architecture to your search, and find the verifiably optimal program to run on that hardware?
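A toy sketch of that idea, in plain Python rather than tinygrad: the "programs" are the six loop orders of a small matmul, each candidate is checked against a reference result, and the winner is chosen by a cost model. The names `matmul_with_order`, `toy_cost`, and `search` are hypothetical, and the cost model (penalizing strided walks of row-major arrays in the innermost loop) is an assumption standing in for a real hardware description. It is not how tinygrad itself searches.

```python
from itertools import permutations

def matmul_with_order(A, B, order):
    """Compute C = A @ B with the three loops nested in the given order."""
    rows, inner_dim, cols = len(A), len(B), len(B[0])
    extent = {"i": rows, "j": cols, "k": inner_dim}
    C = [[0] * cols for _ in range(rows)]
    idx = {}
    for a in range(extent[order[0]]):
        idx[order[0]] = a
        for b in range(extent[order[1]]):
            idx[order[1]] = b
            for c in range(extent[order[2]]):
                idx[order[2]] = c
                i, j, k = idx["i"], idx["j"], idx["k"]
                C[i][j] += A[i][k] * B[k][j]
    return C

def toy_cost(order):
    """Assumed cost model: one penalty per array that the innermost loop
    walks along its row axis (a strided access in row-major layout)."""
    innermost = order[2]
    # (row axis, column axis) for A[i][k], B[k][j], C[i][j]
    accesses = [("i", "k"), ("k", "j"), ("i", "j")]
    return sum(1 for row_axis, _col_axis in accesses if innermost == row_axis)

def search(A, B):
    """Enumerate equivalent programs, verify each one, return the cheapest."""
    reference = matmul_with_order(A, B, ("i", "j", "k"))
    verified = []
    for order in permutations(("i", "j", "k")):
        # Equivalence is checked by execution, not assumed.
        if matmul_with_order(A, B, order) != reference:
            raise AssertionError(f"loop order {order} changed the result")
        verified.append(order)
    return min(verified, key=toy_cost), reference

A = [[1, 2, 3], [4, 5, 6]]
B = [[7, 8], [9, 10], [11, 12]]
best_order, result = search(A, B)
print(best_order, result)
```

Under this cost model the search prefers an order with `j` innermost, since both `B` and `C` are then read and written contiguously. A real search space would also cover tiling, vectorization, and hardware-specific instructions.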

Python is an amazing language. It needs more work on its type system, but no language is close for the ability to express raw ideas with minimal overhead.

i discovered astral and tinygrad at approximately the same time. changed my views towards python completely

Every week we have a meeting open to all in our Discord, and it's meeting time now!

Check out our complete zero-dependency, 139-line Llama implementation. github.com/tinygrad/tinyg…

looking to implement a minimal, educational LLM inference engine on top of tinygrad, but I keep pondering the question: which optimizations should be implemented by hand, and which are supposed to be handled by @__tinygrad__ itself?

This is tinygrad's description of the tensor cores of all the major GPUs. No per GPU dialects, just a spec for what they each are.

tinygrad is a bet against irreducible complexity. When you look at Triton and similar, so many things are GPU specific. The task in reverse engineering is ignoring the labels and asking: what is that really? Forget the abstractions, search for the perfect program that runs on the hardware.

America has once again put up roadblocks to us getting a visa. And I'm sure it's not just us. Every decision made like this slowly chips away at America's lead in AI. Most Americans have never lived in a country that wasn't rich, but this is the road to that.

All of tinygrad, including its nonexistent dependencies, fits in the context window. Seed AI type vibes.

You can cut & paste your entire source code file into the query entry box on grok.com and @Grok 4 will fix it for you! This is what everyone @xAI does. Works better than Cursor.

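Earlier, when installing tinygrad, I picked the wrong CUDA version, which kept causing problems with the PTX version of the generated code. Reinstalling over and over didn't fix it. After I let claude-code take over, it found the cache and successfully solved the problem.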

Got my company laptop and the first thing I did after convincing IT to give me WSL..? Installed CUDA and ran beautiful_mnist.py @__tinygrad__

I'm working while referring to tinygrad's code and documentation, and this is seriously amazing. The more I read, the more impressed I get. A refined design is fun to read.

Our MI350X machines are here, thanks @AMD! They are just two racks down from their MI300X friends.

end to end will win neural network frameworks just like it is winning self driving cars. if you have a Conv3D guy, you are going to lose. he's the new cone guy.

Try tinygrad’s webgpu backend on windows: github.com/tinygrad/tinyg…