Zeyuan Allen-Zhu, Sc.D.

@ZeyuanAllenZhu

physics of language models @ Meta (FAIR, not GenAI, not MSL) 🎓:Tsinghua Physics — MIT — Princeton/IAS 🏅:IOI x 2 — ACM-ICPC — USACO — Codejam — math MCM

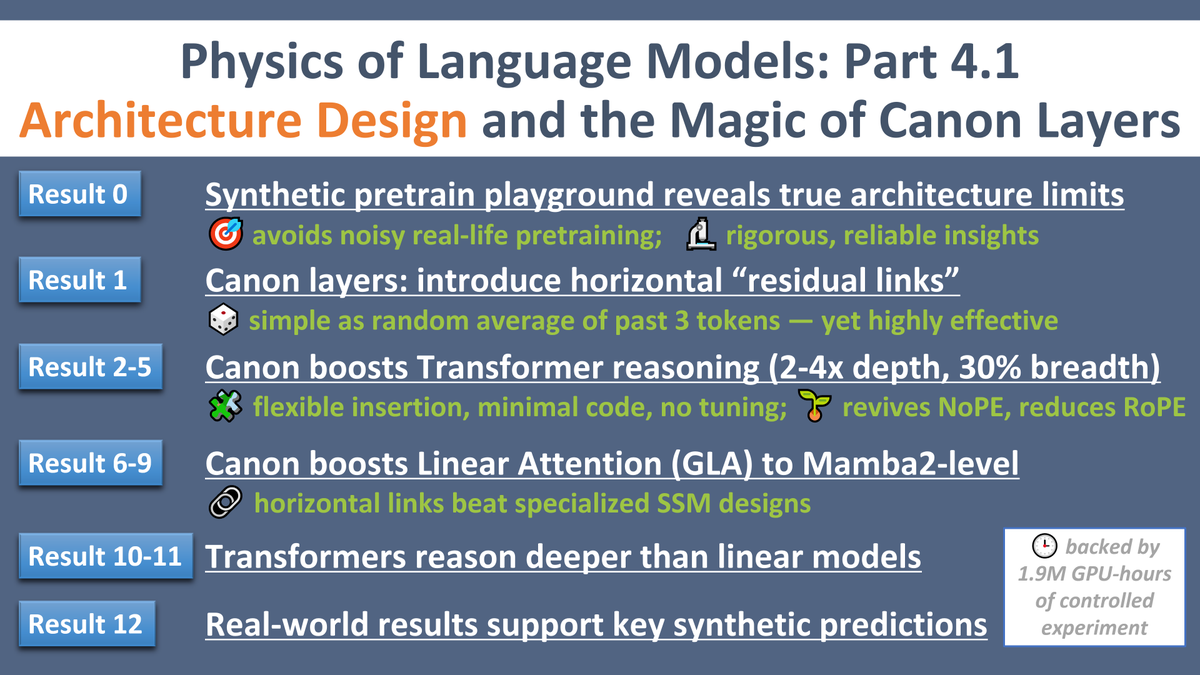

(1/8)🍎A Galileo moment for LLM design🍎 As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite…

Congratulations and I'm proud to have contributed @zichengxu42 to your team :)

Proud to announce an official Gold Medal at #IMO2025🥇 The IMO committee has certified the result from our general-purpose Gemini system—a landmark moment for our team and for the future of AI reasoning. deepmind.google/discover/blog/… (1/n) Highlights in thread:

Just arrived at ICML. Please drop me a message if you are here and like to chat. We are hiring.

Recent media misreport — about Meta’s AI orgs and (oddly) myself — clarifications: 🧪 FAIR is Meta’s long-term research lab — not GenAI, not MSL 🔍 We do open research with public data, no access to GenAI/MSL infra 😅 I’m not bald ⏳ No complaint — just asking folks to be patient

No matter how AI evolves overnight—tech, career, how it may impact me—I remain committed to using "physics of language models" approach to predict next-gen AI. Due to my limited GPU access at Meta, Part 4.1 (+new 4.2) are still in progress, but results on Canon layers are shining

Facebook AI Research (FAIR) is a small, prestigious lab in Meta. We don't train large models like GenAI or MSL, so it's natural that we have limited GPUs. GenAI or MSL's success or failure, past or future, doesn't reflect the work of FAIR. It is important to make this distinction

No matter how AI evolves overnight—tech, career, how it may impact me—I remain committed to using "physics of language models" approach to predict next-gen AI. Due to my limited GPU access at Meta, Part 4.1 (+new 4.2) are still in progress, but results on Canon layers are shining

No matter how AI evolves overnight—tech, career, how it may impact me—I remain committed to using "physics of language models" approach to predict next-gen AI. Due to my limited GPU access at Meta, Part 4.1 (+new 4.2) are still in progress, but results on Canon layers are shining

(1/8)🍎A Galileo moment for LLM design🍎 As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite…

I've wasted too much energy on X, naively thinking any of it mattered. Now I'm truly disillusioned—but finally awake. I'm shedding distractions, returning fully to research and meaningful work. No more replies, only occasional updates. Thanks to the few who truly supported me.

Please stop spreading false rumors. This full arxiv paper underwent peer review. After 30 minutes of discussion, you’ve made no effort to verify the truth or retract the false claim despite my repeated requests. If you retract, I treat this as a misunderstanding, but you haven’t.

a very important clarification: while the original arxiv versions, that people like, did not go through review, shortened versions did go through review, and 4 papers were accepted to ICLR 2025, some of them as a spotlight! (the screenshot above is from one of them).

This person seems stressed and is spreading false rumors on our project. To clarify: this PDF is from our peer-reviewed spotlight paper accepted at ICLR 2025. We have 4 papers accepted at ICLR'25 (Parts 2.1, 2.2, 3.2, 3.3). I suggest you find healthier outlets to cope with stress

(9/8) People suggested I study Primer (arxiv.org/abs/2109.08668). Their multi-dconv-head attention is what I call Canon-B (no-res)—and we found issues with it. Yet, Primer is underrated with just 180 citations. They found meaningful signals from noisy real-life exp that I couldn't

(1/8)🍎A Galileo moment for LLM design🍎 As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite…

It is time! Applications for the global @Google PhD Fellowship Program are NOW open.

Applications for the global @Google PhD Fellowship Program opens on Apr 10th. Fellowships support graduate students doing exceptional and innovative research in computer science and related fields as they pursue their PhD. Learn more and apply by May 15 at goo.gle/phdfellowship

Papers I talked about: (1) One-model deja-vu memorization: arxiv.org/abs/2504.05651 (2) AgentDAM "data minimization" benchmark: arxiv.org/abs/2503.09780

Excited to give a keynote talk tomorrow 9am CET at the IEEE Secure and Trustworthy ML conference.

On Mar 9, they rejected my access to Llama 2 models on huggingface, and there's no button to re-apply. Who should I talk to to fix this? @huggingface @AIatMeta