ZD1908

@ZDi____

(mostly) Audio/TTS ML research & LSTM enjoyer; by myself | 🇦🇷 24 | DMs open

Audio language modeling has typically meant training neural codecs to vector-quantize (VQ) raw audio directly. But what if we tokenized mel spectrograms instead, then trained a vocoder like iSTFTNet to reconstruct the waveform, and trained our AR prior on the mel-spectrogram token indices? We could easily language-model 44.1 kHz audio with a single codebook.
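A minimal sketch of the tokenization step, assuming the mel frames are already computed and a codebook has already been learned (for example with k-means). The function names, the hop length of 512, and the toy codebook are hypothetical illustrations, not the author's setup. The point it shows: with a single codebook, each mel frame becomes exactly one token, so the AR prior's token rate equals the mel frame rate.

```python
import math

# Hypothetical helpers illustrating single-codebook VQ over mel frames.
# Assumes each frame is a list of floats (one value per mel bin) and that the
# codebook was learned beforehand; neither detail comes from the original post.

def quantize_frames(frames, codebook):
    """Return, for each mel frame, the index of the nearest codebook vector (squared L2)."""
    tokens = []
    for frame in frames:
        best_idx, best_dist = 0, math.inf
        for idx, code in enumerate(codebook):
            dist = sum((f - c) ** 2 for f, c in zip(frame, code))
            if dist < best_dist:
                best_idx, best_dist = idx, dist
        tokens.append(best_idx)
    return tokens


def dequantize(tokens, codebook):
    """Map indices back to codebook vectors; a vocoder would consume these frames."""
    return [list(codebook[t]) for t in tokens]


def tokens_per_second(sample_rate, hop_length):
    """With one codebook, the AR prior sees one token per mel frame."""
    return sample_rate / hop_length


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]  # toy 2-bin "mel" codebook
    frames = [[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]]
    print(quantize_frames(frames, codebook))  # [0, 1, 2]
    print(tokens_per_second(44100, 512))      # 86.1328125
```

Under the assumed hop of 512 samples, 44.1 kHz audio comes out to roughly 86 tokens per second. A residual-VQ codec with several codebooks per frame would instead multiply the sequence length (or require a multi-stream prior), which is the overhead the single-codebook idea avoids.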

Coded a cheat program (in C++) for a geopolitical simulation game that let you, among many other things, explode your GDP, annex other countries, and pretend to chat as other players. It even exploited a signed/unsigned integer mismatch in some places to multiply your military.

share the story of how you made your first dollar online

If you ever wonder why Chinese companies like DeepSeek, Qwen, and Kimi can train strong LLMs with far fewer, nerfed Nvidia GPUs, remember: in 1969, NASA’s Apollo mission landed people on the moon with a computer that had just 4KB of RAM. Creativity loves constraints.

As someone who likes to cook, I think this is the best-case scenario. I don't want to be helped (you don't know my workflow and would just get in the way), and I don't want unsolicited advice.

nice

We’re honored to join leaders in tech, government and policy today in D.C. to spotlight the role of U.S. innovation in advancing AI. Winning the AI race demands an open approach that fosters collaboration, transparency and shared innovation to unlock true progress.

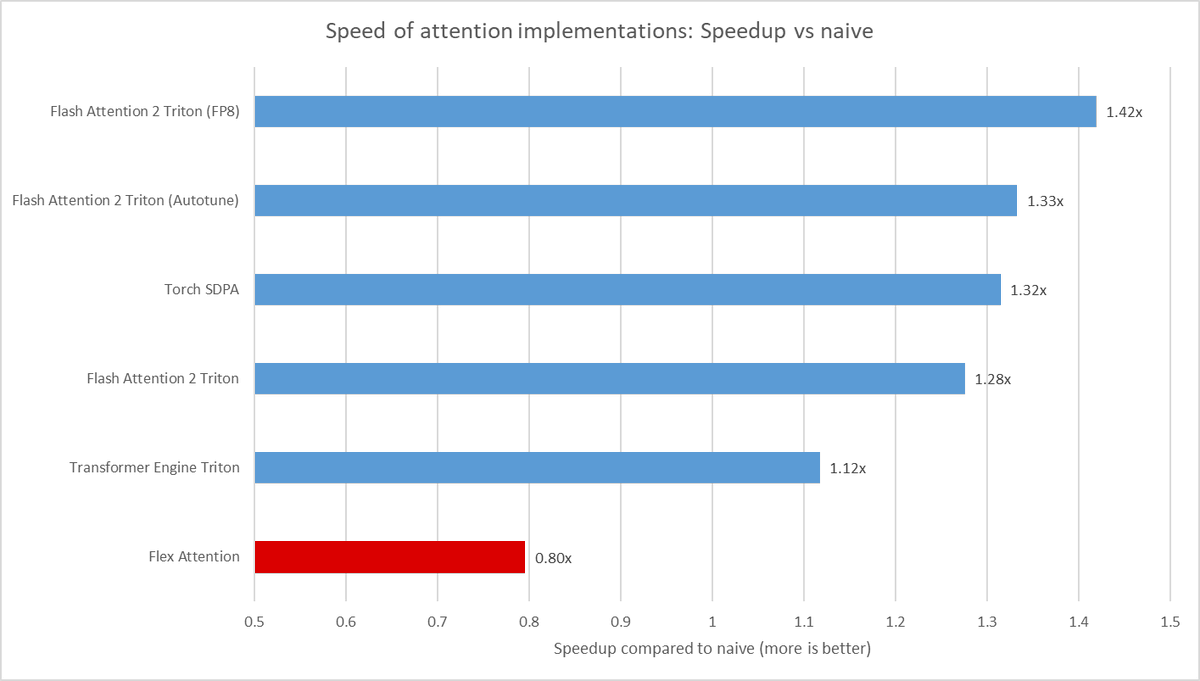

ROCm FlexAttention (torch 2.6.0) is somehow slower than naive attention on nanoGPT?

ROCm FlashAttention has an fp8 variant that gives a decent speedup over bf16, but it's hidden away: it isn't mentioned in the main README, and it has to be imported in a hacky way (the documentation is wrong).

Uranus is warmer than we thought. New computer modeling techniques revealed that Uranus generates internal heat, making it similar to our solar system’s other giant planets, like Jupiter or Neptune. go.nasa.gov/44HzIKx

After two weeks of data collection, it's time to work on comparing attention implementations on ROCm.

The accessibility of machine learning means you can predict pretty much anything, as long as you have enough labeled data in an easy-to-structure format.

Finally got another message from this company. Can't wait another month to get another message.

The non-governmental system is the reason my replacement headphone cable never arrived. It's conspiring against me.

Something that could be handy: a ROCm "quick start" guide for people switching over from CUDA, explaining the differences (and lack thereof) between the CUDA and ROCm versions of PyTorch, vLLM, etc.

The waifu and MechaHitler fiascos have convinced me: I want to work for xAI. I've applied; there's a 99% chance I'll get rejected, but it's still worth a try.

I need more tandoori masala, that stuff goes well on fries and chicken.

I was out there taking pictures of my motorcycle and some guys walking by said "alta moto" (nice bike) and I replied thanks :D