Yanbo Zhang

@YanboZhang3

PhD, Complex Systems, Postdoc at Tufts University

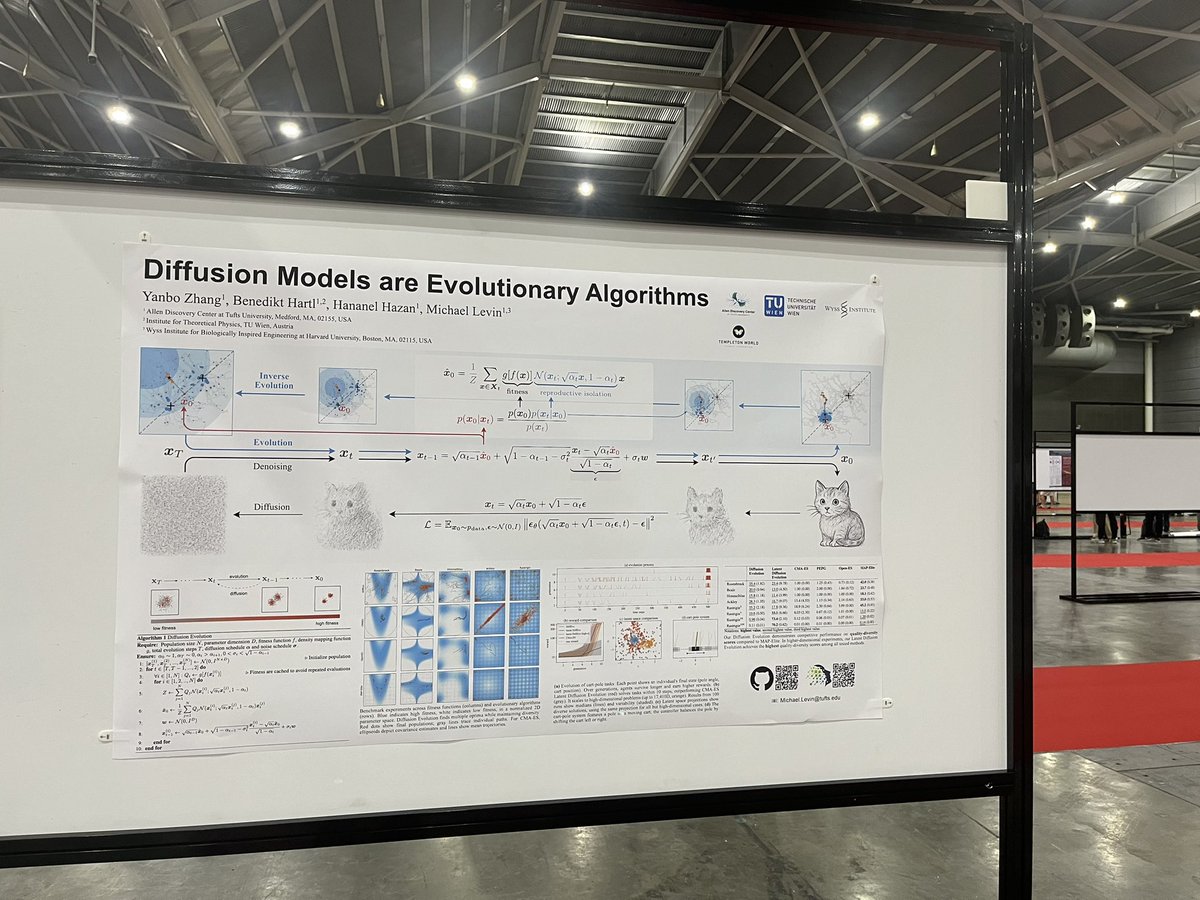

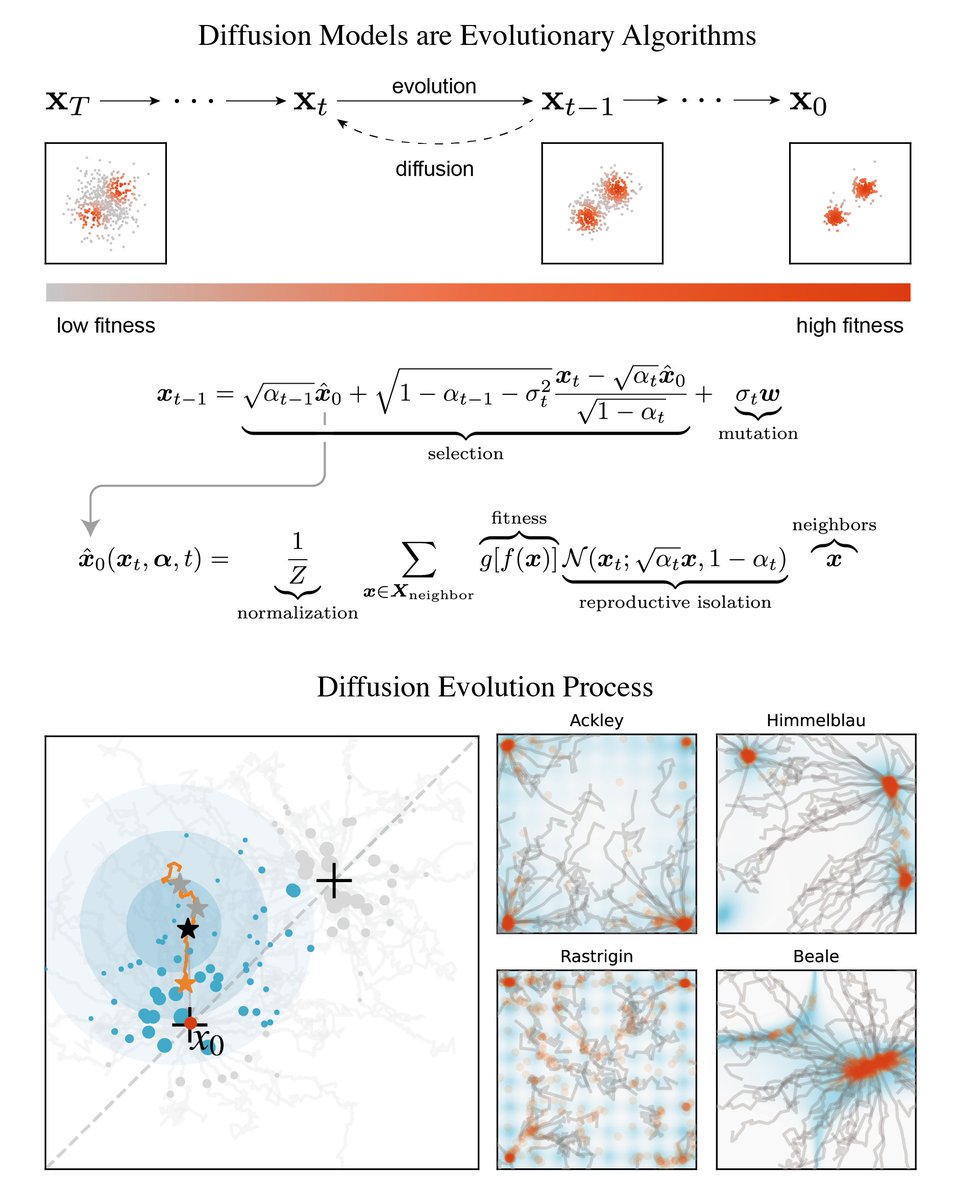

Check out our new preprint! arxiv.org/abs/2410.02543 We find that Diffusion Models are Evolutionary Algorithms! By viewing evolution as denoising, we show they share the same mathematical foundation. We then propose Diffusion Evolution (1/n)

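The Manus team has published a blog post unpacking their context engineering practice. These are counter-consensus lessons that came only after tens of millions of dollars in "tuition" and all sorts of real-world pitfalls. Very valuable. 1. Context engineering beats "reinventing the wheel"…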

I’ve switched back to VSCode + Copilot from Cursor. The latest Cursor update (about a month ago) has made autocomplete horribly unreliable, overly aggressive, and consistently nonsensical.

Why are LLMs so addicted to bullet points? For logical content, they're trash—breaking apart reasoning chains and giving people a false sense of "getting it."

The video generation process can be viewed as the reasoning process for the last frame.

For some reason, Cursor's AI auto-suggestions have become extremely difficult to use in Jupyter Notebook. There are a lot of blank lines, completely unrelated content, and unhelpful “smart” modifications.

Experiment this week: I will try to do whatever ChatGPT o3 asks me to do. Let's see what happens. So far so good.

Mendeley is complete trash. Full of bugs. It makes you feel unsafe when you collaborate with others. And you can't even import references from a document other people send you.

Although I often wonder how philosophers reach conclusions without rigorous experiments, the fact that some do so persuasively underscores just how wasteful today’s LLMs are with data.

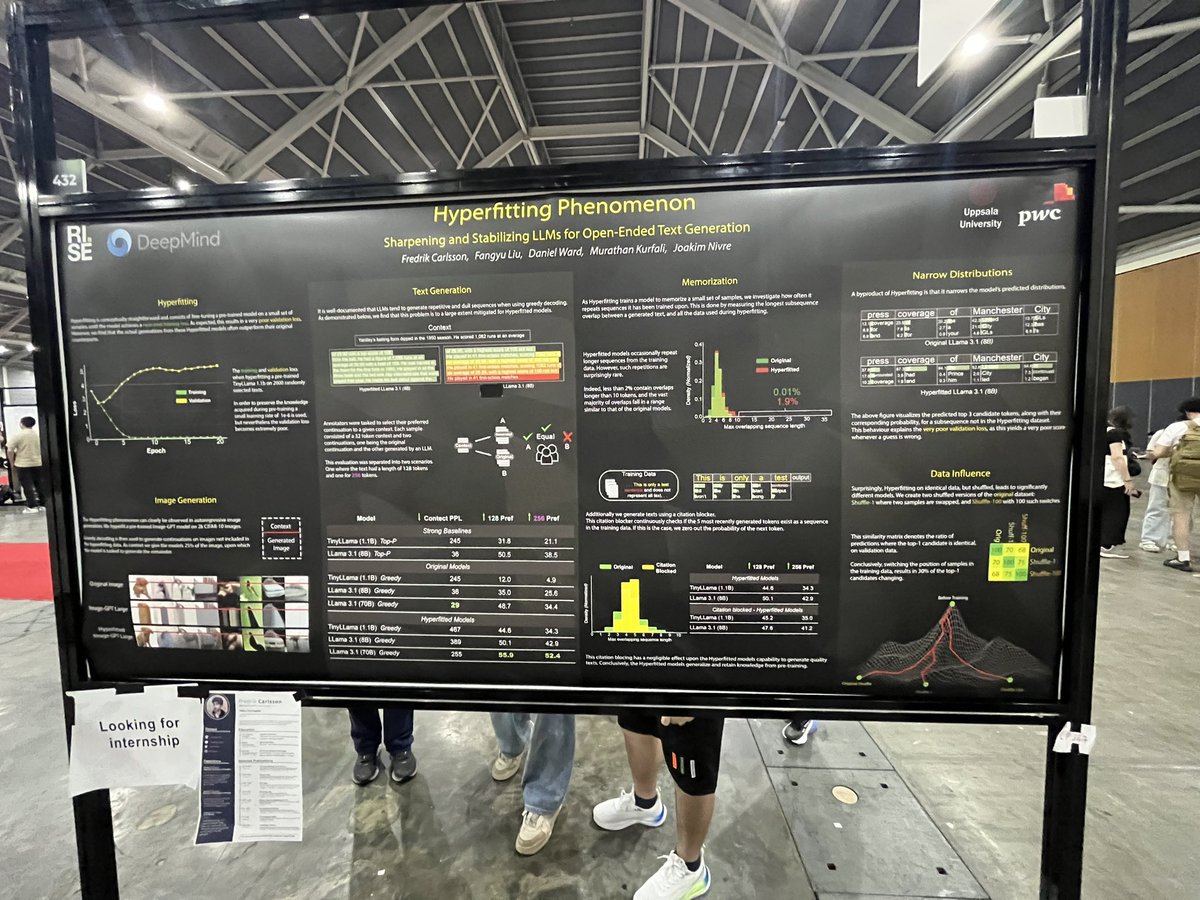

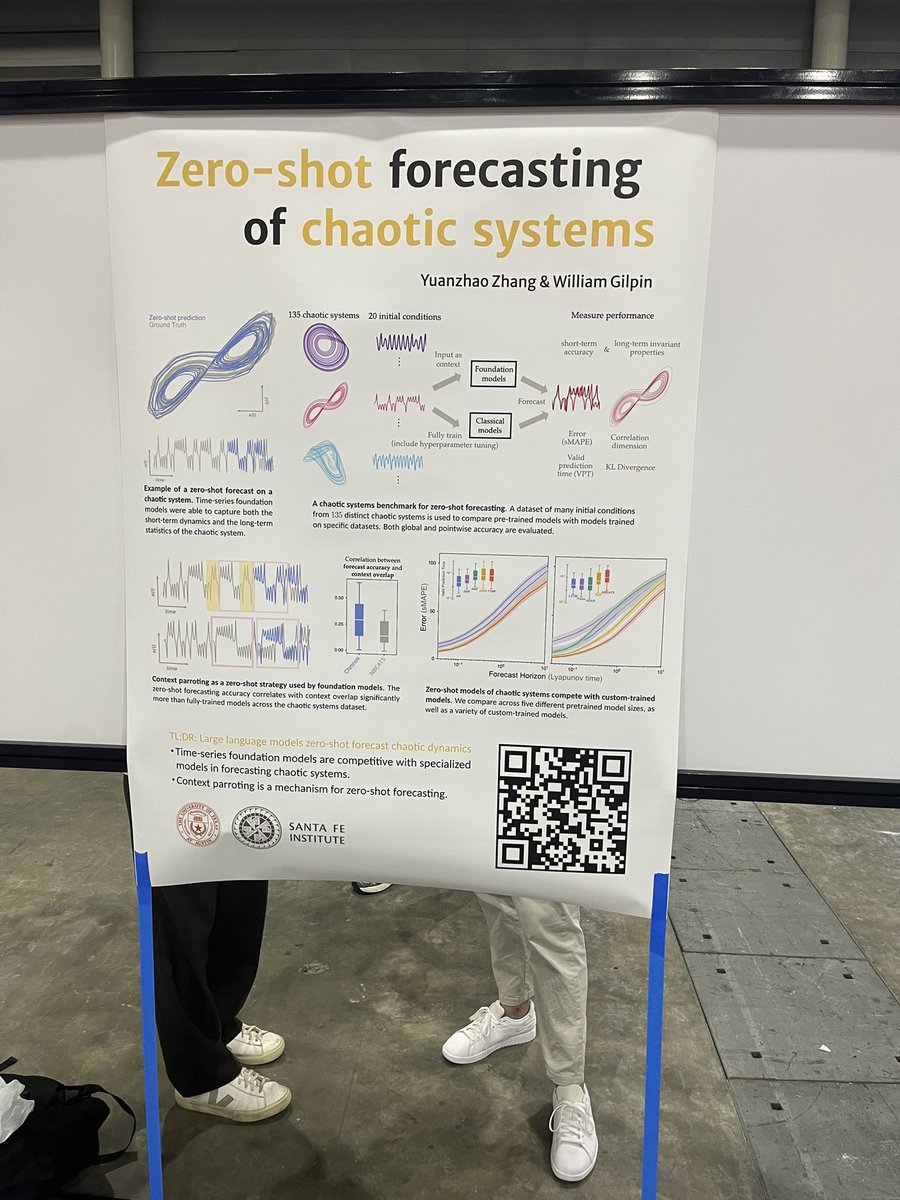

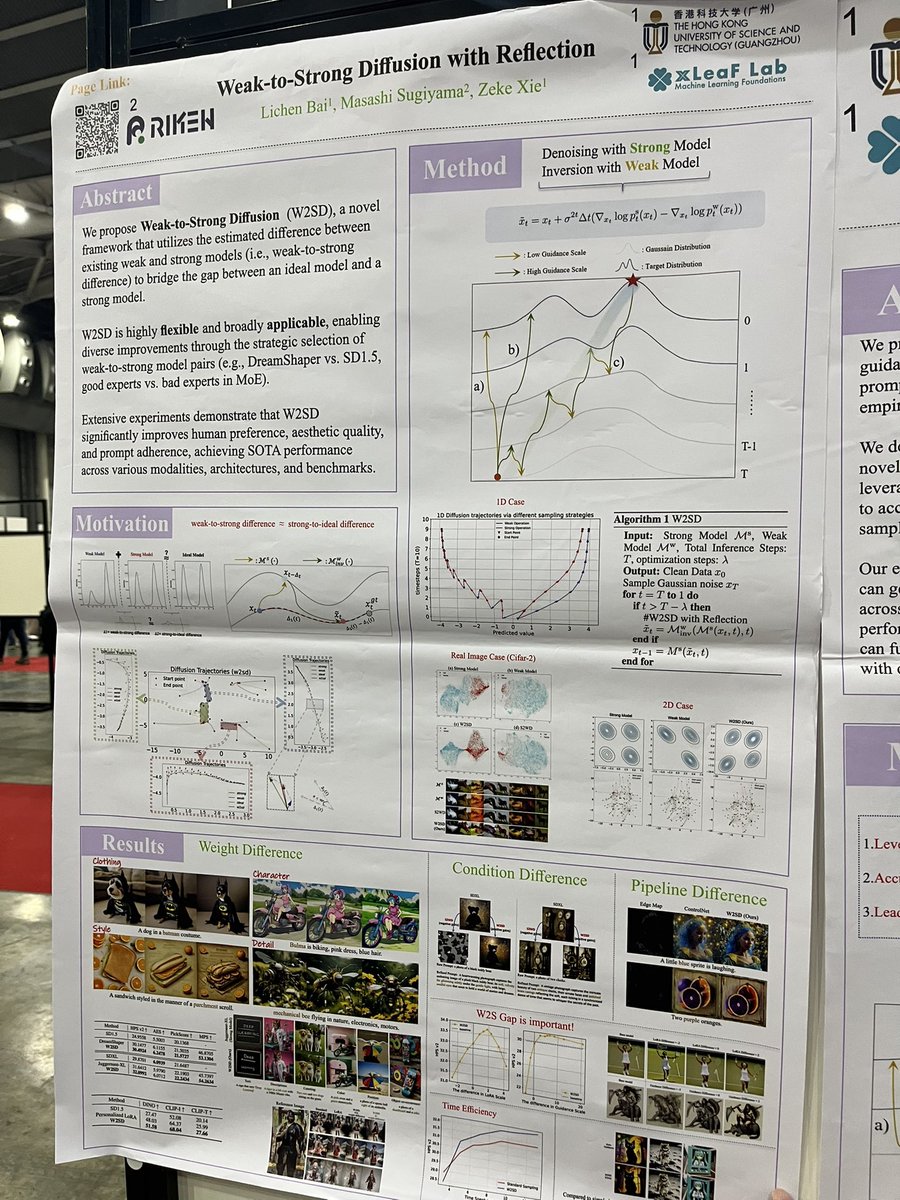

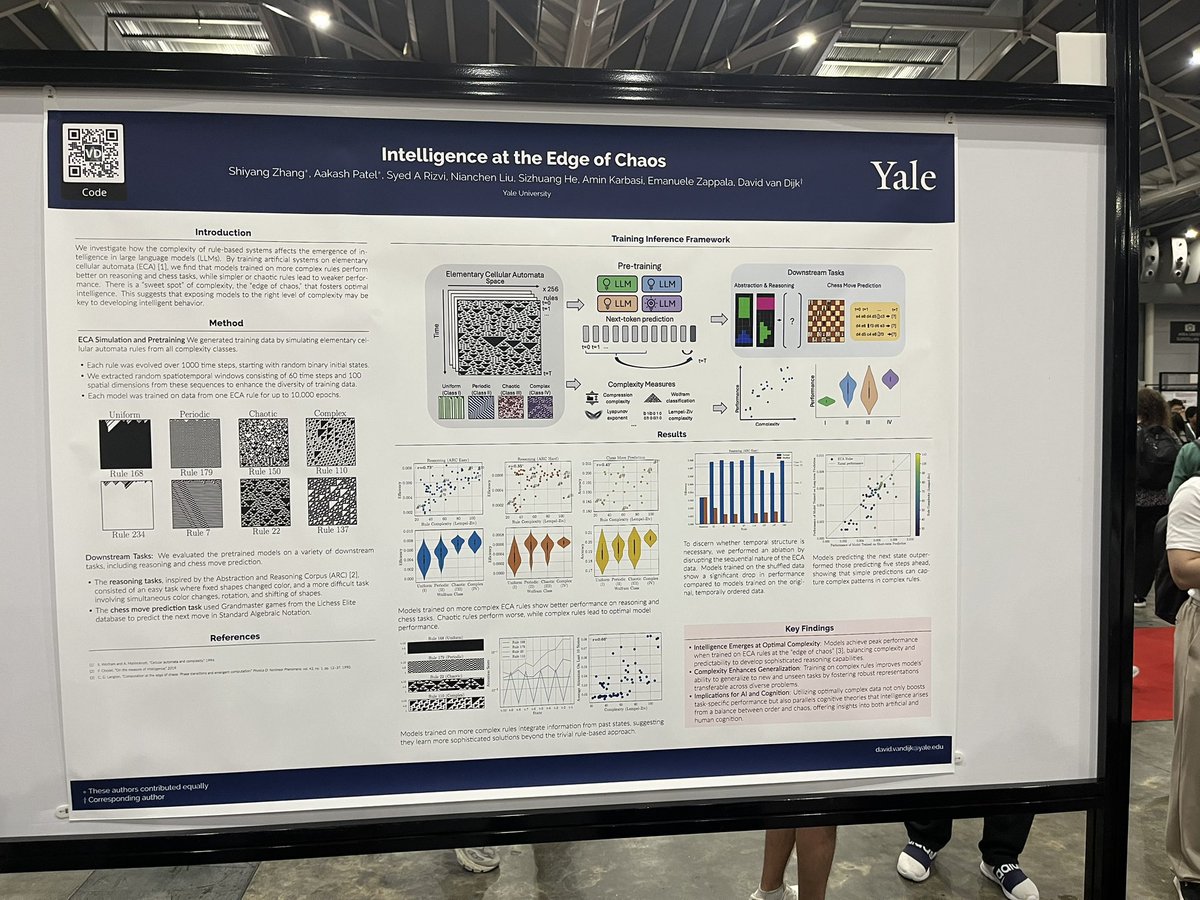

Science shouldn’t be all about chasing SOTA and building tools—it ought to bring us surprises and discoveries too. At today’s ICLR poster session, I picked out a few projects that I think deliver those surprises and discoveries, pushing back against the relentless flood of SOTA

I once thought category theory was too abstract to guide neural network design. But I realize that most NNs don’t elegantly meet the most basic category axioms. The promise of category theory lies in crafting general, nontrivial architectures that naturally embody these axioms

Everything is Studio Ghibli now. Even Lord of the Rings.

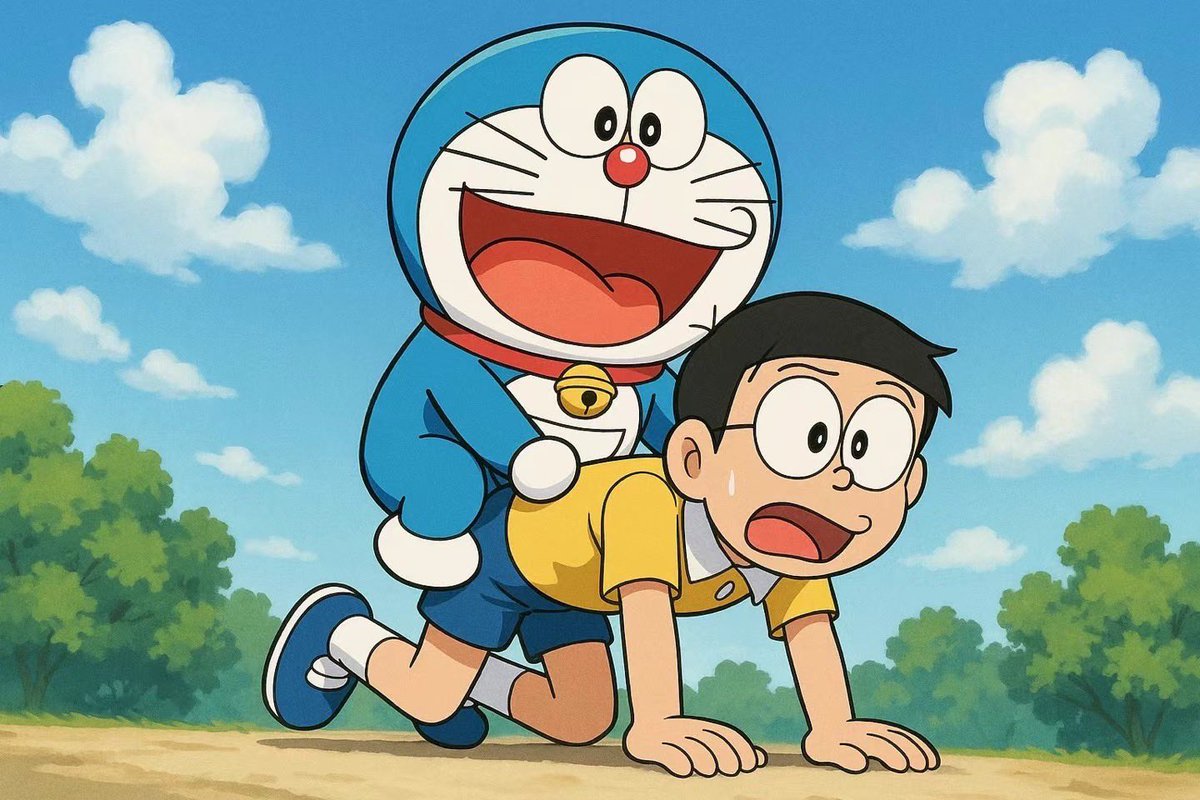

The native image generation feature of ChatGPT is very good, very very good. But it seems to struggle with long context. It gave me this when I asked it to draw a poster based on my recent paper...

Imagine a nice, simple, low-entropy cup of coffee. Pour in some milk and you'll see beautiful & complex structures arise before the cup reaches a high-entropy, homogeneous state and is simple again. Interesting things happen while entropy increases! Here's a fun experiment: 1/3

Kolmogorov Complexity: intelligence (learn) = compression → LLMs; P=(!=)NP: Reasoning = Searching → reasoning models; Are they the same? 🤔