The Variational Book

@TheVariational

The topic of generative AI unites key concepts in machine learning. Follow us to learn more about all things AI.

Does the art of transforming text into images or videos spark your curiosity? @DavidDuvenaud @DrJimFan @WenhuChen @_tim_brooks @kchonycthe @sleepinyourhat Grasp the essence of how diffusion models are constructed by understanding step-wise deformation.

A history of equations. @MLStreetTalk @ecsquendor Thank you MLST for hosting a session on all things generative AI. Check out the 20-page PDF! drive.google.com/file/d/1Y0ggub…

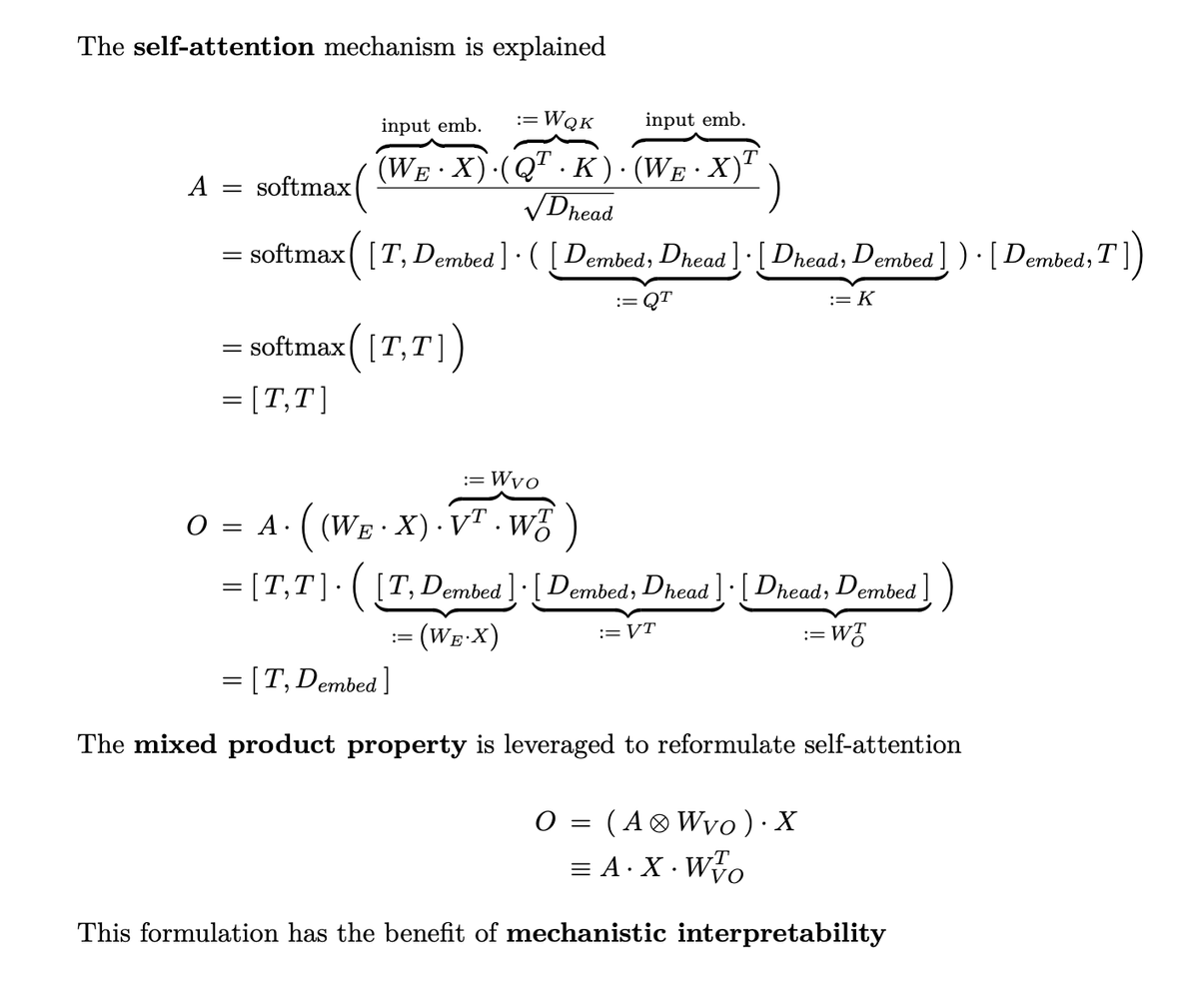

Interested in mechanistic interpretability of transformers? @ch402 @NeelNanda5 @catherineols We offer a brief look.

Vector quantization is taking over! @BytedanceTalk @keyutian @pess_r @robrombach @OriolVinyalsML @koraykv We highlight the details of VQ methods, including VAR, the @NeurIPSConf best paper of the year. Check out the following PDF: drive.google.com/file/d/1XnxS0b…
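At the core of VQ methods is a nearest-neighbor lookup: each continuous encoder output is replaced by the closest entry of a codebook, and that entry's index becomes a discrete token. A minimal sketch in plain Python, assuming squared Euclidean distance and a hand-picked toy codebook (codebook learning and VAR's multi-scale tokenizer are not shown):

```python
# Sketch only: a toy codebook with hypothetical values, not a trained VQ model.

def quantize(vectors, codebook):
    """Return, for each vector, the index of its nearest codebook entry (squared L2)."""
    indices = []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        indices.append(min(range(len(codebook)), key=dists.__getitem__))
    return indices


def dequantize(indices, codebook):
    """Replace each index by its codebook vector (what the decoder receives)."""
    return [codebook[i] for i in indices]


codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]
vectors = [[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]]
tokens = quantize(vectors, codebook)  # discrete token ids
print(tokens)  # [0, 1, 2]
```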

Curious about how diffusion models are guided? @jaakkolehtinen @unixpickle @prafdhar @TimSalimans @hojonathanho Check out our review of Autoguidance, the #NeurIPS2024 best paper runner-up, in the following PDF: drive.google.com/file/d/1WxQ7Zd…

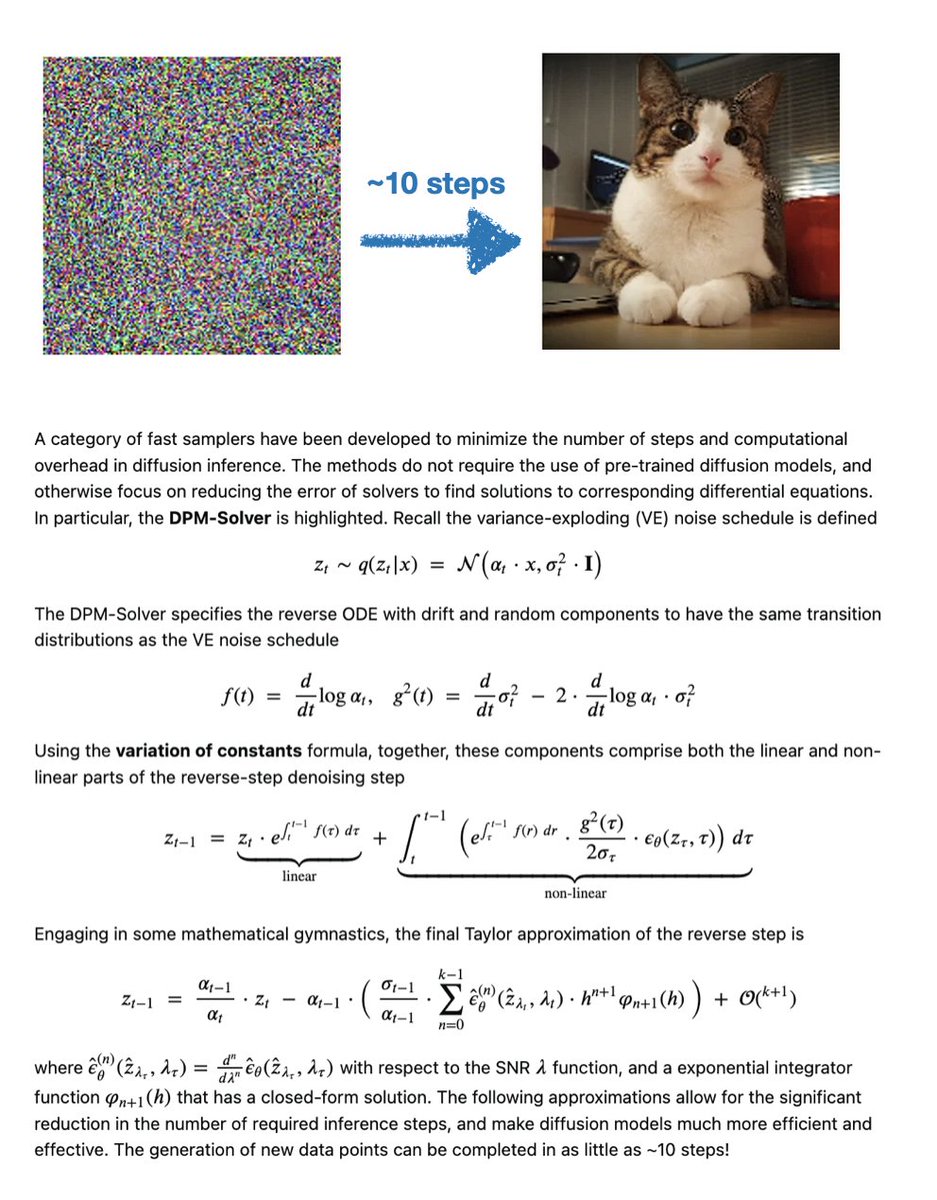
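As we read the Autoguidance paper, the guided denoiser has the same extrapolation form as classifier-free guidance, except that the "unconditional" branch is replaced by a smaller or less-trained version of the same conditional model: D_w = w·D_1 + (1 − w)·D_0. A minimal sketch on plain lists, assuming both denoised estimates are already available; the numbers are made up for illustration:

```python
# Sketch only: the denoiser outputs below are made-up numbers, not model predictions.

def autoguide(d_main, d_weak, w):
    """Combine two denoised estimates as D_w = w * D_1 + (1 - w) * D_0.

    d_main: D_1(x; sigma, c), the main conditional model's denoised estimate
    d_weak: D_0(x; sigma, c), a smaller or less-trained version of the same model
    w:      guidance weight; w = 1 returns the main model unchanged, w > 1 extrapolates
    """
    return [w * a + (1.0 - w) * b for a, b in zip(d_main, d_weak)]


d_main = [0.5, 0.2, -0.1]
d_weak = [0.3, 0.2, 0.1]
guided = autoguide(d_main, d_weak, w=2.0)
```

Where the two models agree (the middle entry) guidance changes nothing; where they disagree, the output is pushed further in the direction the stronger model prefers.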

Who needs patience when you’ve got a fast solver?! @clu_cheng @miskcoo @junyanz89 @FidlerSanja We discuss DPM-Solver, a dedicated ODE solver for diffusion models that generates high-quality samples in as few as 10 steps!
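DPM-Solver exploits the semi-linear structure of the diffusion ODE, written in terms of the half log-SNR λ = log(α/σ). Its first-order variant is short enough to sketch. This assumes a VP-type schedule with signal scale α and noise scale σ, and treats `eps` as a stand-in for a trained noise-prediction network; the higher-order updates that make 10-step sampling work well are not shown:

```python
import math

# Sketch only: `eps` stands in for a trained noise-prediction network's output.

def dpm_solver_1_step(x_s, eps, alpha_s, sigma_s, alpha_t, sigma_t):
    """One first-order DPM-Solver step from time s to a less noisy time t.

    With lambda = log(alpha / sigma) and h = lambda_t - lambda_s > 0:
        x_t = (alpha_t / alpha_s) * x_s - sigma_t * (exp(h) - 1) * eps
    """
    h = math.log(alpha_t / sigma_t) - math.log(alpha_s / sigma_s)
    return [(alpha_t / alpha_s) * x - sigma_t * math.expm1(h) * e
            for x, e in zip(x_s, eps)]


# VP schedule: alpha = sqrt(abar), sigma = sqrt(1 - abar), so alpha^2 + sigma^2 = 1.
x_t = dpm_solver_1_step([1.0], [0.5], alpha_s=0.6, sigma_s=0.8, alpha_t=0.8, sigma_t=0.6)
```

With these toy numbers the step lands at 1.1, the same value a deterministic DDIM step gives: the first-order DPM-Solver update coincides with DDIM, and the additional speed-up comes from the higher-order variants.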

Are you a creative hiding in plain sight? If your imagination runs wild, then diffusion models might be for you! @bahjat_kawar @baaadas @TimSalimans @mo_norouzi We discuss DDRM (Denoising Diffusion Restoration Models) and how it tackles denoising, inpainting, deblurring, and super-resolution.

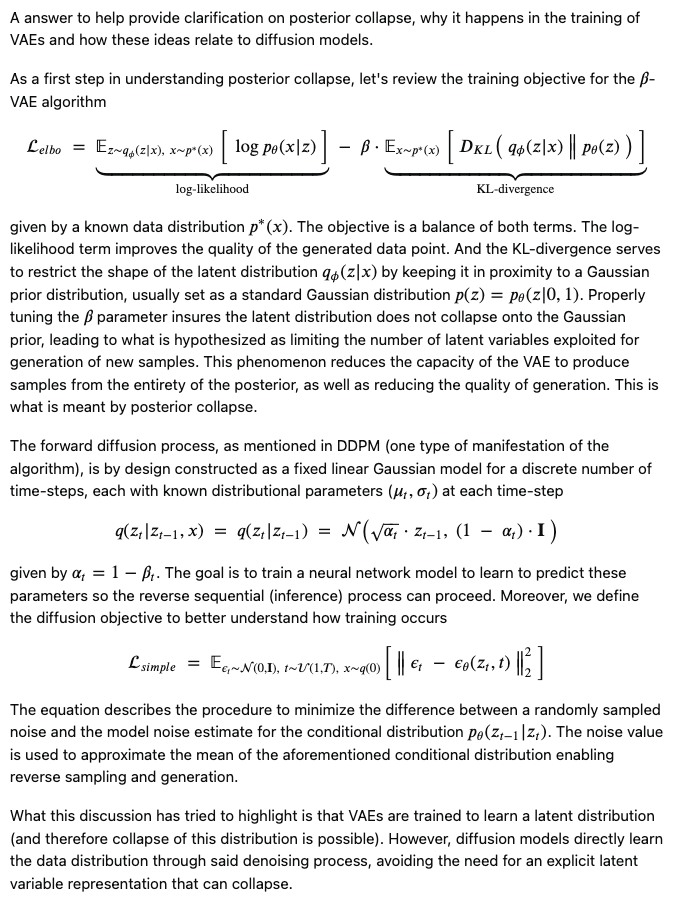

Discover the hidden link: why posterior collapse plagues VAEs but not diffusion models. @gp_pulipaka @rasbt @awnihannun @fly51fly Dive into our latest insights.

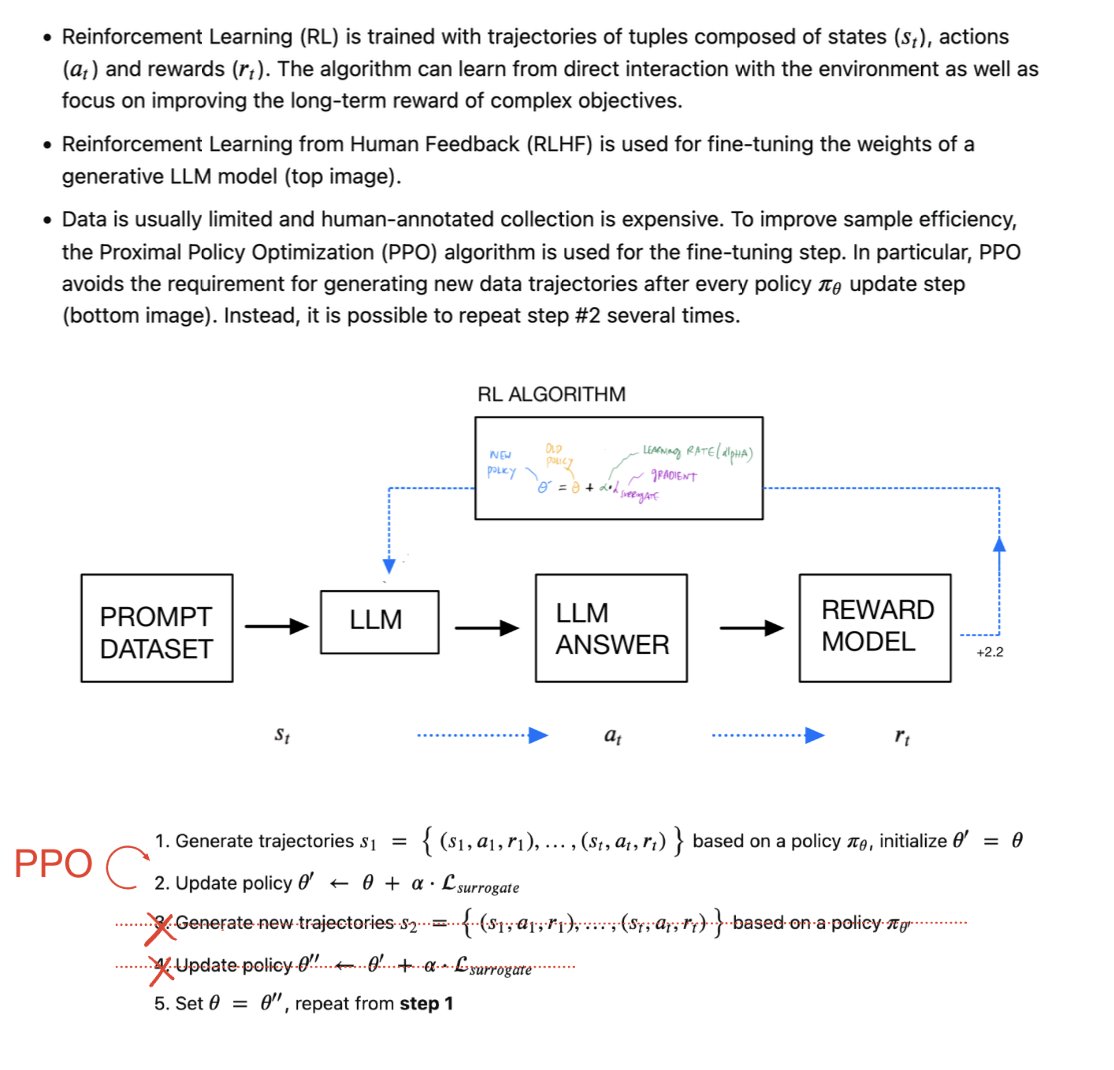

Are you also using LLMs and Reinforcement Learning from Human Feedback (RLHF)? @johnschulman2 @prafdhar @AlecRad @svlevine We explain why the Proximal Policy Optimization (PPO) algorithm is an important component of RLHF.
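What keeps PPO stable is its clipped surrogate objective: the probability ratio between the new and old policy is clipped to [1 − ε, 1 + ε], so a single update cannot move the policy too far. A minimal sketch, assuming per-action log-probabilities and advantage estimates are already computed (the value loss, entropy bonus, and, for RLHF, the KL penalty to a reference model are omitted):

```python
import math

# Sketch only: log-probs and advantages are assumed to come from elsewhere.

def ppo_clip_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized).

    ratio_i = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    term_i  = min(ratio_i * A_i, clip(ratio_i, 1 - eps, 1 + eps) * A_i)
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# A positive-advantage action whose probability grew by 50%: the gain is capped at 1.2.
capped = ppo_clip_objective([math.log(1.5)], [0.0], [1.0])
```

Taking the minimum makes the objective pessimistic: clipping removes the incentive to push a good action's probability further, but never hides a loss.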

Why wait for inference? @baaadas @chenlin_meng @StefanoErmon @DaniloJRezende @robrombach Denoising Diffusion Implicit Models (DDIM) improve upon DDPM diffusion models by cutting the number of sampling steps, and with it the time needed to generate data.

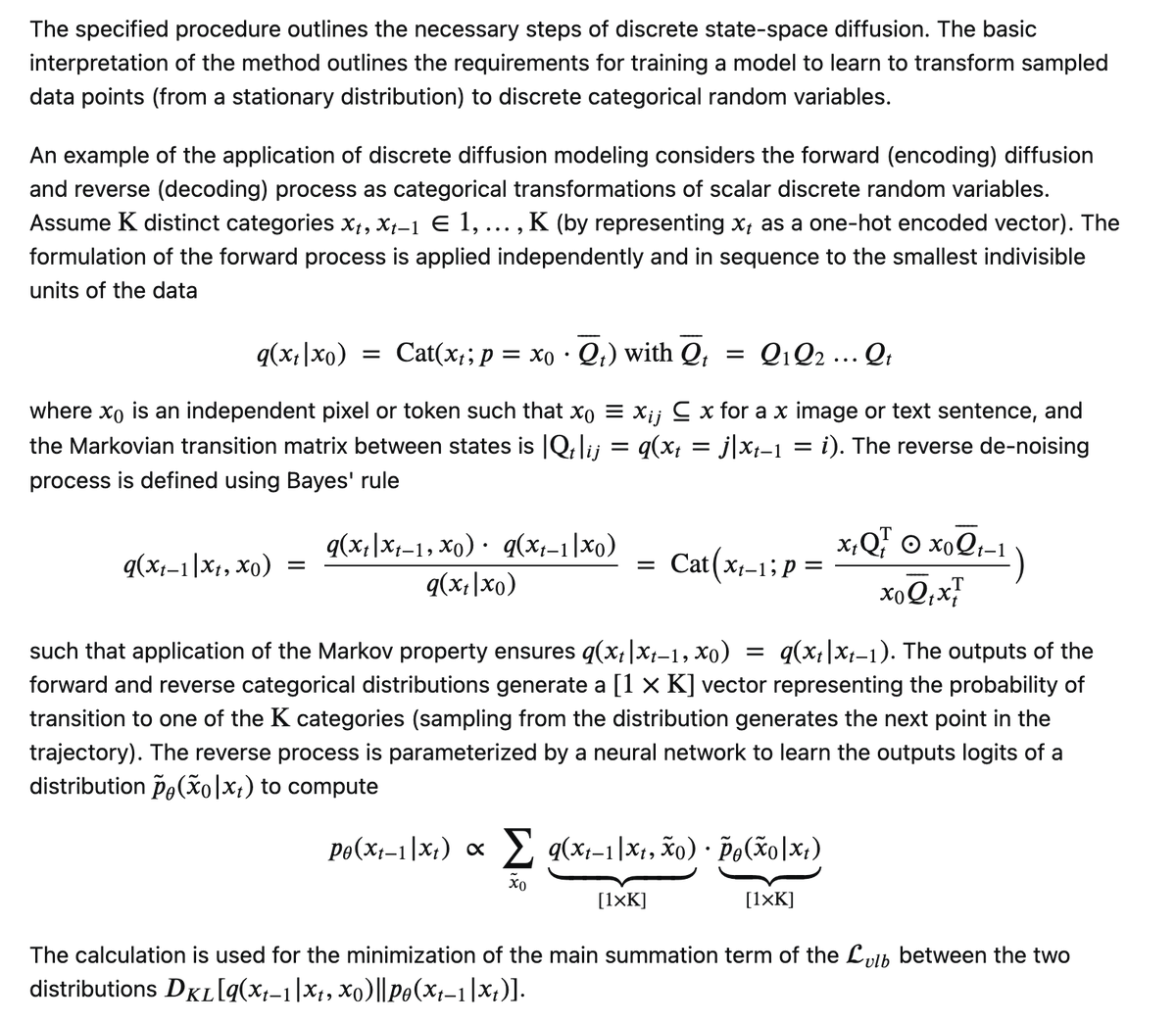
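DDIM reuses a model trained with the DDPM objective but samples with a deterministic, non-Markovian update, which lets it jump between widely spaced timesteps. A minimal sketch of the η = 0 update, assuming a DDPM-style cumulative schedule `abar` and treating `eps` as a stand-in for a trained network's noise prediction:

```python
import math

# Sketch only: `eps` stands in for a trained network's noise prediction.

def ddim_step(x_t, eps, abar_t, abar_prev):
    """Deterministic DDIM update (eta = 0) from abar_t to a less noisy abar_prev.

    abar values come from a DDPM-style schedule (cumulative products of 1 - beta);
    abar_prev may be many original steps away, which is how DDIM skips steps.
    """
    # Estimate the clean sample implied by the current sample and predicted noise.
    x0_pred = [(x - math.sqrt(1.0 - abar_t) * e) / math.sqrt(abar_t)
               for x, e in zip(x_t, eps)]
    # Move to the earlier noise level, reusing the same eps instead of fresh noise.
    return [math.sqrt(abar_prev) * x0 + math.sqrt(1.0 - abar_prev) * e
            for x0, e in zip(x0_pred, eps)]


x_prev = ddim_step([1.0], [0.5], abar_t=0.36, abar_prev=0.64)
```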

Diffusion does not have to use continuous state spaces! @jacobaustin132 @_ddjohnson @vdbergrianne @YiTayML We make the case for discrete state-space diffusion.
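In discrete state-space diffusion such as D3PM, Gaussian noise is replaced by a Markov transition matrix over K categories. For the simplest choice, uniform transitions, the forward marginal has a closed form. A minimal sketch, assuming integer-coded tokens and a given cumulative keep-probability `abar` (other transition matrices, such as absorbing-state ones, are not shown):

```python
import random

# Sketch only: uniform transitions as one example; D3PM also studies other matrices.

def uniform_forward_probs(x0, num_classes, abar):
    """q(x_t | x_0) for uniform-transition discrete diffusion.

    Each step uses Q_t = (1 - beta_t) I + (beta_t / K) 11^T. Products of such
    matrices keep that form, so after t steps the token is unchanged with weight
    abar = prod(1 - beta_s) and spread uniformly over all K classes otherwise.
    """
    probs = [(1.0 - abar) / num_classes] * num_classes
    probs[x0] += abar
    return probs


def sample_xt(x0, num_classes, abar, rng):
    """Draw x_t: keep x0 with probability abar, else pick a class uniformly at random."""
    if rng.random() < abar:
        return x0
    return rng.randrange(num_classes)


probs = uniform_forward_probs(x0=2, num_classes=4, abar=0.6)  # roughly [0.1, 0.1, 0.7, 0.1]
rng = random.Random(0)
noisy_tokens = [sample_xt(2, 4, 0.6, rng) for _ in range(10)]
```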

Thanks to the ICLR Award Committee! And thank you for the kind words, Max! You were the perfect Ph.D. advisor and collaborator, kind and inspiring. I really couldn't have wished for better.

Thank you Yisong and the Award Committee for choosing the VAE for the Test of Time award. I'd like to congratulate Durk, who was my first (brilliant) student when I moved back to the Netherlands and who is the main architect of the VAE. It was absolutely fantastic working with him.

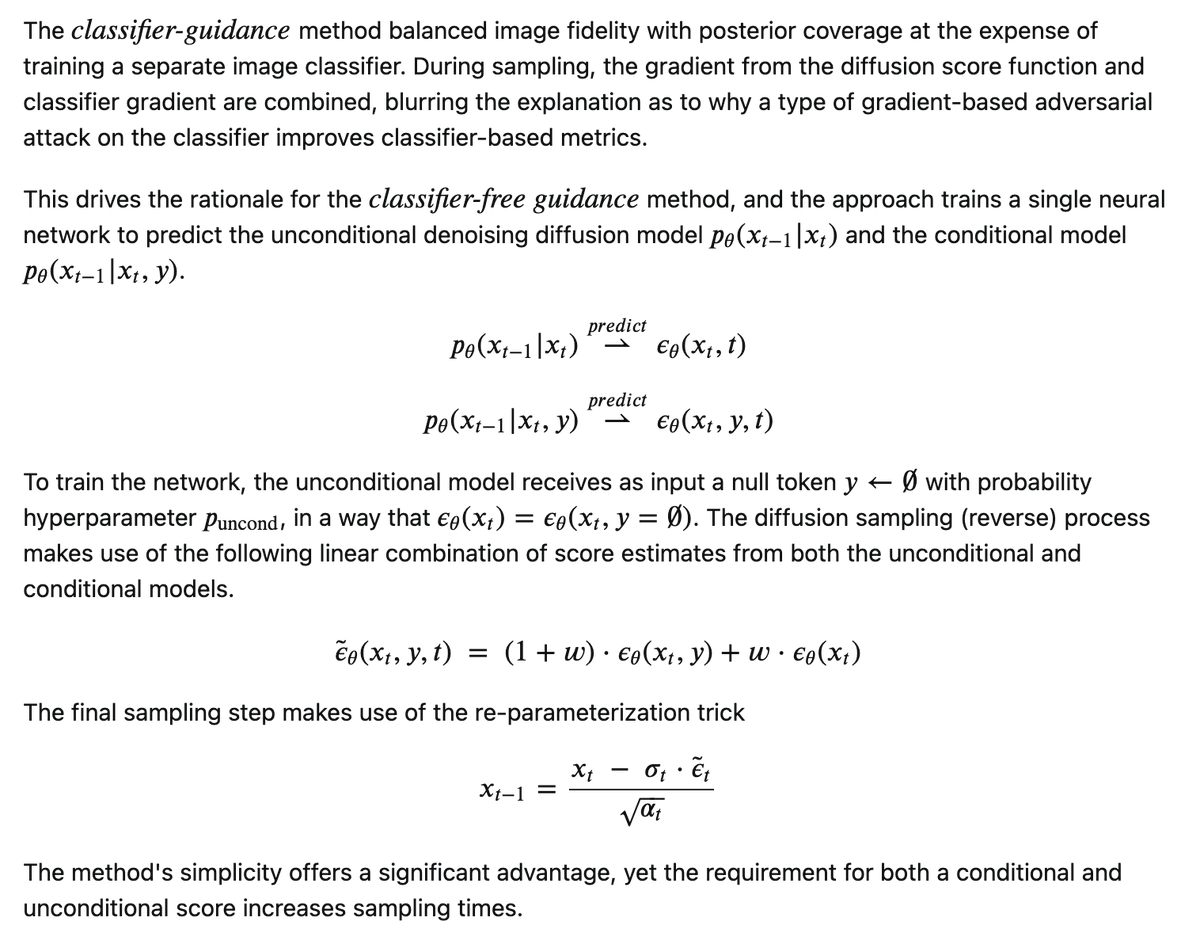

Are you certain a classifier is the best option? @hojonathanho @TimSalimans @mervenoyann @m__dehghani @chenlin_meng We discuss classifier-free guidance for diffusion models and highlight its benefits.

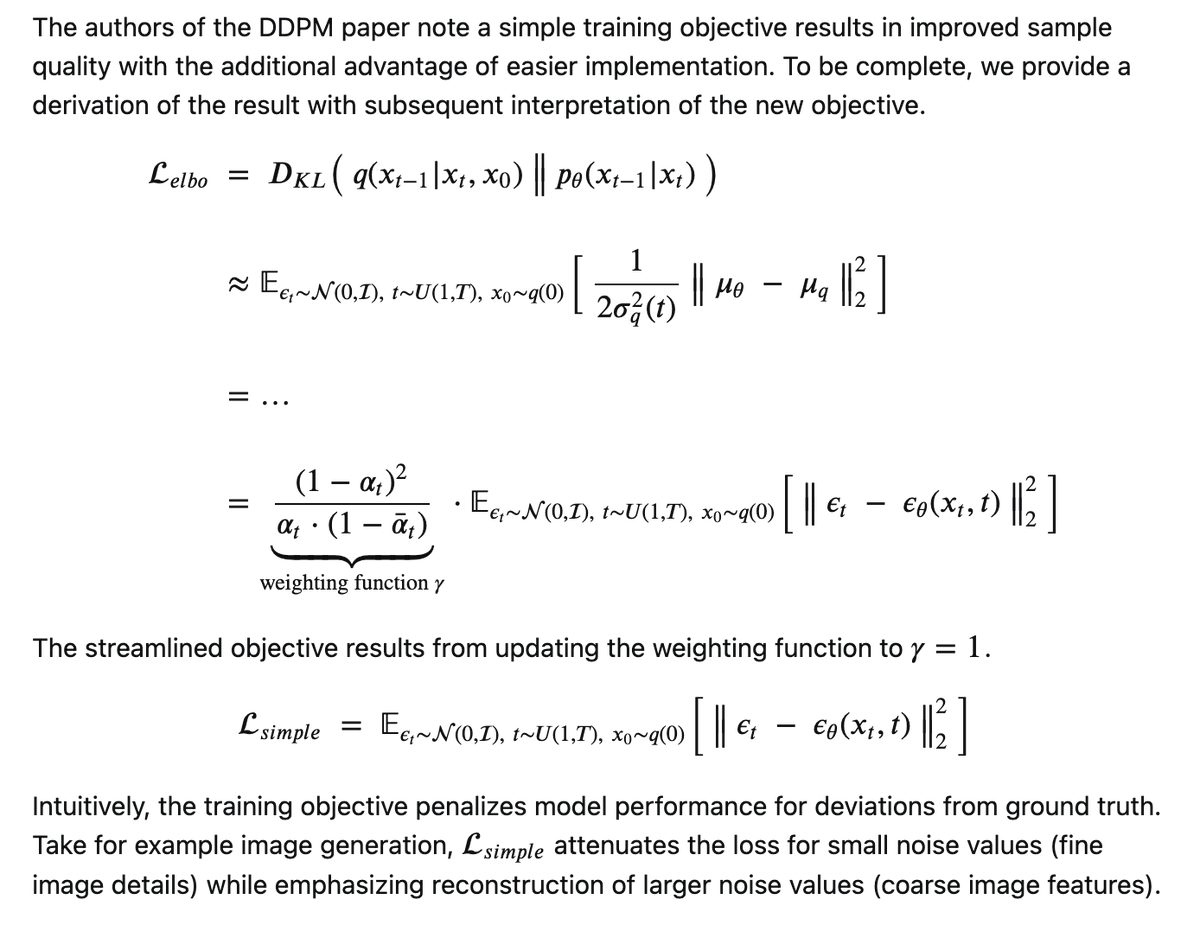
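Classifier-free guidance trains one network both with and without the conditioning (by randomly dropping it during training), then combines the two noise predictions at sampling time. A minimal sketch, assuming both predictions are already computed; note that papers differ on whether the weight is written as w or 1 + w:

```python
# Sketch only: eps_cond / eps_uncond stand in for one network's two predictions.

def cfg_eps(eps_cond, eps_uncond, w):
    """Classifier-free guided noise prediction: (1 + w) * eps_cond - w * eps_uncond.

    w = 0 recovers the plain conditional model; larger w moves the prediction
    further away from the unconditional one, trading diversity for fidelity.
    """
    return [(1.0 + w) * c - w * u for c, u in zip(eps_cond, eps_uncond)]


guided = cfg_eps([1.0, 0.0], [0.0, 0.5], w=2.0)
```

No separate classifier is needed: the difference between the conditional and unconditional predictions plays the role of the classifier gradient.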

Ever wonder why there’s often a mismatch between theory and practice? @ajayj_ @pabbeel @hojonathanho @DavidDuvenaud @jluan We highlight a gap between the training objective that theory prescribes for diffusion models and the one used in practice.

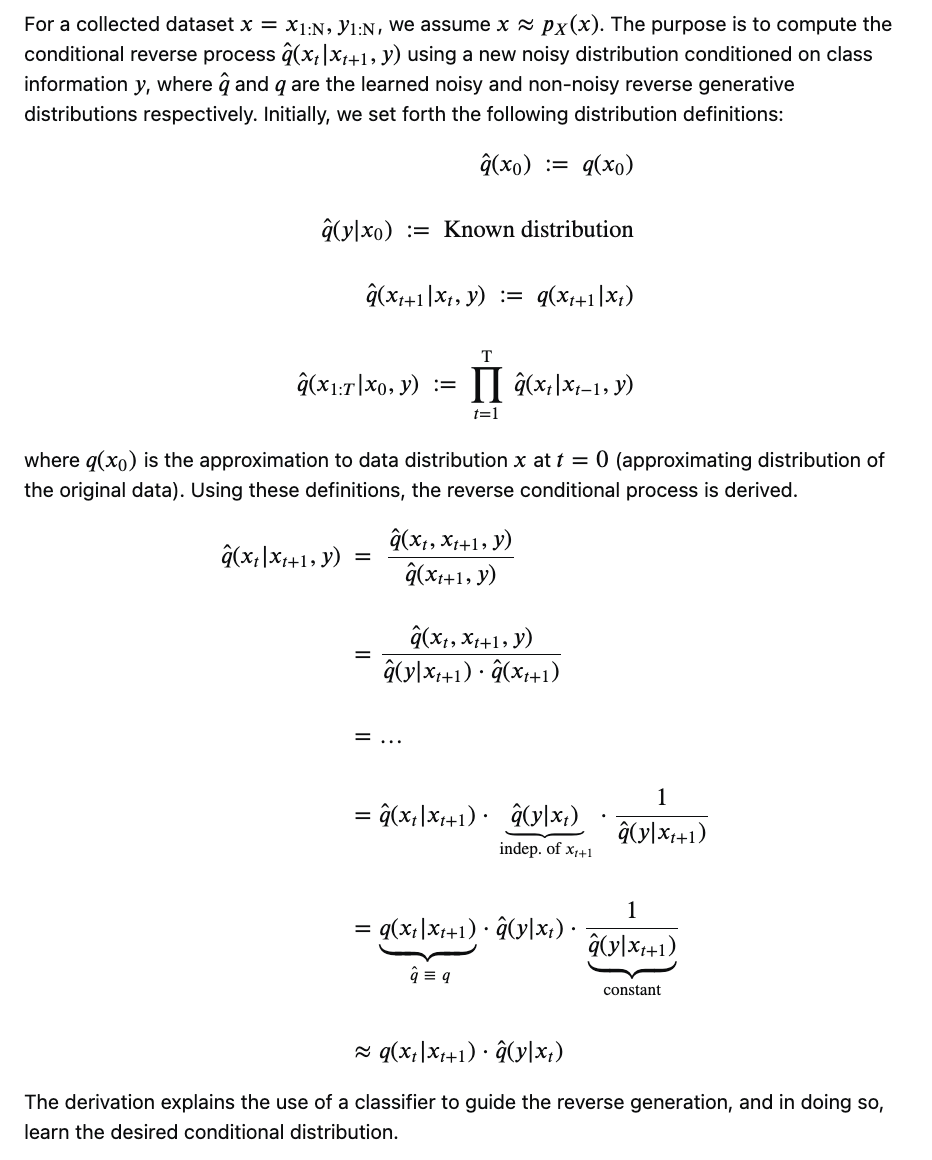
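One well-known gap of this kind is in DDPM: the variational bound weights the noise-prediction error differently at each timestep, while the "simple" loss used in practice sets every weight to 1. A minimal sketch that computes the bound's per-timestep weights for the linear schedule from the DDPM paper, assuming the reverse-process variance is fixed to β_t (the discrete decoder term at t = 1 is ignored):

```python
# Sketch only: assumes sigma_t^2 = beta_t and ignores the t = 1 decoder term.

def linear_betas(T=1000, beta_1=1e-4, beta_T=0.02):
    """Linear beta schedule over T steps (the DDPM default range)."""
    return [beta_1 + (beta_T - beta_1) * t / (T - 1) for t in range(T)]


def elbo_weights(betas):
    """Weight on ||eps - eps_hat||^2 implied by the variational bound at each step:
    w_t = beta_t / (2 * alpha_t * (1 - abar_t)). L_simple replaces every w_t with 1.
    """
    weights, abar = [], 1.0
    for beta in betas:
        alpha = 1.0 - beta
        abar *= alpha
        weights.append(beta / (2.0 * alpha * (1.0 - abar)))
    return weights


w = elbo_weights(linear_betas())
print(f"first: {w[0]:.3f}, last: {w[-1]:.5f}")
```

The bound puts far more weight on the least noisy steps; dropping those weights shifts training effort toward noisier steps, which Ho et al. report gives better sample quality.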

If diffusion is your thing, @TimSalimans @TacoCohen @mustafasuleyman @pika_labs @openai you'll be interested in classifier guidance, a method that uses a classifier's gradients to explicitly control what is generated based on conditioning information.

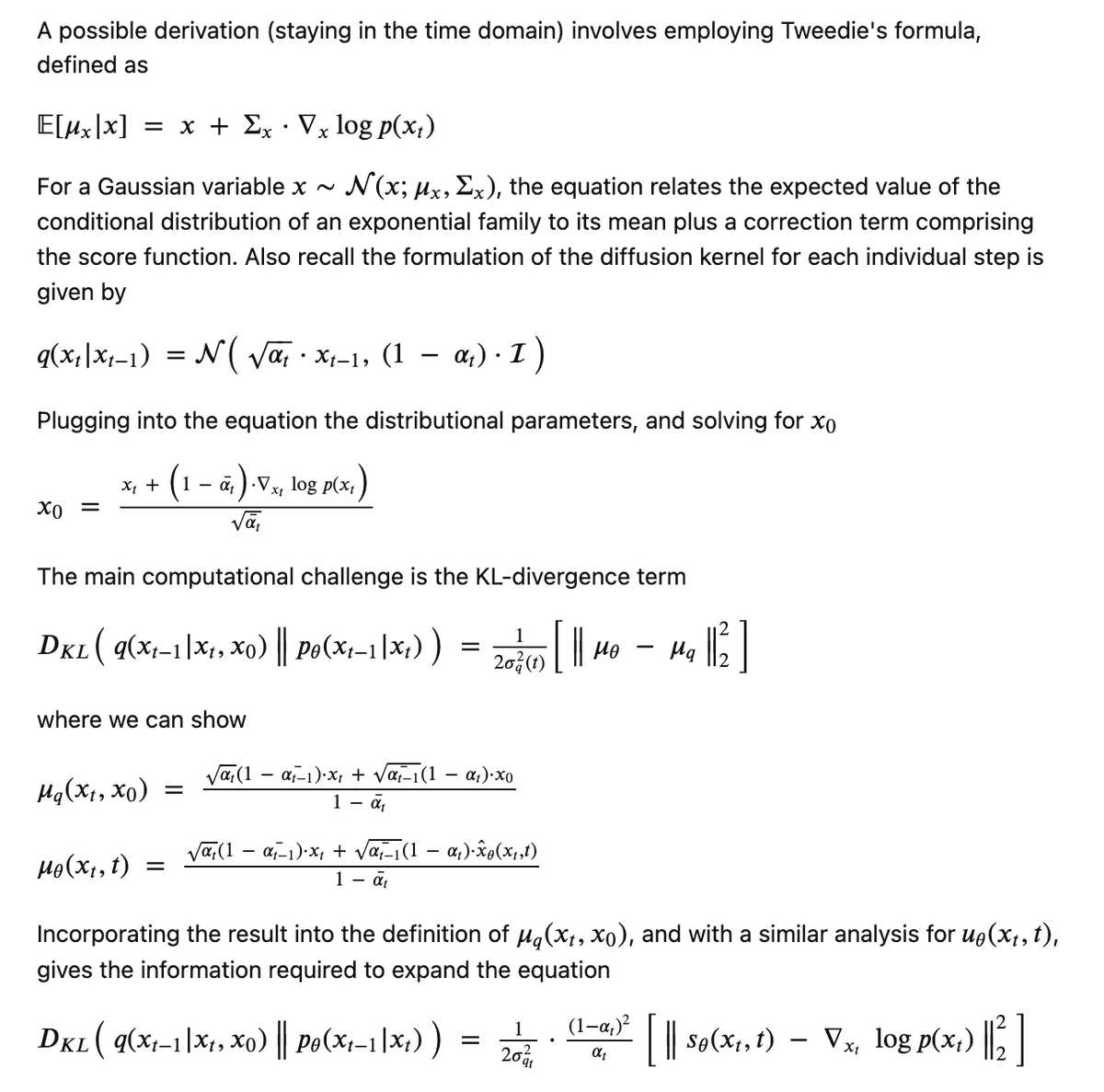
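Classifier guidance adds the gradient of a classifier's log-probability, scaled by a guidance weight, to the model's score. A minimal 1-D sketch, assuming a standard-normal "model" and a hypothetical logistic classifier so both gradients have closed forms; in practice the classifier is trained on noisy inputs and the score comes from the diffusion network:

```python
import math

# Sketch only: toy prior and hypothetical logistic classifier, both in closed form.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def guided_score(x, scale, a=2.0):
    """Prior score plus `scale` times the classifier gradient for label y = 1.

    Prior p(x) = N(0, 1)           -> grad log p(x)         = -x
    p(y = 1 | x) = sigmoid(a * x)  -> grad log p(y = 1 | x) = a * (1 - sigmoid(a * x))
    """
    prior_score = -x
    classifier_grad = a * (1.0 - sigmoid(a * x))
    return prior_score + scale * classifier_grad


unguided = guided_score(0.0, scale=0.0)
steered = guided_score(0.0, scale=3.0)
```

At x = 0 the prior alone exerts no pull, while the guided score points toward the region the classifier labels y = 1.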

Have you wondered why so many distributions in generative AI are modeled as Gaussians? @gabrielpeyre @SchmidhuberAI @charles_irl @JosepSardanyes We examine the rationale in the context of diffusion models. For a better understanding of equation 1, check out my previous post.

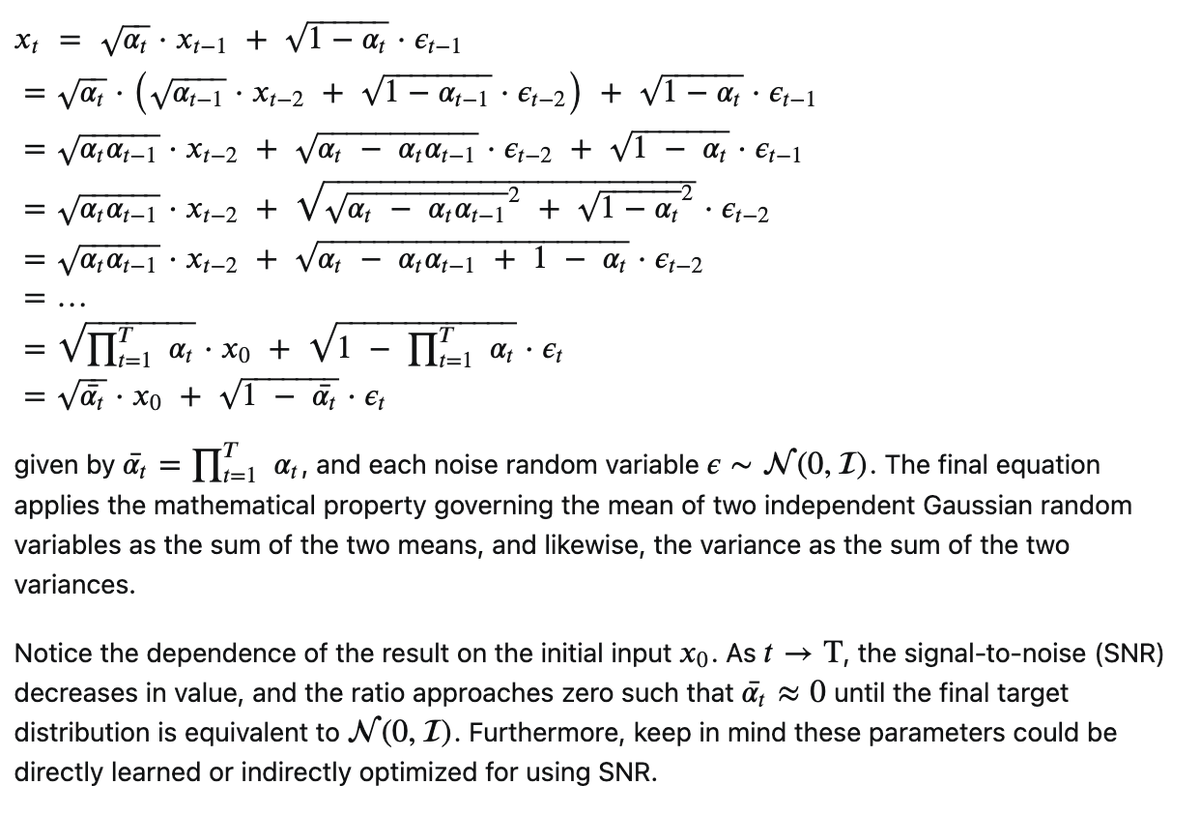

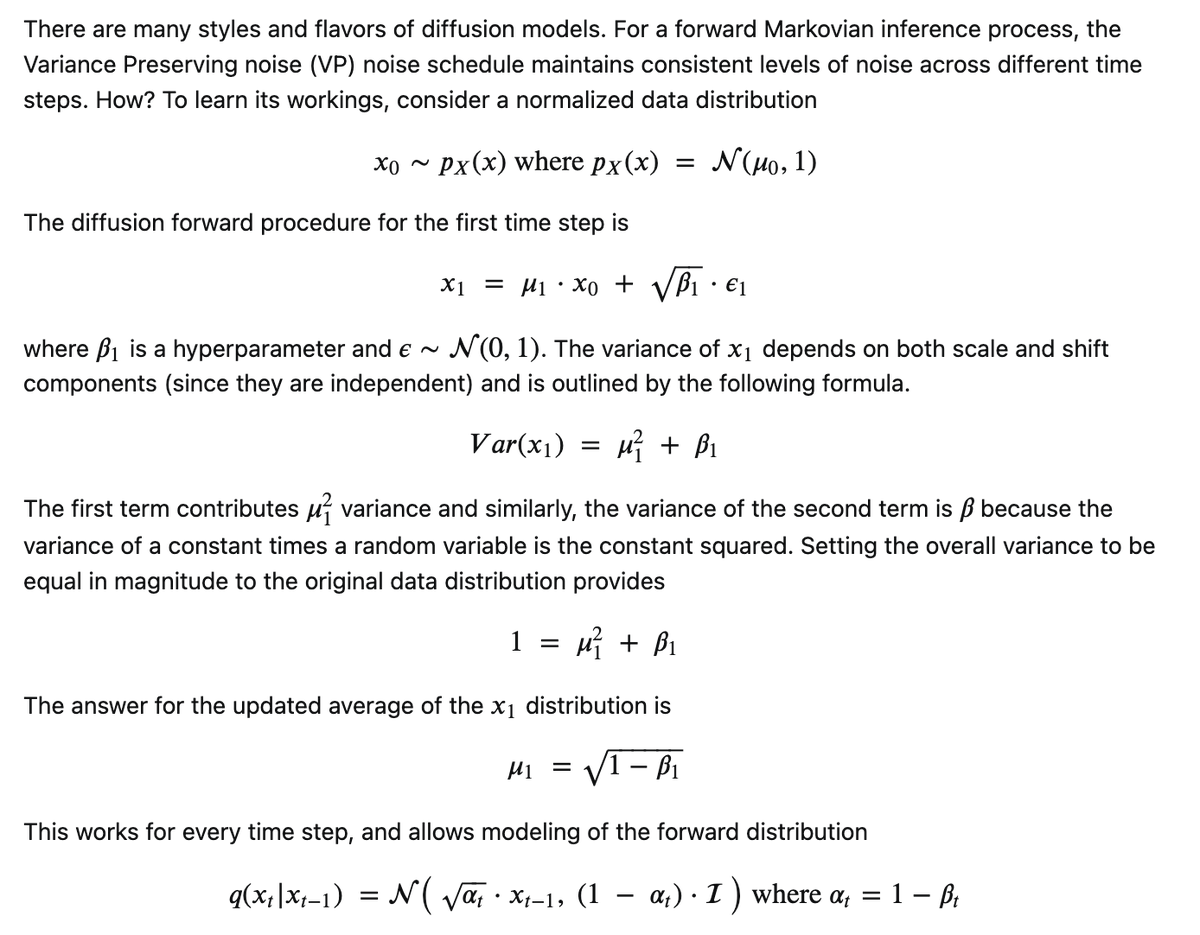

One key ingredient of diffusion models is the noise schedule. @DrShuklaHere @robrombach @Nils_Reimers @_tim_brooks @colinraffel We review the Variance Preserving (VP) procedure.
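Under the VP procedure the signal is scaled down as noise is added, so the marginal variance stays fixed. A minimal sketch, assuming the linear β schedule from DDPM and toy unit-variance scalar data drawn with Python's `random` module:

```python
import math
import random

# Sketch only: toy unit-variance scalar data and the DDPM linear schedule.

def abar_schedule(T=1000, beta_1=1e-4, beta_T=0.02):
    """Cumulative products abar_t = prod_s (1 - beta_s) for a linear beta schedule."""
    abars, prod = [], 1.0
    for t in range(T):
        beta = beta_1 + (beta_T - beta_1) * t / (T - 1)
        prod *= 1.0 - beta
        abars.append(prod)
    return abars


def vp_forward(x0, abar, rng):
    """Sample x_t = sqrt(abar) * x0 + sqrt(1 - abar) * eps with eps ~ N(0, 1).

    The squared coefficients sum to 1, so unit-variance data stays unit-variance.
    """
    return [math.sqrt(abar) * x + math.sqrt(1.0 - abar) * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
abars = abar_schedule()
x0 = [rng.gauss(0.0, 1.0) for _ in range(20000)]
xt = vp_forward(x0, abars[499], rng)
var = sum(v * v for v in xt) / len(xt)  # empirical variance, close to 1
```

By the final step `abar` is nearly zero, so x_T is essentially pure N(0, 1) noise, which is what sampling starts from.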

You might be using a diffusion model without even knowing it. @omerbartal @hila_chefer @talidekel @GoogleAI @rowancheung @hey_madni @CodeByPoonam We highlight the unique features of Lumiere, the new text-to-video generative model.

You've expressed interest, so here it is. @hillbig @shaneguML @umitmertcakmak @jm_alexia @sedielem @jaschasd We outline the logical progression linking the score function to diffusion models.

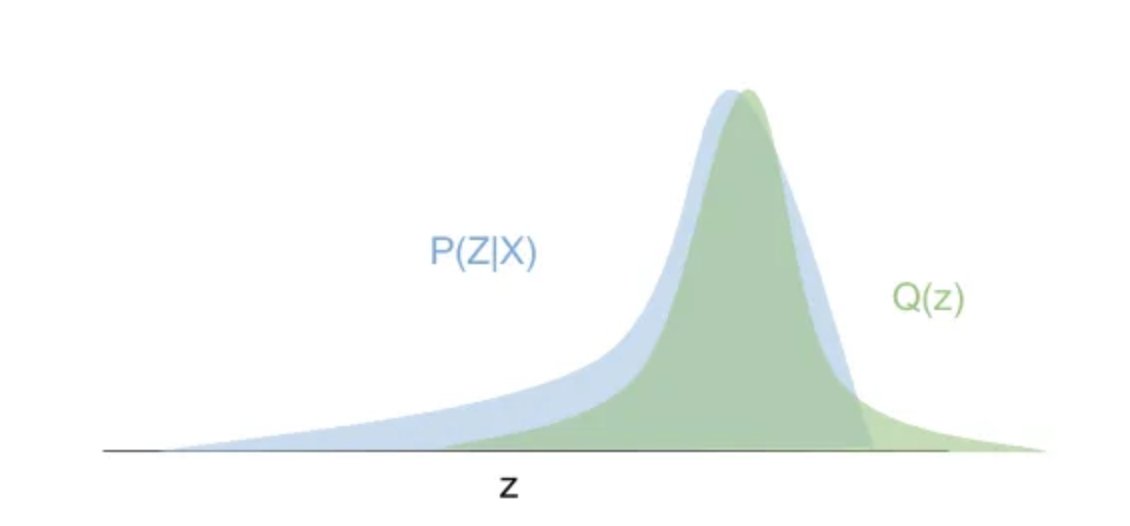
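The link can be seen from the Gaussian perturbation kernel: its score is, up to a known scale, the negative of the noise that was added. So a network trained to predict that noise is implicitly a score estimator. A minimal sketch, assuming the VP forward process x_t = sqrt(abar)·x0 + sqrt(1 − abar)·eps with toy values:

```python
import math

# Sketch only: toy values for x0, eps and abar.

def gaussian_perturbation_score(x_t, x0, abar):
    """Score of q(x_t | x0) = N(sqrt(abar) * x0, (1 - abar) I):
    grad_{x_t} log q = -(x_t - sqrt(abar) * x0) / (1 - abar).
    """
    return [-(xt - math.sqrt(abar) * x) / (1.0 - abar) for xt, x in zip(x_t, x0)]


def eps_from_score(score, abar):
    """Noise and score differ only by a scale: eps = -sqrt(1 - abar) * score."""
    return [-math.sqrt(1.0 - abar) * s for s in score]


abar = 0.64
x0 = [1.0, -2.0]
eps = [0.3, -0.7]
x_t = [math.sqrt(abar) * x + math.sqrt(1.0 - abar) * e for x, e in zip(x0, eps)]
recovered = eps_from_score(gaussian_perturbation_score(x_t, x0, abar), abar)
```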

Looking forward to reading this paper @CIS_Penn! For those curious, we summarize black-box variational inference.

Provably Scalable Black-Box Variational Inference with Structured Variational Families. (arXiv:2401.10989v1 [stat.ML]) ift.tt/It5qFTu
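Black-box variational inference only needs samples from the variational distribution and evaluations of the log joint, so it applies to models without convenient closed-form updates. One classic estimator is the score-function (REINFORCE) gradient of the ELBO. A minimal sketch, assuming a toy 1-D Gaussian target and a Gaussian q with fixed unit variance; it uses no control variates and is not the structured-family method from the paper above:

```python
import math
import random

# Sketch only: hypothetical toy target; no variance reduction.

def log_p(z):
    """Unnormalized log target: N(z; 3, 1)."""
    return -0.5 * (z - 3.0) ** 2


def log_q(z, mu):
    """Log density of the variational family q(z; mu) = N(mu, 1)."""
    return -0.5 * (z - mu) ** 2 - 0.5 * math.log(2.0 * math.pi)


def bbvi_grad(mu, rng, num_samples=200):
    """Score-function estimate of d ELBO / d mu using only samples and log densities."""
    total = 0.0
    for _ in range(num_samples):
        z = rng.gauss(mu, 1.0)
        score = z - mu  # d/dmu log q(z; mu)
        total += score * (log_p(z) - log_q(z, mu))
    return total / num_samples


rng = random.Random(0)
mu = 0.0
for step in range(500):
    mu += 0.05 * bbvi_grad(mu, rng)  # stochastic gradient ascent on the ELBO
```

For this target the exact ELBO gradient is 3 − mu, so the noisy updates settle near mu = 3, the target's mean.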