Alexander Stremitzer

@Stremitzer_Lab

Professor of Law, Economics, and Business at @ETH Zurich. Associated with @ETH_CLE. Interested in #contractdesign, #lawecon, #lawtech

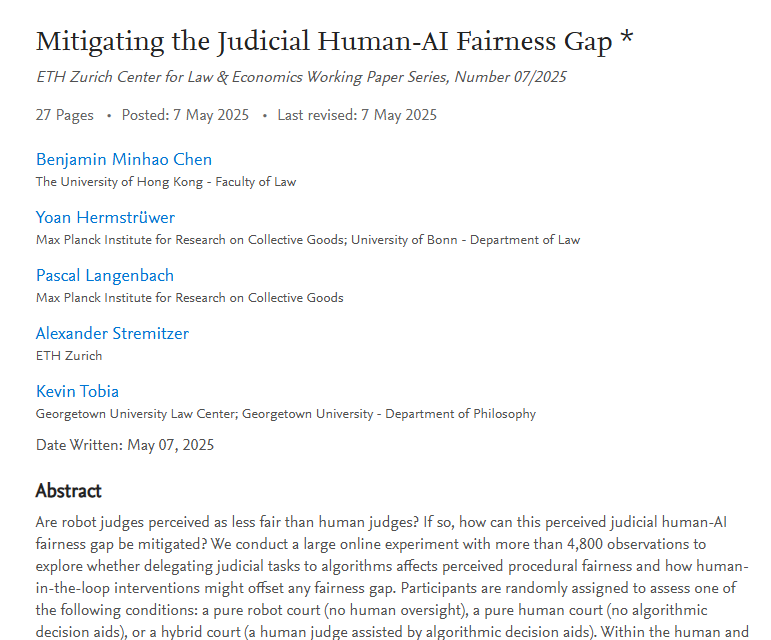

1/6 Are robot judges perceived as less fair than human judges? Yes, but minimal human oversight can eliminate this human-AI fairness gap. Check out our new working paper “Mitigating the Judicial Human-AI Fairness Gap.”

Programs from previous years: ethz.ch/content/dam/et… (@danbjork, @jblumenstock) ethz.ch/content/dam/et… (@m_sendhil) ethz.ch/content/dam/et… (@MelissaLDell) @eth_cle @econ_uzh @ETH_AI_Center @emollick @erikbryn @DAcemogluMIT @Susan_Athey @akorinek @aadukia @jensottoludwig…

Call for Papers: The 4th Annual Zurich Workshop in AI+Economics will be held Dec 5-6, 2025, hosted by ETH Zurich and the University of Zurich. Illuminating keynote to be given by @testingham (OpenAI). Organized with @sergallet @YanagizawaD @joachim_voth Info:…

Introducing an eval dataset of 4,886 law school exam questions. See if your AI can answer them!!

1/5 How good are AI models’ reasoning abilities? We have created LEXam, a legal reasoning benchmark derived from real law exams, available in English and German. @eth_cle @ellliottt @YoanHermstruwer @joelniklaus @OpenAI @GoogleAI @deepseek_ai @AnthropicAI @grok @AIatMeta

Check out LEXam, our new legal reasoning benchmark! Thanks for the great collaboration @NJingwei, Jakob, Etienne, Yang, Yoan, @YinyaHuang, @akhtarmubashara, Florian, Oliver, Daniel, @LeippoldMarkus, @mrinmayasachan, @Stremitzer_Lab, Christoph Engel, @ellliottt, and @joelniklaus!

🚨Time to push LLMs further! 📚LEXam: The legal reasoning benchmark you’ve been waiting for: • 340 exams, 116 courses (EN/DE) • Long-form, process-focused questions • Expert-level LLM Judges • Rich meta for targeted diagnostics • Contamination-proof, extendable MCQs [1/6]🧵