Simon Stepputtis

@SimonStepputtis

Assistant Professor at @virginia_tech. Creating intelligent robots that reason about their environment and the humans within it through hybrid AI 🚀

Thrilled to join @virginia_tech as an assistant professor in @VirginiaTech_ME this fall! At the TEA lab (tealab.ai), we’ll explore hybrid AI systems for efficient and adaptive agents and robots 🤖 Thank you to everyone who has supported me along the way!

📣 Thrilled to announce that I'm on the job market for Fall 2025 faculty positions! I am currently a postdoc @CarnegieMellon @CMU_Robotics. 🔍 My research is dedicated to developing robots that can intelligently reason about their environments and the humans within them. By…

🚀 Excited to share our paper ShapeGrasp: Zero-Shot Object Manipulation with LLMs through Geometric Decomposition at #IROS2024 (Session ThCT3.3)! 🎉 Amazing work by @SamuelLi826114 on leveraging geometric decomposition and LLMs for zero-shot task-oriented grasping. The LLM…

I am excited to announce that we are accepting applications for the Pathways@RSS 2025 Fellowship! 📌 Fellowship for BSc, MSc & early PhD students 📌 Supports first-time RSS/conference attendees 📌 Attend & thrive at #RSS2025 🗓️ Find out more and apply by March 21st:…

🚀 Excited to share our latest work: extending RLAIF to work well with small language models that may produce incorrect rankings! 🚀 Catch our poster session at #EMNLP in Miami next Thursday! 📄 Paper: arxiv.org/pdf/2410.17389 🎥 Presentation: youtu.be/jKKUqB-nf7Y

Have you ever wondered how robots can proactively support a human’s task? 🤖🧑‍🍳 A key aspect of proactive user support is fast action anticipation. ⭐ Our method uses external domain knowledge to reduce response time and selects the most helpful, non-disruptive task. ⏱️🥗 Below,…

Want a robot to assist you in the kitchen **without any instructions** simply by watching you?🤖🏠 🚀 Presenting our recent paper on action anticipation from short video context for human-robot collaboration, accepted at Robotics and Automation Letters (RA-L).

I'm excited to share our latest work, Sigma, an efficient approach to multimodal segmentation that overcomes RGB-only shortcomings such as low light or overexposure by reasoning over thermal and depth information. At the core of our multimodal method, Sigma…

Sigma: Siamese Mamba Network for Multi-Modal Semantic Segmentation

Multi-modal semantic segmentation significantly enhances AI agents' perception and scene understanding, especially under adverse conditions like low-light or overexposed environments. Leveraging additional…

Ever wanted to know how out-of-distribution data will affect a deep neural network's accuracy? @yuzhe_lu is at #NeurIPS2023 this week presenting our paper, which shows how to predict model accuracy given only unlabeled data from the target distribution. Paper: arxiv.org/abs/2305.15640

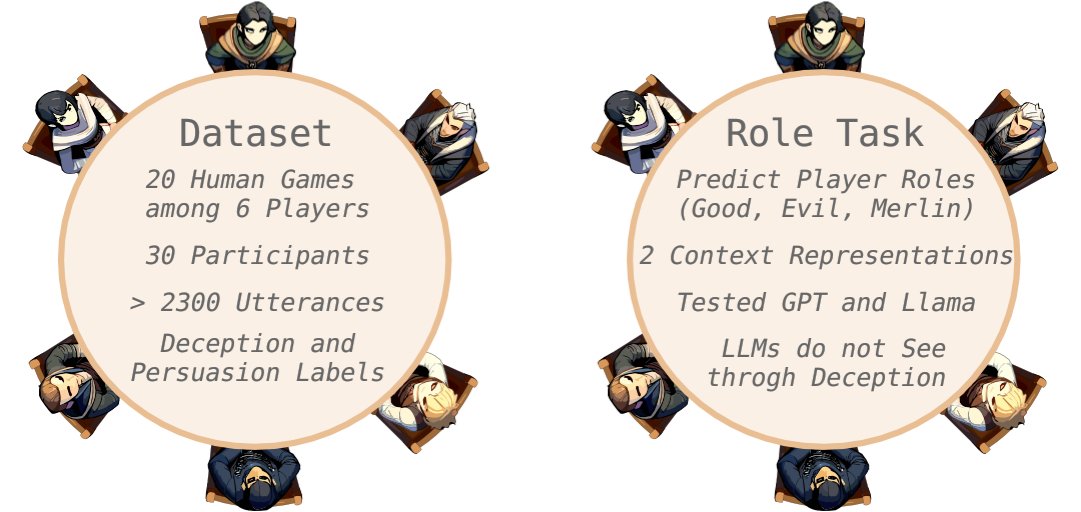

In our latest EMNLP 2023 Findings paper, we delve into the intricate realm of Avalon: The Resistance, a complex social deduction game that requires a nuanced understanding of long-horizon player interactions, their strategic discussions, as well as deception and persuasion…

Please check out our ICCV CVEU workshop paper, "Knowledge-Guided Short-Context Action Anticipation in Human-Centric Videos," in which we use symbolic domain knowledge to alter a transformer's attention mechanism and improve future action prediction. Link: arxiv.org/abs/2309.05943

Please check out our new paper at the IROS-HmRI workshop!

We have a really cool paper at the Human Multi-Robot Interaction workshop today at IROS in which we show how to use LLMs to produce natural language explanations for agent policies. Paper: arxiv.org/pdf/2309.10346…

Today, we are presenting our work "Sample-Efficient Learning of Novel Visual Concepts" at @CoLLAs_Conf 2023. Check out our poster this afternoon at poster 12! Project Website: sarthak268.github.io/sample-efficie…

What if transfer learning in RL could selectively transfer only beneficial knowledge? What if it was interpretable and we knew exactly what was transferred? I have a poster today on exactly this topic at CoLLAs. Check it out: arxiv.org/pdf/2306.12314… #CoLLAs2023

I am excited to announce our talk at #CoLLAs2023 for our paper titled "Sample-Efficient Learning of Novel Visual Concepts"! With our neuro-symbolic architecture, we quickly learn about novel objects, as well as their attributes and affordances. Website: sarthak268.github.io/sample-efficie…

Our workshop on Articulate Robots is happening right now at #RSS2023 in Room 306A and online! More information: sites.google.com/andrew.cmu.edu…

I'm excited to invite #RSS2023 participants to our Workshop on Articulate Robots: Utilizing Language for Robot Learning this Friday from 1:30 to 5:45pm in Room 306A! Join us for an exciting lineup of speakers and fascinating abstracts! Learn more here: sites.google.com/andrew.cmu.edu…

We extended the submission deadline for our #RSS2023 workshop to June 11th! Join us on July 14th at @RoboticsSciSys for our panel of exciting speakers and share your work to discuss the future of articulate robots! More information and submission instructions: sites.google.com/andrew.cmu.edu…

I am happy to announce our #RSS2023 workshop on "Articulate Robots: Utilizing Language for Robot Learning" to be held as a half-day workshop on July 14th in Daegu, Republic of Korea alongside the @RoboticsSciSys conference. Website and Call: sites.google.com/andrew.cmu.edu…