Satyapriya Krishna

@SatyaScribbles

Explorer. Retweets == Lit review

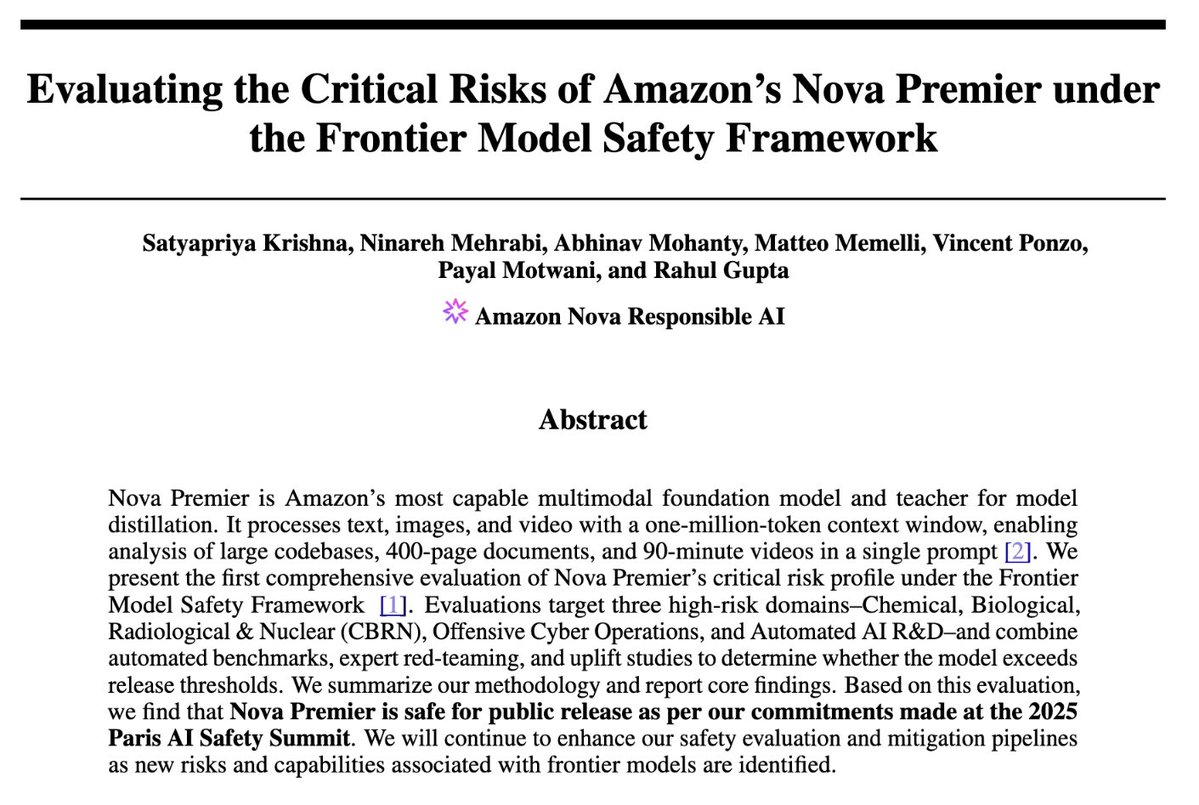

🚨 Excited to share the first frontier risk evaluation report for Amazon Nova Premier: “Evaluating the Critical Risks of Amazon’s Nova Premier under the Frontier Model Safety Framework”! This is the first comprehensive evaluation of Nova Premier’s frontier safety, aligned with…

EXPO is a new method for RL post-training of diffusion policies. With good pre-training, it’s both really stable and ~2x more efficient than the most efficient RL fine-tuning methods I know of. Paper: arxiv.org/abs/2507.07986

Fine-tuning pre-trained robotic models with online RL requires a way to train RL with expressive policies. Can we design an effective method for this? We propose EXPO, a sample-efficient online RL algorithm that enables stable fine-tuning of expressive policy classes (1/6)

Is CoT monitoring a lost cause due to unfaithfulness? 🤔 We say no. The key is the complexity of the bad behavior. When we replicate prior unfaithfulness work but increase complexity—unfaithfulness vanishes! Our finding: "When Chain of Thought is Necessary, Language Models…

My colleague Rupam Mahmood explains from first principles his groundbreaking work on Streaming Deep Reinforcement Learning: youtu.be/QOfkOl9QrZY?si…

Great excuse to share something I really love: 1-Lipschitz nets. They give clean theory, certs for robustness, the right loss for W-GANs, even nicer grads for explainability!! Yet they're still niche. Here’s a speed-run through some of my favorite papers in the field. 🧵👇
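The "certs for robustness" point can be made concrete. A minimal sketch, assuming plain NumPy and a small hypothetical MLP (not the architecture from any paper in the thread): dividing each weight matrix by its spectral norm makes every layer 1-Lipschitz in L2, ReLU is 1-Lipschitz, and a composition of 1-Lipschitz maps stays 1-Lipschitz. For such a logit map, any two logits can move apart by at most √2·‖δ‖₂ under an input perturbation δ, so a top-1 margin m certifies every perturbation with ‖δ‖₂ < m/√2.

```python
import numpy as np

def spectral_normalize(W):
    """Rescale W so its largest singular value (L2 operator norm) is exactly 1."""
    return W / np.linalg.norm(W, ord=2)

def relu(z):
    return np.maximum(z, 0.0)

def lipschitz_mlp(x, layers):
    """Spectrally normalized linear layers + ReLU: the map x -> logits is 1-Lipschitz in L2."""
    h = x
    for W in layers[:-1]:
        h = relu(W @ h)
    return layers[-1] @ h

def certified_radius(logits):
    """L2 radius within which the top-1 class provably cannot change (1-Lipschitz logits)."""
    second, top = np.sort(logits)[-2:]
    return (top - second) / np.sqrt(2.0)

# Hypothetical toy network: 8 inputs -> 16 -> 16 -> 3 classes, random weights.
rng = np.random.default_rng(0)
layers = [spectral_normalize(rng.standard_normal((16, 8))),
          spectral_normalize(rng.standard_normal((16, 16))),
          spectral_normalize(rng.standard_normal((3, 16)))]
x = rng.standard_normal(8)
logits = lipschitz_mlp(x, layers)
r = certified_radius(logits)
print(f"prediction={logits.argmax()}, certified L2 radius={r:.4f}")
```

Normalizing layer by layer gives a valid but often loose global bound; practical 1-Lipschitz nets typically estimate the norm with power iteration or use orthogonal/structured layers to keep the bound tight.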

optimization theorem: "assume a Lipschitz constant L..." the Lipschitz constant:
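In most optimization theorems the assumed L bounds how fast the gradient can change (∇f is L-Lipschitz), and that is what fixes the safe step size: gradient descent with step 1/L is guaranteed to decrease f, while steps beyond 2/L can diverge. A minimal sketch on a hypothetical random quadratic f(x) = ½xᵀAx − bᵀx, where L is simply the largest eigenvalue of A:

```python
import numpy as np

def make_quadratic(n, seed=0):
    """Hypothetical test problem: A symmetric positive definite, b random."""
    rng = np.random.default_rng(seed)
    M = rng.standard_normal((n, n))
    A = M.T @ M + np.eye(n)
    b = rng.standard_normal(n)
    return A, b

def f(x, A, b):
    return 0.5 * x @ A @ x - b @ x

def grad_f(x, A, b):
    return A @ x - b

def gradient_descent(A, b, step, iters):
    """Plain gradient descent from x = 0; returns the final iterate and f at every step."""
    x = np.zeros(len(b))
    values = [f(x, A, b)]
    for _ in range(iters):
        x = x - step * grad_f(x, A, b)
        values.append(f(x, A, b))
    return x, np.array(values)

A, b = make_quadratic(5)
# grad f(x) = Ax - b is L-Lipschitz with L = largest eigenvalue of A
L = np.linalg.eigvalsh(A).max()

x_safe, vals_safe = gradient_descent(A, b, step=1.0 / L, iters=2000)  # within the theorem's assumption
x_big, vals_big = gradient_descent(A, b, step=2.5 / L, iters=50)      # step > 2/L: expected to blow up
print(f"L={L:.3f}  f with 1/L steps: {vals_safe[-1]:.6f}  f with 2.5/L steps: {vals_big[-1]:.3e}")
```

With step 1/L each iteration lowers f by at least ‖∇f‖²/(2L); with 2.5/L the component along the top eigenvector is multiplied by −1.5 every step, so the iterates grow instead of converging.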

4/N Second, IMO submissions are hard-to-verify, multi-page proofs. Progress here calls for going beyond the RL paradigm of clear-cut, verifiable rewards. By doing so, we’ve obtained a model that can craft intricate, watertight arguments at the level of human mathematicians.

We’ve decided to treat this launch as High Capability in the Biological and Chemical domain under our Preparedness Framework, and activated the associated safeguards. This is a precautionary approach, and we detail our safeguards in the system card. We outlined our approach on…

Scaling up RL is all the rage right now, I had a chat with a friend about it yesterday. I'm fairly certain RL will continue to yield more intermediate gains, but I also don't expect it to be the full story. RL is basically "hey this happened to go well (/poorly), let me slightly…
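The post is cut off, but the "this happened to go well (/poorly), let me slightly…" intuition is close to the classic REINFORCE (score-function) policy-gradient update: nudge the log-probability of the sampled action up or down in proportion to how much better or worse than usual the reward was. A minimal sketch, assuming a hypothetical three-armed Gaussian bandit, a softmax policy, and a running-average baseline (none of these details come from the post):

```python
import numpy as np

def softmax(z):
    z = z - z.max()          # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def reinforce_bandit(true_means, steps=5000, lr=0.05, noise=1.0, seed=0):
    """REINFORCE with a running-average baseline on a hypothetical Gaussian bandit."""
    rng = np.random.default_rng(seed)
    logits = np.zeros(len(true_means))
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choice(len(probs), p=probs)       # try something
        r = rng.normal(true_means[a], noise)      # see how it went
        advantage = r - baseline                  # better or worse than usual?
        baseline += 0.05 * (r - baseline)         # track the typical reward
        grad_logp = -probs                        # d log pi(a) / d logits = onehot(a) - probs
        grad_logp[a] += 1.0
        logits += lr * advantage * grad_logp      # make a slightly more (or less) likely next time
    return softmax(logits)

final_probs = reinforce_bandit(np.array([0.0, 1.0, 2.0]))
print(np.round(final_probs, 3))
```

Each update only shifts probability a little toward whatever happened to work, which is the "slightly" in the post; the signal comes entirely from sampled outcomes, not from any model of why they worked.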

🔥 Thrilled to share our new paper accepted at TMLR: "Operationalizing a Threat Model for Red-Teaming LLMs" 🎯 Goes beyond prompt jailbreaks to systematize ALL attack vectors across LLM lifecycle 🛡️ First comprehensive taxonomy based on entry points (training → deployment)…

Operationalizing a Threat Model for Red-Teaming Large Language Models (LLMs) Apurv Verma, Satyapriya Krishna, Sebastian Gehrmann et al. Action editor: Jinwoo Shin. openreview.net/forum?id=sSAp8… #vulnerabilities #attacks #security

xAI spent the same amount of compute on RL as on pretraining? That is insane