Sotiris Anagnostidis

@SAnagnostidis

PhD at ETH Zürich. MLP-pilled 💊. Previously @Meta GenAI, @GoogleDeepMind, @Huawei, @ntua

📣 Anthropic Zurich is hiring again 🇨🇭 The team has been shaping up fantastically over the last months, and I have re-opened applications for pre-training. We welcome applications from anywhere along the "scientist/engineer spectrum". If building the future of AI for the…

🚀 Want to speed up your image and video model inference? Come see our highlight poster at @CVPR: "FlexiDiT: Your Diffusion Transformer Can Easily Generate High-Quality Samples with Less Compute" 📍 Today at 4 PM, ExHall D – Poster #205 🔗 arxiv.org/abs/2502.20126 Work done…

Thrilled to share that our CVPR 2025 paper “Autoregressive Distillation of Diffusion Transformers” (ARD) has been selected as an Oral! ✨ Catch us at CVPR on Saturday, June 14 🗣 Oral Session 4A, 14:00-14:15, Karl Dean Ballroom…

If you are at @iclr_conf, come by my poster tomorrow at 10:00 am! You can find me at Hall 3 + Hall 2B #367. See you there! iclr.cc/virtual/2025/p… #ICLR2025

Everybody gangsta until SDEs work! #ICLR2025 Noise hits every optimizer differently! For SignSGD, adaptivity increases resistance to gradient noise, while AdamW enjoys extreme stability. Plus, AdamW has an exciting new scaling rule! More below👇! arxiv.org/abs/2411.15958

Ever wondered how the loss landscape of Transformers differs from that of other architectures? Or which Transformer components make its loss landscape unique? With @unregularized & @f_dangel, we explore this via the Hessian in our #ICLR2025 spotlight paper! Key insights👇 1/8

🚨 NEW PAPER DROP! Wouldn't it be nice if LLMs could spot and correct their own mistakes? And what if we could do so directly from pre-training, without any SFT or RL? We present a new class of discrete diffusion models, called GIDD, that are able to do just that: 🧵1/12

Only a few more days to apply to our amazing PhD program! imprs.is.mpg.de/application

Updated camera-ready version: arxiv.org/abs/2405.19279. New results include:
- non-diagonal preconditioners (SOAP/Shampoo) minimise OFs compared to diagonal ones (Adam/AdaFactor)
- scaling to 7B params
- showing that our methods for reducing OFs translate into easier int8 post-training quantisation (PTQ)
Check it out!

Outlier Features (OFs) aka “neurons with big features” emerge in standard transformer training & prevent the benefits of quantisation 🥲 but why do OFs appear, and which design choices minimise them? Our new work (+@lorenzo_noci @DanielePaliotta @ImanolSchlag T. Hofmann) takes a look 👀🧵

Are LLMs easily influenced? Interesting work from @SAnagnostidis and @jannis1. TL;DR: having an LLM advocate for an answer to a question in the prompt significantly influences predictions.

We’re presenting our work on concept guidance at today's 13:30 ICML poster session (#706). Come by and say hi! #ICML #ICML2024

🚨📜 Announcing our latest work on LLM interpretability: We are able to control a model's humor, creativity, quality, truthfulness, and compliance by applying concept vectors to its hidden neural activations. 🧵 arxiv.org/abs/2402.14433

Join us today at 13:30 at #ICML to learn how to navigate across scaling laws and how to accelerate your training! Poster #1007

Scaling laws predict the minimum required amount of compute to reach a given performance, but can we do better? Yes, if we allow for a flexible "shape" of the model! 🤸

The University of Basel, Switzerland, is offering an open-rank Professorship in AI and Foundation Models. For more information, visit this link: jobs.unibas.ch/offene-stellen….

To try it out yourself and for technical implementation details, check out our HF space and GitHub. 🤗 Demo: huggingface.co/spaces/dvruett… 👾 Code: github.com/dvruette/conce…

🚨 Calling all FABRIC users! We need your help to learn how you’ve been using FABRIC. Help us by taking 5 minutes to fill out the survey. Haven’t tried FABRIC yet? Just try it with our Gradio demo! ✨👨‍🎨 📊 Survey: forms.gle/aMWLDW8xvyhkLb… 👾 Demo:…

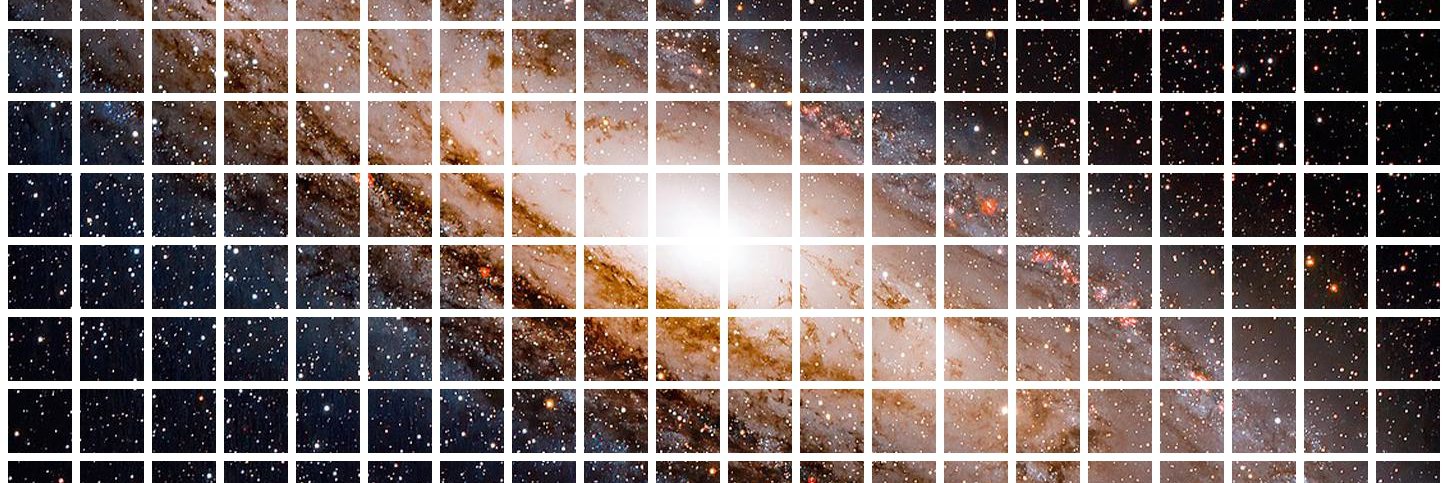

🚨📜 Announcing FABRIC, a training-free method for using iterative feedback to improve the results of any Stable Diffusion model. Instead of spending hours finding the right prompt, just click 👍/👎 to tell the model exactly what you want. 🤗 Demo: huggingface.co/spaces/dvruett…

LIME: Localized Image Editing via Attention Regularization in Diffusion Models paper page: huggingface.co/papers/2312.09… Diffusion models (DMs) have gained prominence due to their ability to generate high-quality, varied images, with recent advancements in text-to-image generation.…

I’ll be presenting "Scaling MLPs" at #NeurIPS2023, tomorrow (Wed) at 10:45am! Hyped to discuss things like inductive bias, the bitter lesson, compute-optimality and scaling laws 👷⚖️📈

Attending #NeurIPS2023 in New Orleans this week to present OpenAssistant (arxiv.org/abs/2304.07327)! Happy to chat about open-source LLMs, personalized image generation, and more. DMs are open!