Rami Ben-Ari

@RamiBenAri1

Principal Research Scientist

Check out the full paper: 📄 Paper: arxiv.org/abs/2503.18033 🌐 Project page: dvirsamuel.github.io/omnimattezero.…

Key Achievements:
✅ Removes and extracts objects together with their effects (shadows, reflections)
✅ Top background reconstruction accuracy across benchmarks
✅ Fastest Omnimatte method – 24 FPS on an A100 GPU
✅ No training or optimization required

Our method leverages self-attention maps of video diffusion models to capture motion cues, enabling objects to be removed together with their effects. This works because elements that move together are inherently linked, as described by the common fate principle in Gestalt psychology. 🚀
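As a rough illustration of the common-fate idea, the sketch below expands an object mask to the tokens that the object's own tokens attend to strongly, such as a shadow region. It is a toy under stated assumptions: `effect_mask_from_attention`, the row averaging, and the 0.5 threshold are hypothetical choices, and the small hand-written matrix stands in for a real video diffusion model's spatio-temporal self-attention map. It is not the paper's actual procedure.

```python
import numpy as np

def effect_mask_from_attention(attn, obj_mask, thresh=0.5):
    """Expand an object mask with tokens the object's tokens attend to strongly.

    attn:     (N, N) row-stochastic self-attention map (rows = queries, cols = keys).
              In a video model, the N tokens would span frames and spatial positions.
    obj_mask: (N,) boolean mask of the tokens covering the object.
    thresh:   cut-off on the normalised attention score, in [0, 1] (hypothetical value).
    """
    # Average attention from all object query tokens to every key token.
    score = attn[obj_mask].mean(axis=0)
    # Min-max normalise so the threshold does not depend on the attention scale.
    score = (score - score.min()) / (score.max() - score.min() + 1e-8)
    # Keep the object itself plus strongly associated tokens (e.g. its shadow).
    return obj_mask | (score >= thresh)

# Toy example with 6 tokens: 0-1 are the object, 2 is its shadow, 3-5 are background.
attn = np.full((6, 6), 1.0 / 6.0)
attn[:2] = [0.3, 0.3, 0.3, 0.04, 0.03, 0.03]
obj_mask = np.array([True, True, False, False, False, False])
mask = effect_mask_from_attention(attn, obj_mask)
```

Here the shadow token is picked up only because the object tokens attend to it as strongly as to themselves. Tokens that receive weak attention stay in the background.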

Our approach: We adapt zero-shot image inpainting for video by directly manipulating the spatio-temporal latent space of pre-trained video diffusion models.
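One common way to do zero-shot inpainting with a diffusion model is to re-impose the known content at every denoising step. Outside the mask, the generated latent is replaced by a noised copy of the original latent. The sketch below applies that generic trick (as used in RePaint or Blended Latent Diffusion) to a spatio-temporal video latent of shape (frames, channels, height, width). The function name, shapes, and the `alpha_bar_t` schedule value are illustrative assumptions, not OmnimatteZero's exact update.

```python
import numpy as np

def blend_known_region(z_gen, z0_known, mask, alpha_bar_t, rng):
    """Combine generated and known video latents at one denoising step.

    z_gen:       (T, C, H, W) latent proposed by the denoiser at step t.
    z0_known:    (T, C, H, W) clean latent of the input video.
    mask:        (T, 1, H, W), 1 where content must be generated (the hole),
                 0 where the original video is kept; broadcasts over C.
    alpha_bar_t: cumulative noise-schedule coefficient for step t, in (0, 1].
    """
    # Forward-diffuse the known latent to the same noise level as z_gen.
    noise = rng.standard_normal(z0_known.shape)
    z_known_t = np.sqrt(alpha_bar_t) * z0_known + np.sqrt(1.0 - alpha_bar_t) * noise
    # Keep generated content inside the hole and known content everywhere else.
    return mask * z_gen + (1.0 - mask) * z_known_t

# Usage on random toy latents: 4 frames, 2 channels, 3x3 spatial grid.
rng = np.random.default_rng(0)
T, C, H, W = 4, 2, 3, 3
z_gen = rng.standard_normal((T, C, H, W))
z0 = rng.standard_normal((T, C, H, W))
mask = np.zeros((T, 1, H, W))
mask[:, :, 1, 1] = 1.0  # the same hole (center position) in every frame
out = blend_known_region(z_gen, z0, mask, alpha_bar_t=1.0, rng=rng)
```

Because the mask spans all frames at once, the blending acts on the whole spatio-temporal latent rather than frame by frame. This is one simple way to avoid treating each frame as an independent image.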

Why is this non-trivial?
🔹 Zero-shot image inpainting fails on videos due to temporal inconsistencies
🔹 Object inpainting must also remove shadows, reflections, and other visual effects
🔹 Existing video inpainting methods struggle with high-fidelity background reconstruction

The challenge: Omnimatte methods decompose videos into background and foreground layers, but current approaches are either computationally heavy due to per-video optimization or reliant on curated training datasets. Can we achieve high-quality, real-time Omnimatte without training?

🎬 We propose a training-free Omnimatte method that can remove objects along with their footprint (shadows, reflections) and seamlessly blend them into a different video. It achieves SoTA results in real time using only a pre-trained video diffusion model, without any optimization.
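Once an object and its effects have been extracted as a foreground layer with an alpha matte, inserting it into another video can be sketched with standard "over" alpha compositing, applied frame by frame. This is a minimal sketch assuming a layer made of per-pixel color plus non-premultiplied alpha in [0, 1] and a target video of the same size. `composite_over` is a hypothetical helper, and any additional refinement the paper may apply for seamless blending is not shown.

```python
import numpy as np

def composite_over(fg_rgb, fg_alpha, bg_rgb):
    """Composite a foreground layer over a background video.

    fg_rgb:   (T, H, W, 3) foreground colors in [0, 1].
    fg_alpha: (T, H, W, 1) per-pixel opacity in [0, 1] (object plus shadow/reflection).
    bg_rgb:   (T, H, W, 3) new background video in [0, 1].
    """
    # Standard "over" operator with non-premultiplied alpha.
    return fg_alpha * fg_rgb + (1.0 - fg_alpha) * bg_rgb

# Toy usage: a dark object with a semi-transparent shadow over a white video.
T, H, W = 2, 4, 4
fg = np.zeros((T, H, W, 3))   # dark object/shadow color
bg = np.ones((T, H, W, 3))    # white target video
alpha = np.zeros((T, H, W, 1))
alpha[:, 1:3, 1:3] = 1.0      # opaque object
alpha[:, 3, 1:3] = 0.5        # semi-transparent shadow row below it
out = composite_over(fg, alpha, bg)
```

The partial alpha is what carries an effect such as a shadow. It darkens the new background without fully replacing it.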

🚀 Excited to share OmnimatteZero: Training-Free Real-Time Omnimatte with Video Diffusion Models! 📄 Paper: arxiv.org/abs/2503.18033 🌐 Project: dvirsamuel.github.io/omnimattezero.… 🧵👇

Happy to share that we have two papers accepted to #ICLR2025:
1. Effective Foundation based Visual Place Recognition arxiv.org/abs/2405.18065
2. Guided Newton-Raphson Diffusion Inversion Paper: arxiv.org/abs/2312.12540 🔗 Project page: barakmam.github.io/rnri.github.io/

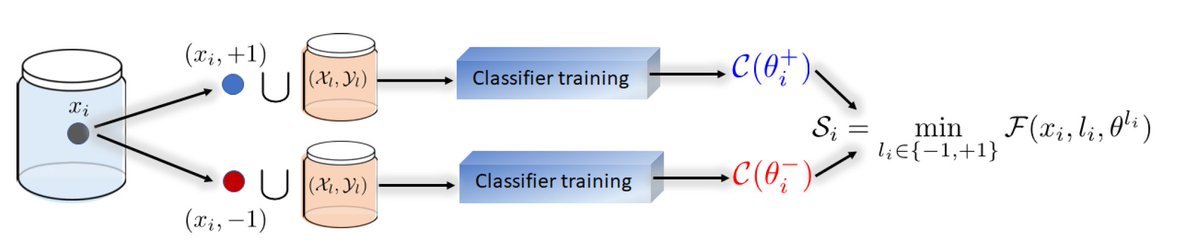

Excited to share that our paper, “Active Learning via Classifier Impact and Greedy Selection for Interactive Image Retrieval”, has been accepted to TMLR! TMLR: openreview.net/pdf?id=b68QOen… Project Page: github.com/barleah/Greedy… Short Video Presentation: youtu.be/bHDARDpu8Fg

Our latest paper, “Regularized Newton-Raphson Inversion for Text-to-Image Models,” introduces RNRI, a fast and precise method for inverting images to their noise latents.

🔍 RNRI highlights:
- Enables super-fast editing of real images.
- Improves the generation of rare concepts.
- Solves inversion as a root-finding problem of an implicit equation.
- Uses the Newton-Raphson numerical scheme for rapid convergence.
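To make the root-finding view concrete: exact DDIM-style inversion needs a latent z that satisfies an implicit equation of the form z = f(z), where f involves the denoiser evaluated at z itself. Rewriting it as r(z) = z - f(z) = 0 lets Newton-Raphson iterate z ← z - J_r(z)^{-1} r(z), which converges quadratically near the root. The sketch below solves such an equation for a toy, hypothetical f (a constant plus 0.5·tanh(z)) in place of a diffusion network. RNRI's regularization term and its handling of the network's Jacobian are not reproduced here.

```python
import numpy as np

def newton_solve(residual, jacobian, z0, tol=1e-10, max_iter=50):
    """Find z with residual(z) = 0 using Newton-Raphson.

    Returns the solution and the number of Newton steps taken.
    """
    z = np.asarray(z0, dtype=float).copy()
    for step in range(max_iter):
        r = residual(z)
        if np.linalg.norm(r) < tol:
            return z, step
        # Solve J(z) dz = r instead of forming the inverse explicitly.
        z = z - np.linalg.solve(jacobian(z), r)
    return z, max_iter

# Toy stand-in for the implicit inversion equation z = f(z).
c = np.array([0.3, -1.2, 2.0])  # plays the role of the known, less-noisy latent

def f(z):
    # Hypothetical map; a real inversion would call the denoiser here.
    return c + 0.5 * np.tanh(z)

def residual(z):
    return z - f(z)

def jacobian(z):
    # d/dz [z - c - 0.5 tanh(z)] is diagonal for this element-wise toy f.
    return np.eye(z.size) - np.diag(0.5 * (1.0 - np.tanh(z) ** 2))

z_star, steps = newton_solve(residual, jacobian, z0=c)  # initialise at the known latent
```

Starting from the known latent mirrors the usual DDIM approximation. The Newton steps then correct it until the implicit equation holds, typically within a handful of iterations for a well-behaved f.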

🚀 High-quality inversion of text-to-image models in real time! Now you can do interactive image editing! 🎨 📄 Paper: arxiv.org/abs/2312.12540 🌐 Project Page & Demo: barakmam.github.io/rnri.github.io/

2. Generating images of rare concepts using pre-trained diffusion models Dvir Samuel, Rami Ben-Ari, Simon Raviv, Nir Darshan, Gal Chechik arxiv.org/abs/2304.14530

1. Data Roaming and Early Fusion for Composed Image Retrieval Matan Levy, Rami Ben-Ari, Nir Darshan, Dani Lischinski arxiv.org/abs/2303.09429

AAAI 2024 is over! Have a look at the two papers we presented there: (1) on image retrieval and (2) on text-to-image generation.

Well done, Dvir!

I am happy to share that our paper "Norm-guided latent space exploration for text-to-image generation" has been accepted to @NeurIPSConf 2023. Paper: arxiv.org/abs/2306.08687 Code: github.com/dvirsamuel/See… Big thanks to @GalChechik, @ramibenari, @HaggaiMaron and Nir Darshan.