Observatory on Social Media

@OSoMe_IU

Research center at Indiana Univ studying the spread of misinformation. Tweets by OSoMe Team, @[email protected], @fil.bsky.social, @[email protected]

The Observatory on Social Media (OSoMe, pronounced awe•some) is a research center at Indiana University bringing technologists and journalists together to study and counter the spread of misinformation on social media. Our lab brings you tools like @Botometer, Hoaxy & BotSlayer.

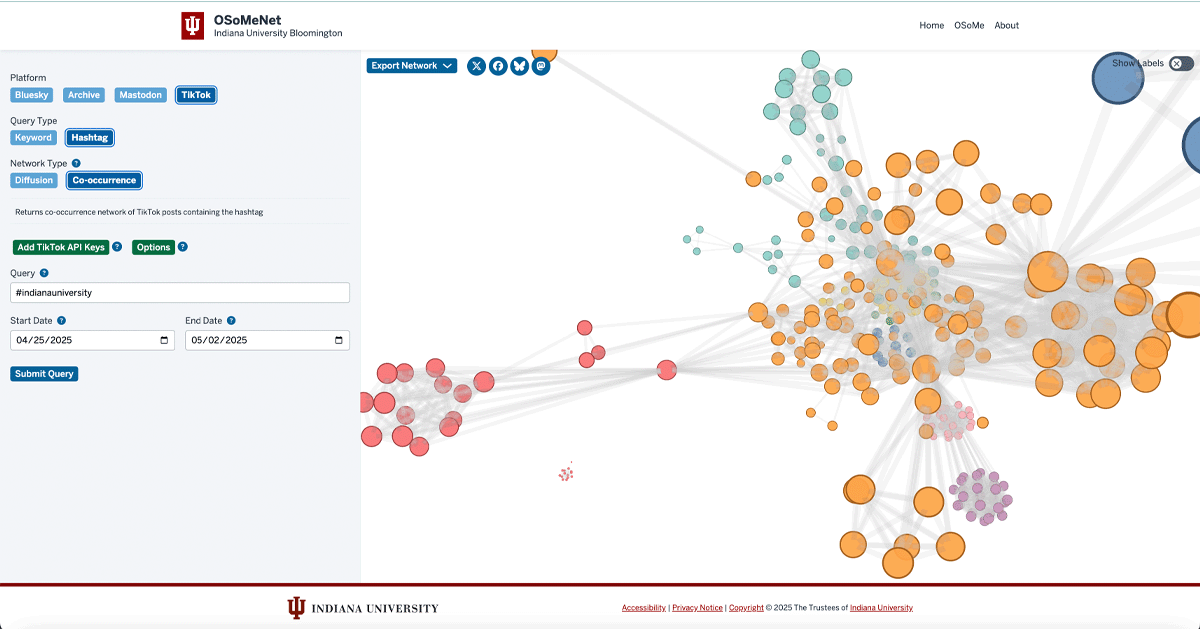

🎉 After 8 amazing years, Hoaxy is retiring! Its legacy lives on in OSoMeNet—our new, unified tool for social media research. Thank you for supporting Hoaxy! 🙌 osome.iu.edu/tools/osomenet/

Can you trust climate information? How and why powerful players are misleading the public theconversation.com/can-you-trust-…

Are you an early-career Italian computer scientist working in the US or Canada? Apply for the ISSNAF 2025 MARIO GERLA AWARD! 🧪 (Spread the word, please!) issnaf.org/2025-young-inv…

Most Americans believe misinformation is a problem — federal research cuts will only make the problem worse theconversation.com/most-americans…

AI Chatbots Are Making LA Protest Disinformation Worse wired.com/story/grok-cha…

The AI Slop Fight Between Iran and Israel 404media.co/the-ai-slop-fi…

The human response to the climate crisis is being obstructed and delayed by the production and circulation of misleading information about the nature of climate change and the available solutions. ipie.info/research/sr202…

New preprint: How Malicious AI Swarms Can Threaten Democracy 🧪 osf.io/preprints/osf/…

Congress is on the verge of passing a dangerous proposal: a 10-year ban on protecting families from AI harms. Help protect our kids and stop this nightmarish proposal by acting now: p2a.co/ZWfY1cP

When the government cancels your research grant, here’s what you can do nature.com/articles/d4158…

Our team just launched three new tools to help you explore social media data:
🌉 NewsBridge – Adds AI-powered context to Facebook posts
🔎 Barney’s Tavern – Search 34B social media posts
🌎 OSoMeNet – Visualize how info spreads across platforms
osome.iu.edu/research/blog/…

A clear analysis of the manufactured controversy that wrongly equates fighting misinformation with censorship: How to Address Misinformation—Without Censorship time.com/7282640/how-to…

All of this work was done by my wonderful co-authors! @moondark, @btrantruong, @clearingkimsy, Fan Huang, Nick Liu, Alessandro Flammini, Filippo Menczer, @OSoMe_IU

Note that a toxic post is defined as one with a toxicity score above 0.5 from the OpenAI moderation endpoint. platform.openai.com/docs/guides/mo…
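
A minimal sketch of this threshold rule, assuming each post already carries a single toxicity score in [0, 1]. How that score is derived from the moderation endpoint's output is not stated here, so no API call is made. The names `is_toxic`, `toxic_share` and `TOXICITY_THRESHOLD` are hypothetical.

```python
# Hypothetical helpers illustrating the "toxicity score > 0.5" rule.
# Assumes scores were already obtained from a moderation model; no API calls here.

TOXICITY_THRESHOLD = 0.5  # a post is toxic only if its score is strictly greater


def is_toxic(score: float, threshold: float = TOXICITY_THRESHOLD) -> bool:
    """Return True if the score strictly exceeds the threshold."""
    return score > threshold


def toxic_share(scores: list[float], threshold: float = TOXICITY_THRESHOLD) -> float:
    """Fraction of posts classified as toxic; 0.0 for an empty list."""
    if not scores:
        return 0.0
    return sum(is_toxic(s, threshold) for s in scores) / len(scores)


scores = [0.02, 0.51, 0.50, 0.93]
print([is_toxic(s) for s in scores])  # [False, True, False, True]
print(toxic_share(scores))  # 0.5
```

Note that a score of exactly 0.5 is not counted as toxic, because the rule is a strict "greater than".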

As Bluesky continues to mature, influential accounts are emerging, posing familiar risks of misinformation, abuse and toxicity on the platform. Understanding these dynamics can help inform effective governance and moderation strategies moving forward.

Our analysis reveals that Bluesky rapidly developed a dense, highly clustered network structure. This "friend-of-a-friend" connectivity, characterized by strong hubs, enables swift and viral information diffusion, similar to established platforms such as Twitter/X and Weibo.
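
To make "highly clustered" concrete: one standard measure is the average local clustering coefficient, the fraction of each node's neighbor pairs that are themselves connected, averaged over all nodes. The sketch below computes it for a small, hypothetical undirected graph stored as adjacency sets. It illustrates the general metric and is not necessarily the exact measure used in this analysis.

```python
from itertools import combinations


def local_clustering(adj: dict[str, set[str]], node: str) -> float:
    """Fraction of pairs of the node's neighbors that are linked to each other."""
    neighbors = adj[node]
    k = len(neighbors)
    if k < 2:
        return 0.0  # conventionally 0 for nodes with fewer than two neighbors
    links = sum(1 for u, v in combinations(neighbors, 2) if v in adj[u])
    return links / (k * (k - 1) / 2)


def average_clustering(adj: dict[str, set[str]]) -> float:
    """Mean local clustering coefficient over all nodes."""
    return sum(local_clustering(adj, n) for n in adj) / len(adj)


# Hypothetical toy graph: a triangle (a, b, c) plus a node d attached only to a.
edges = [("a", "b"), ("b", "c"), ("a", "c"), ("a", "d")]
adj: dict[str, set[str]] = {}
for u, v in edges:
    adj.setdefault(u, set()).add(v)
    adj.setdefault(v, set()).add(u)

print(average_clustering(adj))  # (1/3 + 1 + 1 + 0) / 4, about 0.583
```

In practice, NetworkX provides `networkx.average_clustering` for the same quantity on large graphs.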

Despite initial bursts and subsequent declines in total and average activity, Bluesky settled into a stable level of daily engagement: after the US elections, around 15% of users were active, matching the inactivity rate on X/Twitter (~85% lurkers).
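
The 15% / 85% split is simple arithmetic. The daily active share is the number of distinct users with any activity on a given day divided by the total user base, and the lurker share is its complement. A minimal sketch, assuming a hypothetical activity log of `(day, user_id)` pairs and a known total user count. The function name and data are illustrative only.

```python
from collections import defaultdict


def daily_active_share(events: list[tuple[str, str]], total_users: int) -> dict[str, float]:
    """Map each day to the fraction of all users who were active on that day."""
    active: dict[str, set[str]] = defaultdict(set)
    for day, user in events:
        active[day].add(user)  # a user counts once per day, however many posts they make
    return {day: len(users) / total_users for day, users in active.items()}


# Hypothetical log: 20 registered users, 3 distinct users active on one day.
events = [
    ("2024-11-10", "u1"),
    ("2024-11-10", "u2"),
    ("2024-11-10", "u1"),
    ("2024-11-10", "u3"),
]
share = daily_active_share(events, total_users=20)
print(share)  # {'2024-11-10': 0.15}
lurker_share = 1 - share["2024-11-10"]  # complement: users with no activity that day
```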

Bluesky's growth was driven by key events: the public access launch, Brazil's ban on X, X's block policy change, and the US elections. Each event triggered significant user migrations and formed distinct communities.