Yinhuai

@NliGjvJbycSeD6t

PhD@HKUST|Humanoid, Manipulation, CV

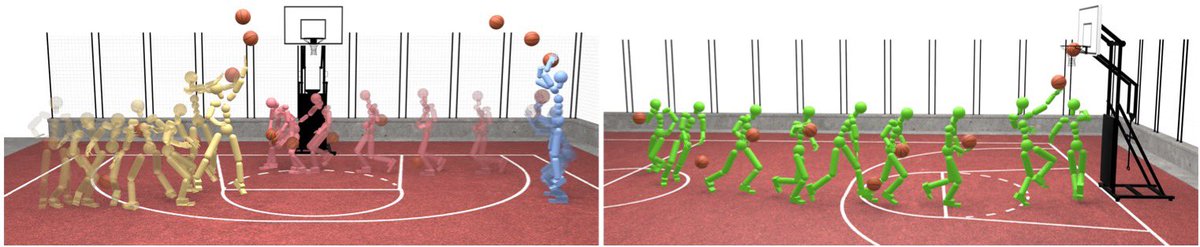

Tired of designing task rewards? Our new work, PhysHOI, enables simulated humanoids to learn diverse basketball skills 🏀 purely from human demonstrations. Code available now! Site: wyhuai.github.io/physhoi-page/ Paper: arxiv.org/abs/2312.04393 Code/Data: github.com/wyhuai/PhysHOI

WoCoCo has been accepted to #CoRL2024. Code will be released in a few weeks. (Complaint: 2 of 3 reviewers raised 90% of the questions and did not join the rebuttal. It felt like an ICRA submission with a long-delayed notification. We have revised our manuscript accordingly, though.)

🚨 Without Any Motion Priors, how to make humanoids do versatile parkour jumping🦘, clapping dance🤸, cliff traversal🧗, and box pick-and-move📦 with a unified RL framework? Introduce WoCoCo: 🧗 Whole-body humanoid Control with sequential Contacts 🎯Unified designs for minimal…

🔥 #CVPR2025 Highlight Paper 🔥 Join us at Hall D #166! 📅 July 14, 5-7 PM 💬 Let's discuss Humanoid Skill Learning! 📜 Paper: “SkillMimic: Learning Basketball Interaction Skills from Demonstrations” 🏀 Project Page: ingrid789.github.io/SkillMimic/ 💻 Code/Data: github.com/wyhuai/SkillMi…

Ever seen a humanoid robot serve beer without spilling a drop? Now you have. 🍻 Introducing Hold My Beer: learning gentle locomotion + stable end-effector control. lecar-lab.github.io/SoFTA/

🤖Can a humanoid robot carry a full cup of beer without spilling while walking 🍺? Introducing Hold My Beer🍺: Learning Gentle Humanoid Locomotion and End-Effector Stabilization Control Project: lecar-lab.github.io/SoFTA/ See more details below👇

Who can stop this guy🤭? Highly robust basketball skills powered by our #SIGGRAPH2025 work Project page: ingrid789.github.io/SkillMimicV2/ There are more cases for enhancing general interaction and locomotion skills!

Trapped by data quality and volume challenges in imitation learning? Check our #SIGGRAPH2025 paper: 🤖SkillMimic-V2: Learning Robust and Generalizable Interaction Skills from Sparse and Noisy Demonstrations. 🌐 Page: ingrid789.github.io/SkillMimicV2/ 🧑🏻💻 Code: github.com/Ingrid789/Skil…

Excited to share our latest work! 🤩 Masked Mimic 🥷: Unified Physics-Based Character Control Through Masked Motion Inpainting Project page: research.nvidia.com/labs/par/maske… with: Yunrong (Kelly) Guo, @ofirnabati, @GalChechik and @xbpeng4. @SIGGRAPHAsia (ACM TOG). 1/ Read…

#CVPR2024 #HandObjectInteraction Excited to share our dataset TACO - the first large-scale real-world 4D bimanual tool usage dataset covering diverse Tool🥄-ACtion🙌-Object🍵 compositions and object geometries. Join us at Poster 213, Arch 4A-E, Fri 10:30am - noon PDT🍺.

From learning individual skills to composing them into a basketball-playing agent via hierarchical RL -- introducing SkillMimic, Learning Reusable Basketball Skills from Demonstrations 🌐: ingrid789.github.io/SkillMimic/ 📜: arxiv.org/abs/2408.15270… 🧑🏻💻: github.com/wyhuai/SkillMi… Work led…

A simulated humanoid now learns how to handle a basketball🏀🏀🏀! New work, PhysHOI, led by @NliGjvJbycSeD6t, learns dynamic human-object interactions (basketball, grabbing, etc.). Site🌐: wyhuai.github.io/physhoi-page/ Paper📄: arxiv.org/abs/2312.04393 Code/Data🧑🏻💻: github.com/wyhuai/PhysHOI (coming…

🤖Introducing 📺𝗢𝗽𝗲𝗻-𝗧𝗲𝗹𝗲𝗩𝗶𝘀𝗶𝗼𝗻: a web-based teleoperation software!  🌐Open source, cross-platform (VisionPro & Quest) with real-time stereo vision feedback.  🕹️Easy-to-use hand, wrist, head pose streaming. Code: github.com/OpenTeleVision…

It took my brain a while to parse what's going on in this video. We are so obsessed with "human-level" robotics that we forget it is just an artificial ceiling. Why don't we make a new species superhuman from day one? Boston Dynamics has once again reinvented itself. Gradually,…

🤔 Ever wondered if simulation-based animation/avatar learnings can be applied to real humanoid in real-time? 🤖 Introducing H2O (Human2HumanOid): - 🧠 An RL-based human-to-humanoid real-time whole-body teleoperation framework - 💃 Scalable retargeting and training using large…

This is how ALOHA's "teleoperation" system works - a fancy word for "remote control". Training robots will be more and more like playing games in the physical world. A human operates a "joystick++" to perform tasks and collect data, or intervene if there's any safety concern.…

Figure-01 has learned to make coffee ☕️ Our AI learned this after watching humans make coffee This is end-to-end AI: our neural networks are taking video in, trajectories out Join us to train our robot fleet: figure.ai/careers

You can now ask your simulated humanoid to perform actions, in REAL-TIME 👇🏻 Powered by the amazing EMDM (@frankzydou, @Alex_wangjingbo, etal) and PHC. EMDM: frank-zy-dou.github.io/projects/EMDM/… PHC: github.com/ZhengyiLuo/Per… Simulation: Isaac Gym

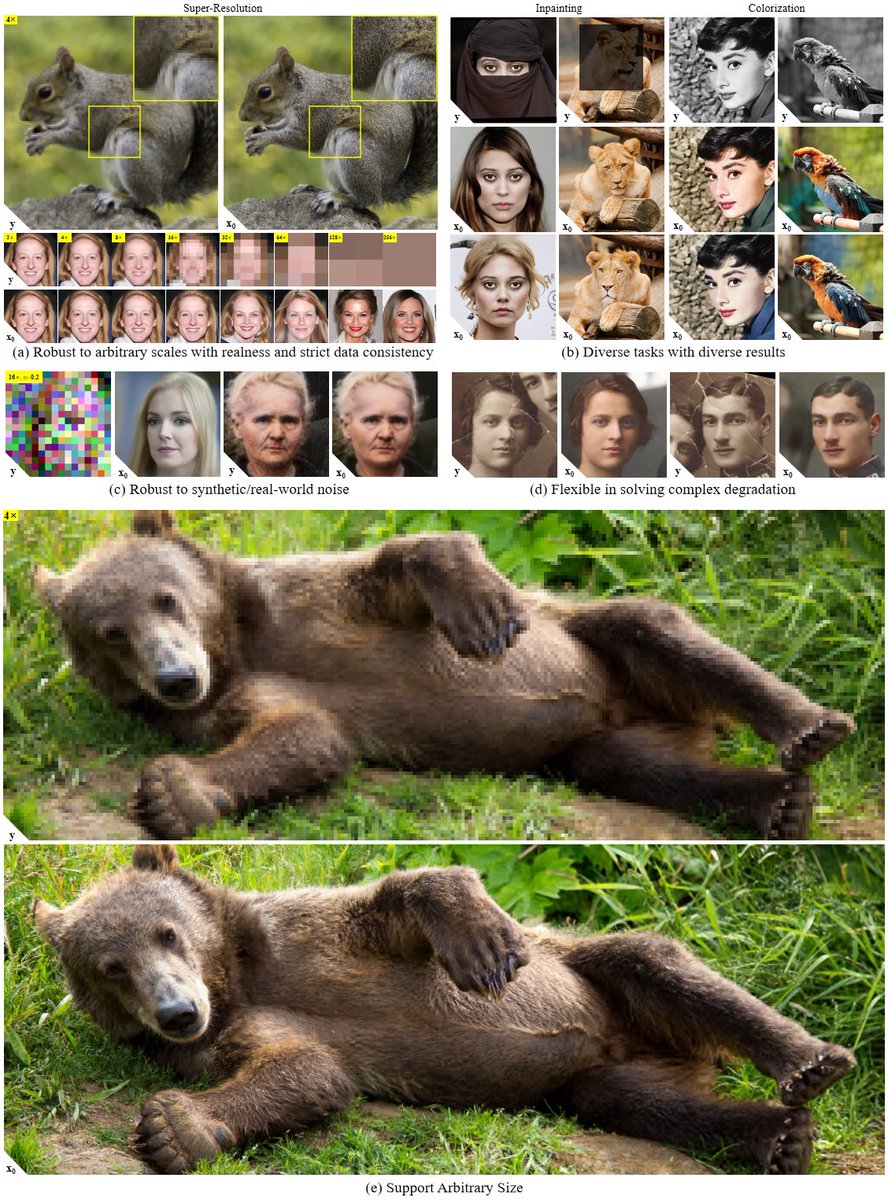

Interested in image restoration? Check our #ICLR2023 spotlight paper "Zero Shot Image Restoration Using Denoising Diffusion Null-Space Model" for a brand new perspective! 👻 arxiv.org/pdf/2212.00490… github.com/wyhuai/DDNM

DDNM is a zero-shot image restoration model. It decomposes the data space into the range space and null space of the linear degradation operator, then refines only the null-space content of an image, which ensures data consistency while improving realness. arxiv.org/abs/2212.00490
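
The range/null-space idea above can be illustrated with a toy 1-D example. This is a minimal sketch, not the DDNM code: it assumes the degradation operator A is 2x average pooling, whose pseudo-inverse A† simply repeats each value twice, and it uses a fixed list `x_est` as a stand-in for whatever a generative model would propose. The function names are hypothetical. The refined result is `A†y + (x_est - A†A x_est)`: the range-space part comes from the measurement and only the null-space part comes from the estimate.

```python
def downsample(x):
    """Assumed degradation A: average each adjacent pair (factor-2 pooling)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def upsample(y):
    """Pseudo-inverse of A for this toy case: repeat each value twice."""
    return [v for v in y for _ in range(2)]


def null_space_refine(y, x_est):
    """Combine the range-space part of y with the null-space part of x_est."""
    range_part = upsample(y)                 # A† y
    projected = upsample(downsample(x_est))  # A† A x_est
    # x_est - A† A x_est lies in the null space of A, so it cannot change A x.
    return [r + (e - p) for r, e, p in zip(range_part, x_est, projected)]


x_true = [1.0, 3.0, 2.0, 6.0]   # unknown clean signal
y = downsample(x_true)          # observed low-resolution measurement
x_est = [0.0, 4.0, 5.0, 1.0]    # stand-in for a model's estimate
x_hat = null_space_refine(y, x_est)

# Data consistency: degrading the refined signal reproduces the measurement.
print(downsample(x_hat) == y)
```

Whatever `x_est` is, the result stays consistent with `y`, so the estimate only influences detail that the measurement cannot see.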