Nima Gard

@NimaGard

Director of AI @PathRobotics Build, Build, Build

We have an excellent panel and speaker. Join us on July 24 at our HQ. @b_k_bucher @NimaFazeli7 @stepjamUK @peterzhqian

Curious about how foundational models are reshaping robotics? Join us on July 24 for Real World Robotics. A free evening of ideas, insights, and conversation at the edge of AI and autonomy. July 24 | 5:00 – 8:00 PM | Path Robotics HQ Register for free here:…

We're only a week out from our next event! Come visit our headquarters, meet the speakers & build some new connections. There are a few spots left! RSVP for free here ➡️ form.typeform.com/to/snp79xHN

Much needed

🚀 Introducing PhysiX: One of the first large-scale foundation models for physics simulations! PhysiX is a 4.5B parameter model that unifies a wide range of physical systems, from fluid dynamics to reaction-diffusion, outperforming specialized, state-of-the-art models.

New model alert in Transformers: EoMT! EoMT greatly simplifies the design of ViTs for image segmentation 🙌 Unlike Mask2Former and OneFormer which add complex modules like an adapter, pixel decoder and Transformer decoder on top, EoMT is just a ViT with a set of query tokens ✅

Check out @phillip_isola’s work. It proposes using language as a way to convey visual information. It’ll be interesting to study the 3D spatial understanding of LLMs for robotics applications through similar experiments.

Slides from my talk on "Language as a Visual Format" at the Visual Generative Modeling workshop at CVPR (mostly derived from slides made by @hyojinbahng and @carolinemchan): dropbox.com/scl/fi/5ok46te…

The slides for my CVPR talks are now available at latentspace.cc

I'm giving 3 talks at #CVPR2025 workshops and tutorials: 1⃣ "Rare Yet Real: Generative Modeling Beyond the Modes" will cover some of our work on gen AI for science where tail modeling and predictor calibration are crucial (Wed 11:10 - Room 102 B). uncertainty-cv.github.io/2025/

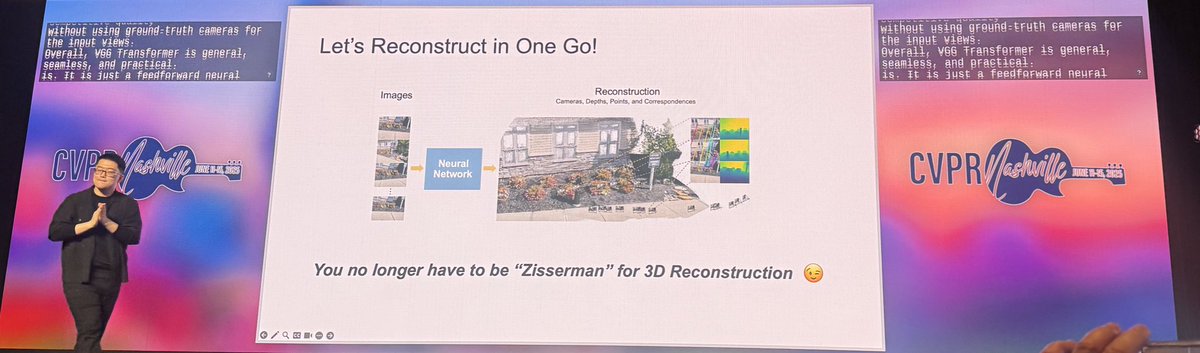

“You no longer have to be Zisserman for 3D reconstruction” 😁 - VGG Transformer @jianyuan_wang congrats on the best paper award #CVPR2025