Nima Fazeli

@NimaFazeli7

Assistant Professor of Robotics (@UMRobotics), Computer Science (@UMichCSE), and Mechanical Engineering (@umichme) -- University of Michigan, Ann Arbor

We have an excellent panel and speaker. Join us on July 24 at our HQ. @b_k_bucher @NimaFazeli7 @stepjamUK @peterzhqian

Curious about how foundational models are reshaping robotics? Join us on July 24 for Real World Robotics. A free evening of ideas, insights, and conversation at the edge of AI and autonomy. July 24 | 5:00 – 8:00 PM | Path Robotics HQ. Register for free here:…

Michael Jordan gave a short, excellent, and provocative talk recently in Paris - here's a few key ideas
- It's all just machine learning (ML) - the AI moniker is hype
- The late Dave Rumelhart should've received a Nobel prize for his early ideas on making backprop work
1/n

Great conversations at #RSS2025 with colleagues @pulkitology @ebiyik and others!

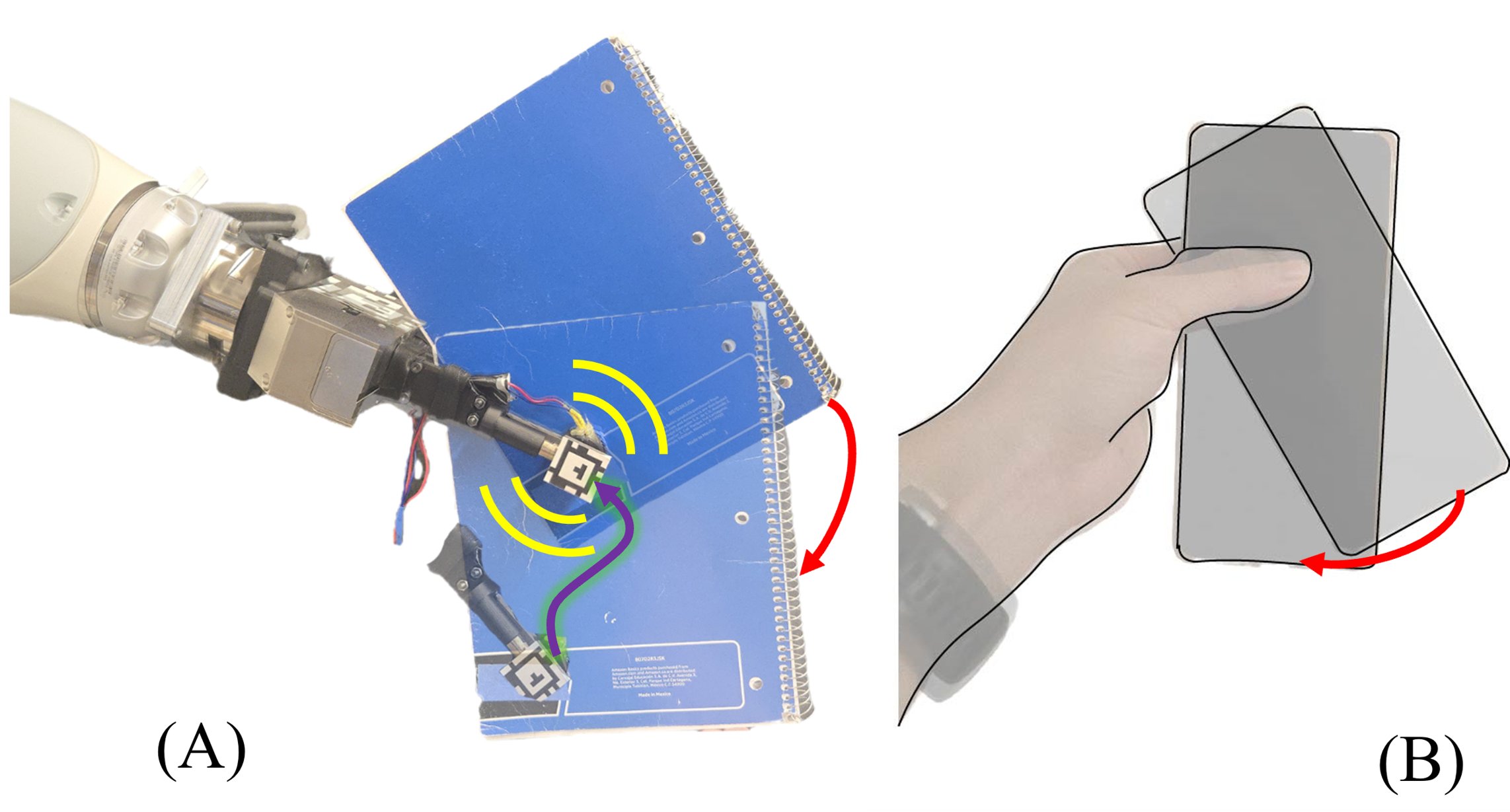

Vib2Move shows how simple hardware + smart motion can achieve dexterity.
* Minimal assumptions on object shape or surface
* Works without force control
* Fast response rate
* Great for constrained or low-cost settings

Huge thanks to my advisor @NimaFazeli7 and our amazing lab.

And with @_krishna_murthy @aprorok @danfei_xu @NimaFazeli7

Just gave my first oral presentation today in Manipulation I session at RSS! Come check out my poster at #6 too!

How can robots feel and localize extrinsic contacts through both vision👀and touch🖐️? #RSS2025 @RoboticsSciSys Introducing ViTaSCOPE: a sim-to-real visuo-tactile neural implicit representation for in-hand object pose and extrinsic contact estimation! jayjunlee.github.io/vitascope/

👀🤚 Robots that see and feel at once! ViTaSCOPE fuses point-cloud vision with high-res tactile shear to nail in-hand 6-DoF pose plus contact patches—trained entirely in sim, zero-shot on hardware. Dive into the demos 👇 jayjunlee.github.io/vitascope/ #RSS2025 #robotics #tactileSensing

🚀 #RSS2025 sneak peek! We teach robots to shimmy objects with fingertip micro-vibrations precisely—no regrasp, no fixtures. 🎶⚙️ Watch Vib2Move in action 👇 vib2move.github.io #robotics #dexterousManipulation

I'm very excited to host the Robotics Worldwide Workshop at MIT with Amanda Prorok next week! We have an incredible lineup of roboticists from around the world who will join us at MIT for lightning talks, a panel discussion, and a poster session.

Check out @NimaFazeli7’s RI seminar, “Sensing the Unseen: Dexterous Tool Manipulation Through Touch and Vision” Thank you for visiting us and sharing your expertise, Nima! youtu.be/YhbGGtKr9NI?si…

When you're making a business decision, it is generally good to pick simple, fast, and cheap 💸 options. When you're making a career decision (e.g., academia 👩🎓🧑🎓), it pays to be an expert in hard things.

Dear PhD rec portals: Stop asking me to rank 'motivation' or other traits in percentiles. Just take my letter. How am I supposed to know if someone is 'top 2%' when I've written recs for 20 students? Do you think I remember letters from 2020? This isn't stats; it's chaos. 🚩

Earlier this year, @NimaFazeli7 received a @NSF CAREER Award. We took the chance to catch up with Professor Fazeli to learn a bit more about the fascinating research on "intelligent and dexterous robots that seamlessly integrate vision and touch."

Perfect start to the #CoRL2024 week! Was a pleasure organizing the NextGen Robot Learning Symposium at @TUDarmstadt with @firasalhafez @GeorgiaChal Thanks to the speakers for the great talks! @YunzhuLiYZ @NimaFazeli7 @Dian_Wang_ @HaojieHuang13 @Vikashplus @ehsanik @Oliver_Kroemer

Cheers to all the authors who received awards for their posters/demos at Michigan AI Symposium! 🏆 🎉 Your innovative research & hard work truly stood out! Thank you to session chairs: @dangengdg & Zilin Wang. Presenters: Yinpei Dai, Zixuan Huang, Daniel Philipov, Yixuan Wang

🆕🔥Dual asymmetric limit surfaces and their applications to #planarmanipulation by Xili Yi, An Dang & @NimaFazeli7 🔗bit.ly/3TVmPqy @UMRobotics @umichme #RoboticManipulation

🌟 New paper 🌟 🤖💬We are excited to introduce RACER, a flexible supervisor-actor framework that enhances visuomotor policies through rich language guidance with a fine-tuned VLM for task execution and failure correction. …h-language-failure-recovery.github.io More👇

Work done by our amazing team: @YinpeiD (co-lead), @NimaFazeli7 @UMRobotics, Joyce Chai @SLED_AI @michigan_AI. ✨For more details, please visit: 📄 Paper: arxiv.org/abs/2409.14674 🌐 Website: …h-language-failure-recovery.github.io

Imagine controlling robots with simple gestures! We've developed a system that lets you point at objects and tell robots to 'move this' or 'close that,' with language-gesture-controlled video generation! Check out our project dubbed "this&that": cfeng16.github.io/this-and-that/