Joel Bauer

@Neuro_Joel

Neuroscientist at heart, with too many hobbies. Currently a postdoc at the Sainsbury Wellcome Centre in London.

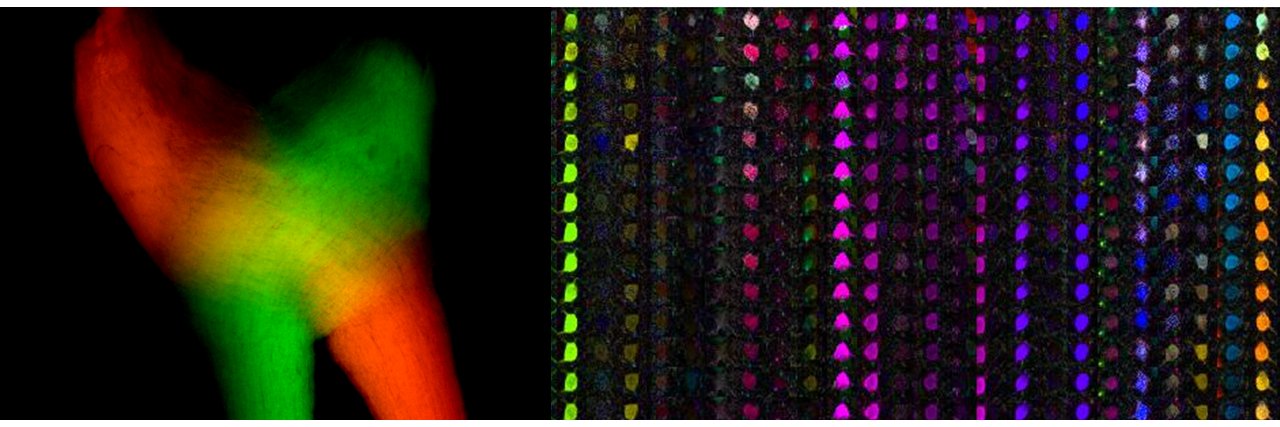

🚀 Excited to share the first preprint from my postdoc in the labs of @ClopathLab and Troy Margrie at @SWC_Neuro! We've reconstructed movies from mouse visual cortex activity. 📽️✨ #Neuroscience #AI 1/6 doi.org/10.1101/2024.0… youtu.be/TA6Oi5NfuMs

Introducing RatInABox: an open-source package for motion and cell modelling in continuous environments. RatInABox makes modelling the neural processes underpinning spatial navigation faster, easier, more reproducible and more realistic. Give it a go here: shorturl.at/mqP47

Can we hear what's inside your head? 🧠→🎶 Our new paper in @PLOSBiology, led by Jong-Yun Park, presents an AI-based method to reconstruct arbitrary natural sounds directly from a person's brain activity measured with fMRI. doi.org/10.1371/journa…

Amazing work! Looking forward to playing with this model 🤓

After 7 years, thrilled to finally share our #MICrONS functional connectomics results! We recorded activity from ~75K neurons in visual cortex in a single mouse, then mapped its wiring using electron microscopy. To systematically characterize neuron function, we built the first…

In @eLife: Layer 6 corticocortical neurons are a major route for intra- and interhemispheric feedback doi.org/10.7554/eLife.…

Going to #Cosyne2025? Check out our researchers’ posters and talks: sainsburywellcome.org/web/content/sw… @CosyneMeeting

Our new reconstruction paper using Inverse Receptive Field Attention (IRFA) is online! IRFA reconstructs images and visualizes feature and spatial Inverse Receptive Fields. The architecture does not rely on pre-trained models. 👉 arxiv.org/pdf/2501.03051

Early days, but it looks like our authors don't think we need an impact factor either! Submissions have been strong in both number and quality since the Clarivate announcement. We should not let a multibillion-dollar company dictate how science is evaluated!

Following the news that eLife will not receive an Impact Factor in 2025, we’ve shared an update on how our model is doing since we were first placed “on hold” by Web of Science, and what we’re up to now. Find out more. elifesciences.org/inside-elife/c…

Our new work on reconstructing visual perception is online as a #NeurIPS paper! "MonkeySee: Space-time-resolved reconstructions of natural images from macaque multi-unit activity" 🧠📷: openreview.net/pdf?id=OWwdlxw… Looking forward to connecting in Vancouver at #NeurIPS2024!

What are the brain’s “real” tuning curves? Our new preprint "SIMPL: Scalable and hassle-free optimisation of neural representations from behaviour” argues that existing techniques for latent variable discovery are lacking. We suggest a much simpl-er way to do things. 1/21🧵

Our study on how to overcome off-target cortical activity evoked by optical stimulation in the mouse brain in vivo has now been peer-reviewed and published. Please see: cell.com/iscience/fullt…

Are you at #SfN24? Check out the posters from our researchers @CampagnerDario, Yu Lin Tan, and @Neuro_Joel today.

If you're at #SfN24 and interested in image/movie reconstruction from brain activity, come check out my poster PSTR024.03 (board E27) between 13:00 and 17:00 today. 👀🧠

A supplementary page for our recent work on reconstructing images imagined in the mind from brain activity has now been released. It includes demo code on Google Colab and an FAQ. Please take a look if you are interested. sites.google.com/view/mentalima…

🚨 Poster alert 🚨 Happy to share our science on representational drift in mouse V1 at #FENSForum2024 this afternoon. Drop by at board PS04-27PM-144 to chat :) see you there! @Neuro_Joel @t_bonhoeffer

Our preprint is out! During my PhD at the @NIN_knaw, we studied the role of concept cells (found in the human hippocampus) in language comprehension. biorxiv.org/content/10.110…

I get to present our poster about two-timeframe monosynaptic rabies tracing and visual system plasticity this afternoon (Wednesday) at #FENS2024, PS02-26PM-137. Come by! 😊😊 @MPIforBI @t_bonhoeffer @MHuebener @xpieter

🧠👁️Our MindEye2 preprint is out! We reconstruct seen images from fMRI brain activity using only 1 hour of training data. This is possible by first pretraining a shared-subject model using other people's data, then fine-tuning on a held-out subject with only 1 hour of data.🧵