Richard Antonello

@NeuroRJ

Postdoc in the Mesgarani Lab at Columbia University. Studying how the brain processes language by using LLMs. (Formerly @HuthLab at UT Austin)

Our @CogCompNeuro GAC paper is out! We focus on two main questions: 1⃣ How should we use neuroscientific data in model development (raw experimental data vs. qualitative insights)? 2⃣ How should we collect experimental data for model development (model-free vs. model-based)?

Our CCN 2022 GAC write-up is finally out! doi.org/10.51628/001c.… (Hoping it’s a good read for all the trainees and colleagues!) @GretaTuckute @dfinz @eshedmargalit @jcbyts @alonamarie @s_y_chung @ev_fedorenko @KriegeskorteLab @kalatwt

LLMs excel at fitting finetuning data, but are they learning to reason or just parroting🦜? We found a way to probe a model's learning process to reveal *how* each example is learned. This lets us predict model generalization using only training data, amongst other insights: 🧵

Can we precisely and noninvasively modulate deep brain activity just by riding the natural visual feed? 👁️🧠 In our new preprint, we use brain models to craft subtle image changes that steer deep neural populations in primate IT cortex. Just pixels. 📝arxiv.org/abs/2506.05633

We'll be presenting this at #ACL2025 ! Come find me and @tomjiralerspong in Vienna :)

New paper! 🌟How does LM representational geometry encode compositional complexity? A: it depends on how we define compositionality! We distinguish compositionality of form vs. meaning, and show LMs encode form complexity linearly and meaning complexity nonlinearly... 1/9

🤯Your LLM just threw away 99.9% of what it knows. Standard decoding samples one token at a time and discards the rest of the probability mass. Mixture of Inputs (MoI) rescues that lost information, feeding it back for more nuanced expressions. It is a brand new…

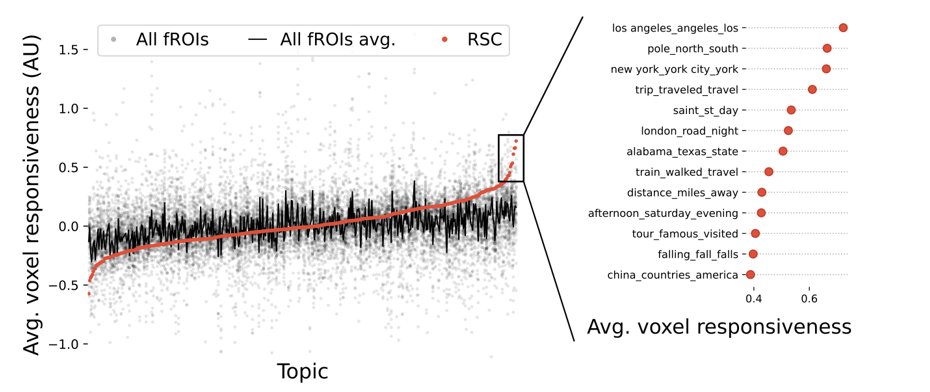

For those attending NAACL, today I'll be presenting recent work on how we can use language encoding models to identify functional specialization throughout cortex. Stop by my talk at 10:30 at the CMCL workshop!

Today, we're releasing a new paper – One-Minute Video Generation with Test-Time Training. We add TTT layers to a pre-trained Transformer and fine-tune it to generate one-minute Tom and Jerry cartoons with strong temporal consistency. Every video below is produced directly by…

Excited to introduce funROI: A Python package for functional ROI analyses of fMRI data! funroi.readthedocs.io/en/latest/ #fMRI #Neuroimaging #Python #OpenScience Work w @neuranna 🧵👇

🚨Announcing our #ICLR2025 Oral! 🔥Diffusion LMs are on the rise for parallel text generation! But unlike autoregressive LMs, they struggle with quality, fixed-length constraints & lack of KV caching. 🚀Introducing Block Diffusion—combining autoregressive and diffusion models…

Vectorization into a neat SVG!🎨✨ Instead of generating a messy SVG (left), we produce a structured, compact representation (right) - enhancing usability for editing and modification. Accepted to #CVPR2025 !

Reasoning models lack atomic thought ⚛️ Unlike humans using independent units, they store full histories🤔 Introducing Atom of Thoughts (AOT): lifts gpt-4o-mini to 80.6% F1 on HotpotQA, surpassing o3-mini and DeepSeek-R1! The best part? It plugs into ANY framework 🔌 1/5

🎉Excited to share: My first ML conference paper, Population Transformer 🧠, is an Oral at #ICLR2025! This work has truly evolved since its first appearance as a workshop paper last year. So thankful to have worked with the best advisors + collaborators! 🤗 More soon!

How can we train models on more brains and sensor layouts? We present Population Transformer (PopT) which learns population-level interactions on intracranial electrodes, with 🔥decoding and interpretability benefits. See our poster at #ICML2024 @AI_for_Science 12pm

EEG Decoding with Multi-Timescale Language Models. Our paper was recently published in Computational Linguistics. Tweetprint to come shortly 🤞doi.org/10.1162/coli_a…

New research from Meta FAIR — Meta Memory Layers at Scale. This work takes memory layers beyond proof-of-concept, proving their utility at contemporary scale ➡️ go.fb.me/3lbt4m

"The Illusion Illusion" vision language models recognize images of illusions... but they also say non-illusions are illusions too

Catch me and @czlwang presenting our poster today (12/14) 3:30-5:30pm at the #NeurIPS2024 NeuroAI Workshop! 🧠 neuroai-workshop.github.io Paper: arxiv.org/abs/2406.03044

How to upset the (few remaining) neuroscientists at NeurIPS 101

New post! What do brain scores teach us about brains? Does accounting for variance in the brain mean that an ANN is brain-like? 1/

The UniReps Workshop is happening THIS SATURDAY at #NeurIPS! 🤖🧠 Join us for a day of insightful talks and engaging discussions with @s_y_chung, @phillip_isola, @NeelNanda5, @ermgrant, @leavittron, @ItsNeuronal, Marco Cuturi and Stefanie Jegelka! 🎤 Check out the full…

Come by our poster (#3801), exploring how we can use the question-answering abilities of LLMs to build more #interpretable models of language processing in the 🧠, starting in one hour at #NeurIPS !

LLM embeddings are opaque, hurting them in contexts where we really want to understand what’s in them (like neuroscience). Our new work asks whether we can craft *interpretable embeddings* just by asking black-box LLMs yes/no questions. 🧵 arxiv.org/abs/2405.16714