Mehul Damani

@MehulDamani2

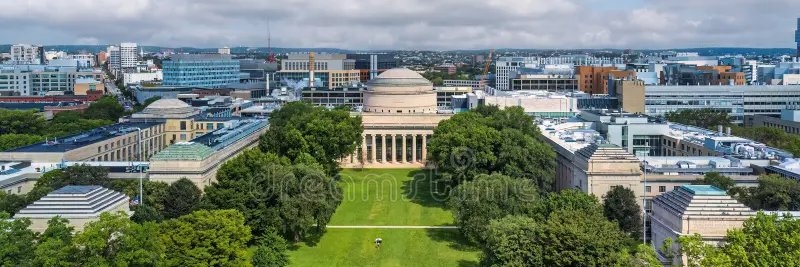

PhD Student at MIT | Reinforcement Learning, NLP

🚨New Paper!🚨 We trained reasoning LLMs to reason about what they don't know. o1-style reasoning training improves accuracy but produces overconfident models that hallucinate more. Meet RLCR: a simple RL method that trains LLMs to reason and reflect on their uncertainty --…

👉 New preprint! Today, many of the biggest challenges in LM post-training aren't just about correctness, but rather consistency & coherence across interactions. This paper tackles some of these issues by optimizing reasoning LMs for calibration rather than accuracy...

Come check out our ICML poster on combining Test-Time Training and In-Context Learning for on-the-fly adaptation to novel tasks like ARC-AGI puzzles. I will be presenting with @jyo_pari at E-2702, Tuesday 11-1:30!

Excited to share our position paper on the Fractured Entangled Representation (FER) Hypothesis! We hypothesize that the standard paradigm of training networks today — while producing impressive benchmark results — is still failing to create a well-organized internal…

Could a major opportunity to improve representation in deep learning be hiding in plain sight? Check out our new position paper: Questioning Representational Optimism in Deep Learning: The Fractured Entangled Representation Hypothesis. The idea stems from a little-known…

I am super excited to be presenting our work on adaptive inference-time compute at ICLR! Come chat with me on Thursday 4/24 at 3PM (Poster #219). I am also happy to chat about RL/reasoning/RLHF/inference scaling (DMs are open)!

Inference-time compute can boost LM performance, but it's costly! How can we optimally allocate it across prompts? In our latest work, we introduce a simple method to adaptively allocate more compute to harder problems. 🔥 Paper: arxiv.org/abs/2410.04707 Learn more! 1/N

The next frontier for AI shouldn’t just be generally helpful. It should be helpful for you! Our new paper shows how to personalize LLMs: efficiently, scalably, and without retraining. Meet PReF (arxiv.org/abs/2503.06358) 1/n

I just wrote my first blog post in four years! It is called "Deriving Muon". It covers the theory that led to Muon and how, for me, Muon is a meaningful example of theory leading practice in deep learning (1/11)

[1/x] can we scale small, open LMs to o1 level? Using classical probabilistic inference methods, YES! Joint @MIT_CSAIL / @RedHat AI Innovation Team work introduces a particle filtering approach to scaling inference w/o any training! check out …abilistic-inference-scaling.github.io

🧩 Why do task vectors exist in pretrained LLMs? Our new research uncovers how transformers form internal abstractions and the mechanisms behind in-context learning (ICL).

With @OpenAI o1, we developed one way to scale test-time compute, but it isn't the only way and might not be the best way. I'm excited to see academic researchers explore new approaches in this direction.

Why do we treat train and test times so differently? Why is one “training” and the other “in-context learning”? Just take a few gradient steps at test time (a simple way to increase test-time compute) and get SoTA on the ARC public validation set: 61%, matching the avg. human score! @arcprize

It was a great pleasure working on this project with amazing collaborators! Excited to see more opportunities opened up by scaling test-time compute!

Thanks for the attention, a couple of important points: 1) See @MindsAI_Jack, their team was the first to apply this method privately, and they ranked 1st in the competition. 2) See the concurrent work as well: x.com/ellisk_kellis/… 3) Obviously this is not AGI, it's a…
