Marco Mascorro

@Mascobot

Partner @a16z (investor in @cursor_ai, @thinkymachines, @bfl_ml, @WaveFormsAI & more) | Roboticist | Cofounder @Fellow_AI | @MIT 35 under 35 | Opinions my own.

There's so much infra to build for RL, at least in the open source world (environments, setups for reward shaping, rollouts, etc.). I guess most AI labs have already done a lot of this, but it feels so early in open source RL.

In the past month, Cursor found 1M+ bugs in human-written PRs. Over half were real logic issues that were fixed before merging. Today, we're releasing the system that spotted these bugs. It's already become a required pre-merge check for many teams.

Qwen3-Coder is out, and it's almost on par with Claude Sonnet 4 (better in 6 benchmarks). This seems like a great OS coding model:

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

Kimi K2's paper is out. Kimi 1.5 was also a great paper, but all the attention went to DeepSeek R1 at the time. Kimi 1.5 also had an interesting approach to RL back then (and before Dr. GRPO, which improves GRPO by removing the division by standard deviation (which…
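For anyone who hasn't looked at the GRPO vs. Dr. GRPO difference mentioned above, here is a minimal sketch of just the advantage step. It assumes one group of sampled responses per prompt with scalar rewards; the function names, the `eps` value, and the use of population standard deviation are illustrative assumptions, not taken from either paper's code.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-6):
    """GRPO-style advantages: center by the group mean, then divide by the group std.

    eps and the population std are assumptions for this sketch.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def dr_grpo_advantages(rewards):
    """Dr. GRPO-style advantages: center by the group mean only, no std division."""
    mu = mean(rewards)
    return [r - mu for r in rewards]


# Hypothetical rewards for four sampled responses to one prompt.
rewards = [1.0, 0.0, 0.0, 0.0]
print(grpo_advantages(rewards))
print(dr_grpo_advantages(rewards))
```

With the std division, groups whose rewards barely vary get their advantages scaled up; dropping it keeps the advantage on the same scale as the raw reward gap.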

Kimi put out their paper :) github.com/MoonshotAI/Kim…

Their bet allowed for formal math AI systems (like AlphaProof). In 2022, almost nobody thought an LLM could be IMO gold level by 2025.

We are seeing much faster AI progress than Paul Christiano and Yudkowsky predicted, who had gold in 2025 at 8% and 16% respectively, by methods that are more general than expected

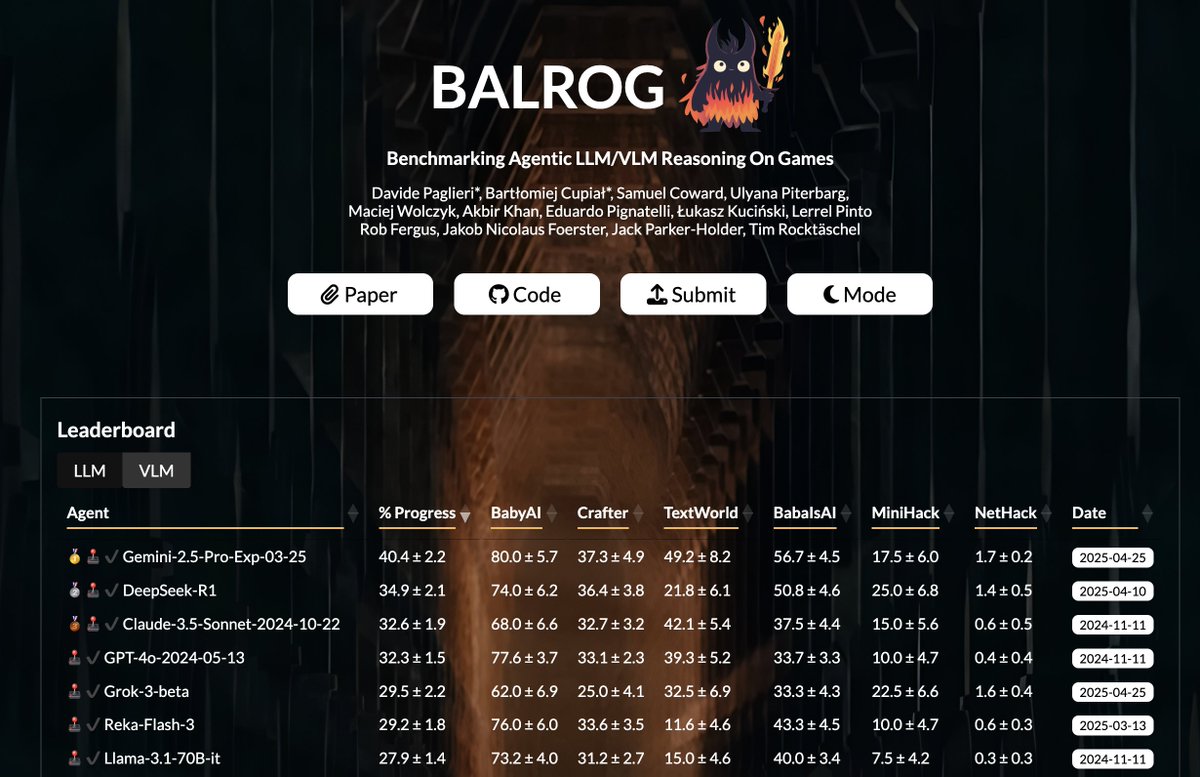

This is very cool too in the direction of benchmarking agentic LLM/VLM reasoning on games:

Pretty excited about benchmarks in the form of games, especially when the models don't have any prior knowledge of the games from the training set. We are going all the way back to pre-LLM techniques (OpenAI Dota2, OpenAI RL Gym, NetHack RL learning environments, etc.). We…

Today we’re releasing our first public preview of ARC-AGI-3: the first three games. Version 3 is a big upgrade over v1 and v2 which are designed to challenge pure deep learning and static reasoning. In contrast, v3 challenges interactive reasoning (eg. agents). The full version…

ChatGPT Agent's approach of combining DeepResearch + Operator is very interesting and probably the best of both worlds: search/crawl over lots of input text data + Operator for interactivity (visual input, auth, etc. with mouse and keyboard). For web agents, it was always unclear…

ChatGPT can now do work for you using its own computer. Introducing ChatGPT agent—a unified agentic system combining Operator’s action-taking remote browser, deep research’s web synthesis, and ChatGPT’s conversational strengths.

This is very cool: a Live-Stream Diffusion (LSD) AI model. Input any video stream (camera/video) and transform it into a diffusion video in real time (<40ms latency). Congrats @DLeitersdorf & @DecartAI team!

Introducing MirageLSD: The First Live-Stream Diffusion (LSD) AI Model. Input any video stream, from a camera or video chat to a computer screen or game, and transform it into any world you desire, in real-time (<40ms latency). Here’s how it works (w/ demo you can use!):

Not sure what will get announced tomorrow, but something that could REALLY move the needle with agents is something like Operator:

💯

agree with lots of what jensen has been saying about ai and jobs; there is a ton of stuff to do in the world. people will 1) do a lot more than they could do before; ability and expectation will both go up 2) still care very much about other people and what they do 3) still be…

Super thrilled to back @miramurati and the amazing team @thinkymachines - a GOAT team that has made major contributions to RL, pre-training/post-training, reasoning, multimodal, and of course ChatGPT! No one is better positioned to advance the frontier. @martin_casado @pmarca…

Thinking Machines Lab exists to empower humanity through advancing collaborative general intelligence. We're building multimodal AI that works with how you naturally interact with the world - through conversation, through sight, through the messy way we collaborate. We're…

Can’t wait for Kimi K2 reasoning - K2 (base) seems pretty good even on creative writing. Hopefully we can get better training/sampling efficiencies with RL and can't wait to see how these models perform when RL compute is the vast majority of training (and hopefully when it's…

Remember: K2 is *not* a reasoning model. And very few active parameters in the MoE. So it's using fewer tokens, *and* each token is cheaper and faster.

Neat! @danielhanchen you guys work fast! Dynamic 1.8-bit (245GB from 1.1TB) for Kimi K2:

You can now run Kimi K2 locally with our Dynamic 1.8-bit GGUFs! We shrank the full 1.1TB model to just 245GB (-80% size reduction). The 2-bit XL GGUF performs exceptionally well on coding & passes all our code tests Guide: docs.unsloth.ai/basics/kimi-k2 GGUFs: huggingface.co/unsloth/Kimi-K…

It would be super neat if the labs producing the top OS LLMs like Kimi K2, etc. could release smaller distilled versions of them (like DeepSeek R1 with the smaller distills they released), so that we can all run the most optimized, on-par speculative decoding

The improvement rate of Cursor is amazing, it keeps getting even better and better

Cursor is getting even better, and better.

Lee Kuan Yew: “Air conditioning was a most important invention for us, perhaps one of the signal inventions of history. It changed the nature of civilization by making development possible in the tropics. Without air conditioning you can work only in the cool early-morning…